- ¹CNRS/UMR 6112, Laboratoire de Planétologie et Géodynamique, Nantes cedex 3, France (stephane.lemouelic@univ-nantes.fr)

- ²School of Physical Sciences, The Open University, Milton Keynes, MK7 6AA, UK

- ³INAF-IAPS, Roma, Italy

- ⁴Institut für Planetologie, Westfälische Wilhelms-Universität, Münster, Germany

- ⁵University of Padova, Dipartimento di Geoscienze, Padova, Italy

- ⁶Department of Physics and Earth Sciences, Jacobs University Bremen, Germany

Virtual Reality (VR, using headsets) and Augmented Reality (AR, using a smartphone or tablet), coupled with 3D photogrammetric reconstructions, are increasingly used in science, education and outreach. These techniques are not new [e.g., 1, 2], but they have become progressively more widespread since the release in 2016 of technologically mature and cost-effective hardware accessible to the general public. In planetology, VR and AR make it possible, in principle, to simulate field trips to remote places that are otherwise inaccessible to humans. By combining high-resolution imagery with spectral or morphological data gathered by robotic explorers (orbiters, landers, rovers), we can create integrated virtual environments that accurately represent the surfaces of planetary bodies and allow different datasets to be cross-compared. These virtual environments make it possible to navigate at the global scale using orbital data and, where in situ data are available, to move down to the surface to visualize and analyze local outcrops. This is particularly true of the Moon and Mars, for which both extensive remote-sensing and ground-truth data are available [3, 4, 5].
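Navigating from the global scale down to the surface implies placing orbital elevation data on a 3D globe. The sketch below illustrates one common way of doing this; it is not the authors' pipeline. It assumes a spherical reference body (the mean lunar radius, 1737.4 km), planetocentric latitude/longitude in degrees, and elevations in metres above that sphere. The function names `geographic_to_cartesian` and `dem_to_vertices` are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

# Mean lunar radius in metres (1737.4 km); assumes a spherical reference body.
MOON_RADIUS_M = 1_737_400.0

Vertex = Tuple[float, float, float]


def geographic_to_cartesian(lat_deg: float, lon_deg: float, elevation_m: float,
                            radius_m: float = MOON_RADIUS_M) -> Vertex:
    """Convert planetocentric latitude/longitude (degrees) and elevation above
    the reference sphere (metres) into body-fixed Cartesian coordinates (metres)."""
    r = radius_m + elevation_m
    lat = math.radians(lat_deg)
    lon = math.radians(lon_deg)
    x = r * math.cos(lat) * math.cos(lon)
    y = r * math.cos(lat) * math.sin(lon)
    z = r * math.sin(lat)
    return (x, y, z)


def dem_to_vertices(lats: Sequence[float], lons: Sequence[float],
                    elevations: Sequence[Sequence[float]],
                    radius_m: float = MOON_RADIUS_M) -> List[Vertex]:
    """Turn a regular lat/lon elevation grid (rows = latitudes, columns =
    longitudes) into a flat, row-major vertex list that a mesh can index."""
    vertices: List[Vertex] = []
    for i, lat in enumerate(lats):
        for j, lon in enumerate(lons):
            vertices.append(geographic_to_cartesian(lat, lon, elevations[i][j], radius_m))
    return vertices
```

For example, `geographic_to_cartesian(0.0, 0.0, 0.0)` places the point at latitude 0°, longitude 0° on the x axis, at (1737400.0, 0.0, 0.0). A real implementation would typically use the body's official reference surface and tile the DEM into levels of detail, so that the globe stays responsive while high-resolution data are streamed in near the viewer.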

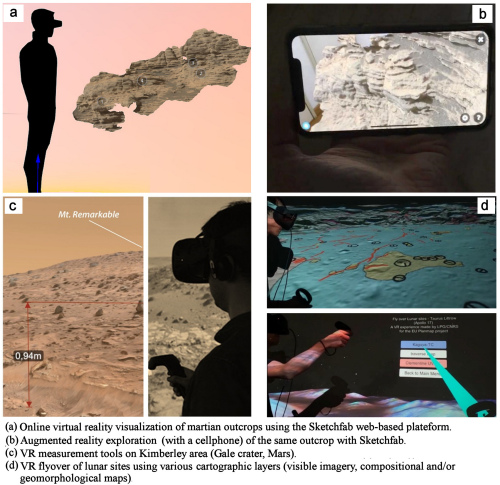

We are investigating how information from various sources can be combined in a comprehensive way to display, manipulate, analyze and share both analytical data and results. For example, we have integrated high-resolution visible imagery, digital elevation and outcrop models, geomorphological maps, and compositional maps derived from spectroscopic measurements for several test sites, including Copernicus crater and the Apollo 17 landing site on the Moon, Crommelin crater and the Kimberley area (Gale crater) on Mars, and the Hokusai quadrangle on Mercury. VR and/or AR rendering of simple orbital and/or ground-based 3D models can be performed with a web-based solution such as Sketchfab (e.g. https://sketchfab.com/LPG-3D or https://sketchfab.com/planmap.eu), which makes it possible to visualize, interact with and share medium-resolution 3D versions of these multi-scale data (Fig. 1a and 1b). A more powerful and versatile solution based on a game engine [6] allows more complex features to be developed, such as dedicated measurement tools (Fig. 1c) or multi-layering capabilities (Fig. 1d). These approaches open new possibilities for data exploration and analysis, and for research and education applications during “virtual planetary field trips”.
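To illustrate what a dedicated measurement tool (Fig. 1c) might compute, here is a minimal sketch of measuring between two points picked on an outcrop model. It is not the authors' game-engine implementation. It assumes the model is georeferenced in a local metric frame with x = east, y = north and z = up (metres); the names `Point3D`, `Measurement` and `measure` are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Point3D:
    """A point picked on a 3D model, in an assumed local metric frame
    (x = east, y = north, z = up), in metres."""
    x: float
    y: float
    z: float


@dataclass(frozen=True)
class Measurement:
    distance_m: float    # straight-line (3D) distance
    horizontal_m: float  # distance projected onto the horizontal plane
    vertical_m: float    # signed height difference (b minus a)
    slope_deg: float     # inclination of the segment from the horizontal


def measure(a: Point3D, b: Point3D) -> Measurement:
    """Distance, horizontal/vertical separation and slope between two picked points."""
    dx, dy, dz = b.x - a.x, b.y - a.y, b.z - a.z
    horizontal = math.hypot(dx, dy)
    distance = math.hypot(horizontal, dz)
    # atan2 also handles purely vertical segments (horizontal == 0) without dividing by zero.
    slope = math.degrees(math.atan2(abs(dz), horizontal))
    return Measurement(distance, horizontal, dz, slope)
```

For instance, `measure(Point3D(0, 0, 0), Point3D(3, 4, 5))` yields a 5 m horizontal separation, a 5 m height difference, a 3D distance of about 7.07 m and a slope of 45°. In an interactive environment, the two points would typically come from ray casts against the model mesh; that part depends on the engine and is omitted here.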

Acknowledgments: This project has received funding from the European Union’s Horizon 2020 research and innovation programme under grant agreement No 776276 (PLANMAP).

References:

[1] McGreevy, M. W. (1993), Virtual reality and planetary exploration. In Virtual Reality; Elsevier: Amsterdam, The Netherlands; pp. 163–197, ISBN 0-12-745045-9.

[2] Favalli, M. et al. (2012), Multiview 3D reconstruction in geosciences. Comput. Geosci., 44, 168–176.

[3] Ostwald, A. and Hurtado, J. (2017), 3D models from structure-from-motion photogrammetry using MSL images: Methods and implications. Proc. 48th LPSC, The Woodlands, TX, USA, LPI Contribution No. 1964, id. 1787.

[4] Caravaca, G. et al. (2020), Planet. Space Sci., 182, 104808, DOI: 10.1016/j.pss.2019.104808

[5] Le Mouélic, S. et al. (2020), Remote Sensing, 12 (11), DOI: 10.3390/rs12111900

[6] Nesbit, P. R. et al. (2020), GSA Today, 30, 4–10, DOI: 10.1130/GSATG425A.1

How to cite: Le Mouélic, S., Caravaca, G., Mangold, N., Wright, J., Carli, C., Altieri, F., Zambon, F., Van Der Bogert, C., Pozzobon, R., Massironi, M., Pio Rossi, A., and De Toffoli, B.: Using Virtual and Augmented Reality in Planetary Imaging and Mapping – a Case Study, Europlanet Science Congress 2020, online, 21 Sep–9 Oct 2020, EPSC2020-589, https://doi.org/10.5194/epsc2020-589, 2020.