- 1 JOANNEUM RESEARCH Forschungsgesellschaft mbH, DIGITAL - IVA, Graz, Austria (gerhard.paar@joanneum.at)

- 2 VRVis GmbH, Vienna, Austria (traxler@vrvis.at)

- 3 Imperial College London, London, United Kingdom (sanjeev.gupta@imperial.ac.uk)

- 4 Arizona State University, Tempe, Arizona, USA (jim.bell@asu.edu)

Introduction

Planetary rover camera stereoscopy has evolved over decades to serve engineering and science tasks ranging from on-board navigation, through mission and instrument planning, to science operations and exploitation based on 3D data [1].

Mastcam-Z [2] is a zoomable multispectral stereoscopic camera on the Mars 2020 Perseverance rover. Its stereo range useful for geologic spatial investigations extends from very close range to a few tens of meters, with a range error that increases quadratically with distance [3]. Whereas small deviations of the geometric camera calibration from “truth” result in a predictable and correctable systematic error, the stereo processing itself introduces 3D range noise that grows in this quadratic form.
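The quadratic growth follows from the standard first-order error model of a rectified stereo pair, where range is Z = f·b/d and a disparity error δd therefore maps to a range error of roughly Z²/(f·b)·δd. The short sketch below evaluates this relation. The baseline, focal length and disparity noise are illustrative placeholders chosen for this example, not Mastcam-Z calibration values.

```python
def stereo_range_error(z_m, baseline_m, focal_px, disparity_error_px):
    """First-order range error of a rectified stereo pair.

    From Z = f * b / d it follows that dZ ~= Z**2 / (f * b) * dd,
    so the error grows with the square of the range.
    """
    return z_m ** 2 / (baseline_m * focal_px) * disparity_error_px


# Illustrative placeholder values (assumptions, NOT instrument calibration data)
BASELINE_M = 0.25          # stereo baseline in meters
FOCAL_PX = 5000.0          # focal length expressed in pixels
DISPARITY_ERROR_PX = 0.25  # matching noise in pixels

for z in (2.0, 5.0, 10.0, 20.0, 40.0):
    err = stereo_range_error(z, BASELINE_M, FOCAL_PX, DISPARITY_ERROR_PX)
    print(f"range {z:5.1f} m -> expected range error {err * 100:7.2f} cm")
```

Doubling the range quadruples the expected error, which is why stereo products degrade quickly beyond a few tens of meters.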

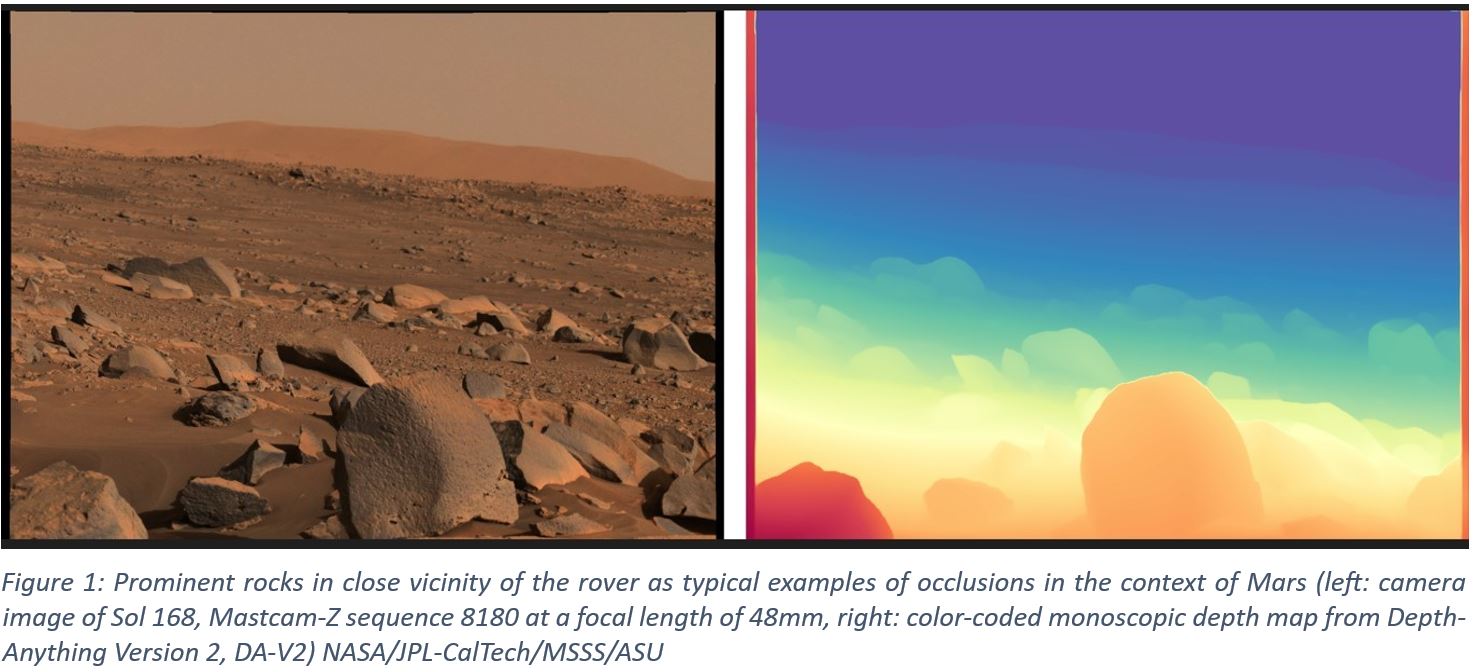

Recently available AI methods offer promising solutions for determining monoscopic depth (i.e. depth from single images at pixel resolution) [4] for a wide range of applications. Such range maps lack true scale, as no artificial objects of known size can be expected on planetary surfaces. Yet pixel-resolution occlusion boundaries and micro-shape are well represented, as shown in Figure 1. This inspired the combination of monoscopic AI-based range determination with the true scale available from calibrated stereoscopy.

AI-Based Enhancement of Stereoscopic Range Products

Monocular depth models such as Depth Anything V2 (DA-V2, a deep learning-based system for estimating depth from a single camera image [4]) have emerged as powerful tools in image simulation, segmentation and scene understanding, but they do not provide exact scale. The relative depth estimates they produce must be converted into physically meaningful metric depth values. Calibrated stereo camera configurations such as Mastcam-Z provide true-scale depth maps. The two sources are fused by establishing local correspondences between the two depth maps and fitting range transformation functions that convert pixel values from the monocular (unscaled) domain into the stereo (true-scale) domain. These transforms are calculated and applied locally, either per pixel or per block of pixels, to account for spatial variations in the relationship between the two depth sources. Edge-aware processing takes depth discontinuities at occlusions (such as rocks or boulders) into account when selecting the search areas for which transformations are calculated. The result is a scaled depth map that combines the high-frequency detail and spatial completeness of DA-V2 with the geometric accuracy of stereo photogrammetry, yielding detailed yet quantitatively accurate depth maps suitable for scientific analysis and engineering applications such as planetary rover navigation and terrain modeling.

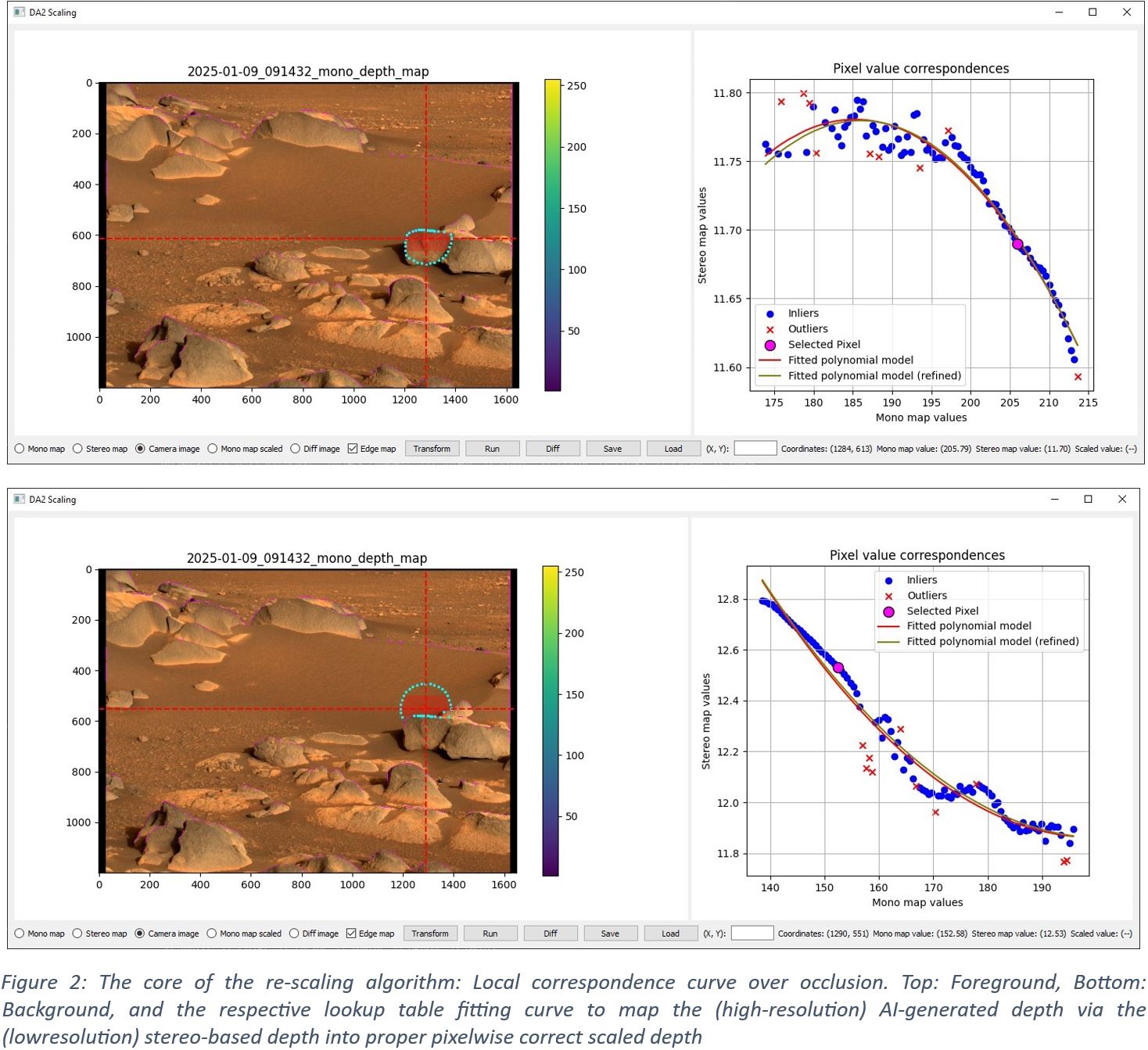

The basic operation of the algorithm is as follows:

- Detect occlusion edges in mono depth map to form a binary "edge map"

- Iterate over mono depth map in blocks of configurable size

- For each mono depth map pixel, create a circular search area using the pixel as its center, taking into account occlusion edges

- Calculate correspondences between mono and stereo map depth values within the search area (Figure 2)

- Fit function to correspondence data using quadratic, polynomial, exponential, or linear approximation

- Transform mono map values in current pixel block to final product using fitted function.
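The steps above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the PRoViP implementation: the function names, the gradient-based edge detector, the default parameters and the global fallback fit are all hypothetical choices made for this example, and only a polynomial fit (linear by default) is shown. Edge awareness is reduced to masking out edge pixels, whereas a fuller version would restrict each search area to the region connected to its center.

```python
import numpy as np


def detect_occlusion_edges(mono, rel_jump=0.1):
    """Binary edge map: True where mono depth changes by more than rel_jump (relative)."""
    gy, gx = np.gradient(mono)
    return np.hypot(gx, gy) > rel_jump * np.maximum(np.abs(mono), 1e-9)


def rescale_mono_depth(mono, stereo, block=8, radius=12, degree=1, min_pairs=20):
    """Convert unscaled mono depth to metric depth with locally fitted polynomials.

    mono   : 2D array of relative depth values (unscaled)
    stereo : 2D array of metric depth, NaN where stereo matching failed
    """
    h, w = mono.shape
    edges = detect_occlusion_edges(mono)
    valid = np.isfinite(stereo) & ~edges
    # Global fit, used wherever a local search area has too few correspondences
    fallback = np.polyfit(mono[valid], stereo[valid], degree)
    out = np.empty((h, w), dtype=float)
    for y0 in range(0, h, block):
        for x0 in range(0, w, block):
            y1, x1 = min(y0 + block, h), min(x0 + block, w)
            cy, cx = (y0 + y1) // 2, (x0 + x1) // 2
            # Window that bounds the circular search area around the block center
            wy0, wy1 = max(cy - radius, 0), min(cy + radius + 1, h)
            wx0, wx1 = max(cx - radius, 0), min(cx + radius + 1, w)
            yy, xx = np.mgrid[wy0:wy1, wx0:wx1]
            inside = (yy - cy) ** 2 + (xx - cx) ** 2 <= radius ** 2
            area = inside & valid[wy0:wy1, wx0:wx1]
            # Correspondences: mono and stereo values at the same pixels
            m = mono[wy0:wy1, wx0:wx1][area]
            s = stereo[wy0:wy1, wx0:wx1][area]
            if m.size >= min_pairs and m.max() > m.min():
                coeffs = np.polyfit(m, s, degree)
            else:
                coeffs = fallback
            # Apply the fitted function to every mono pixel of the current block
            out[y0:y1, x0:x1] = np.polyval(coeffs, mono[y0:y1, x0:x1])
    return out
```

Because the transform is evaluated on the mono map, the output is also defined where the stereo map has gaps, which is where the spatial completeness of the monocular estimate pays off.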

Several enhancements are implemented – most notably a two-step function fitting procedure and per-pixel interpolation of transformations based on spatial distance – as well as more fine-grained options to control the behavior of the algorithm. A prototype version of this scaled DA-V2 algorithm has been integrated into the nominal Mastcam-Z 3D PRoViP vision processing [6].
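The per-pixel interpolation of transformations can be illustrated as follows. The abstract does not specify the weighting scheme, so this sketch assumes inverse-distance weighting of polynomial transforms attached to block centers; the function name and parameters are hypothetical. Blending the transformed values is equivalent to blending the polynomial coefficients, since the output is linear in them.

```python
import numpy as np


def blend_block_transforms(mono, centres, coeffs, power=2.0, eps=1e-6):
    """Blend per-block transforms at every pixel by inverse spatial distance.

    mono    : 2D array of unscaled mono depth
    centres : sequence of (row, col) block centers
    coeffs  : sequence of polynomial coefficients (np.polyval order), one per center
    """
    h, w = mono.shape
    yy, xx = np.mgrid[0:h, 0:w]
    weighted_sum = np.zeros((h, w))
    weight_total = np.zeros((h, w))
    for (cy, cx), c in zip(centres, coeffs):
        dist2 = (yy - cy) ** 2 + (xx - cx) ** 2
        # Assumed weighting: 1 / distance**power, with eps avoiding division by zero
        weight = 1.0 / (dist2 ** (power / 2.0) + eps)
        weighted_sum += weight * np.polyval(c, mono)
        weight_total += weight
    return weighted_sum / weight_total
```

Compared with applying one transform per block, this kind of blending avoids visible seams at block borders, at the cost of evaluating every transform for every pixel in this naive form.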

Qualitative Results

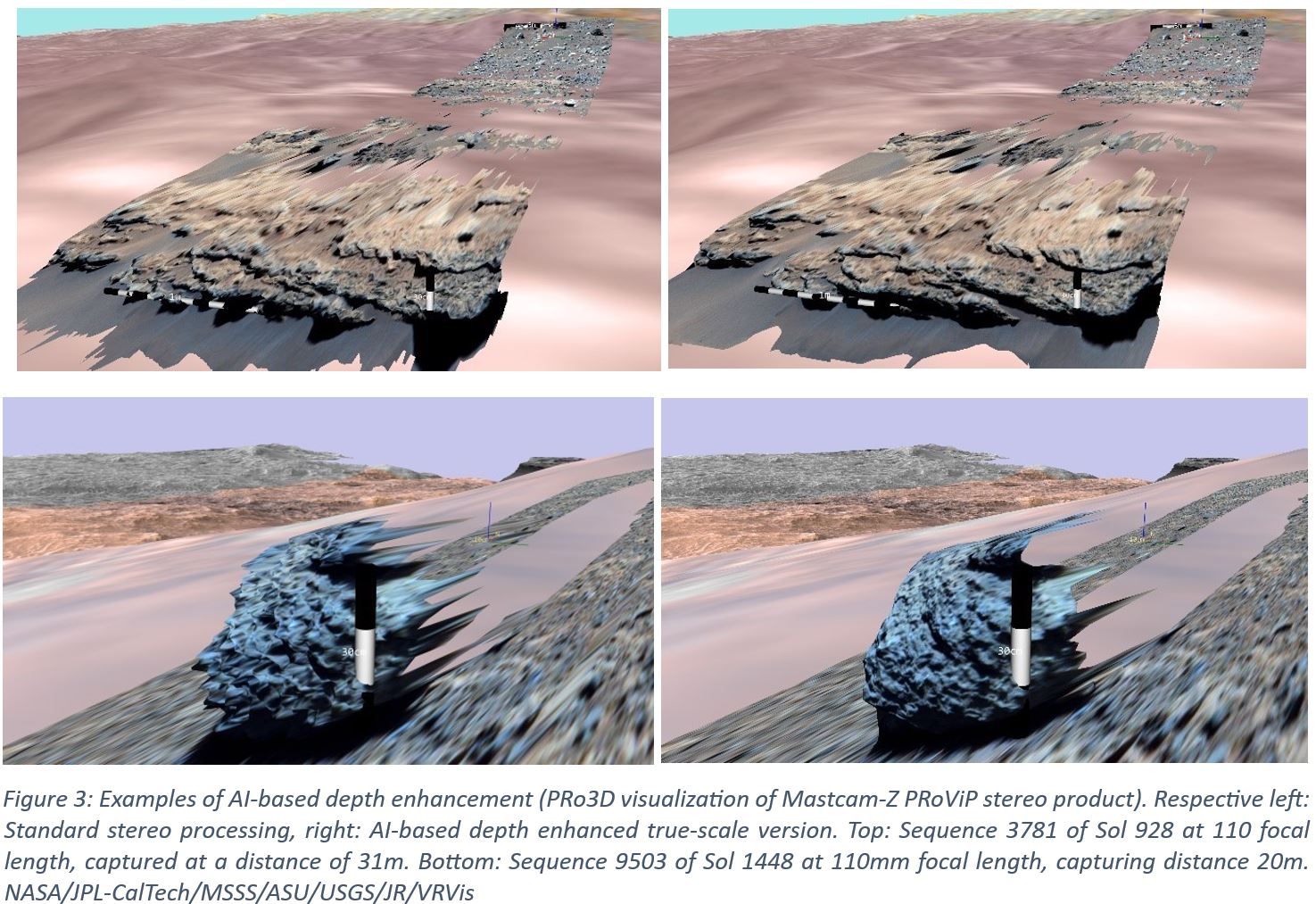

A series of preliminary tests indicates highly promising performance, with a substantial extension of the usable range of nominal fixed-baseline stereoscopic imaging instruments, in particular for the planetary science case. The examples (see Figure 3) indicate that a range increase by a factor of at least 2 to 5 can be expected from the described approach.

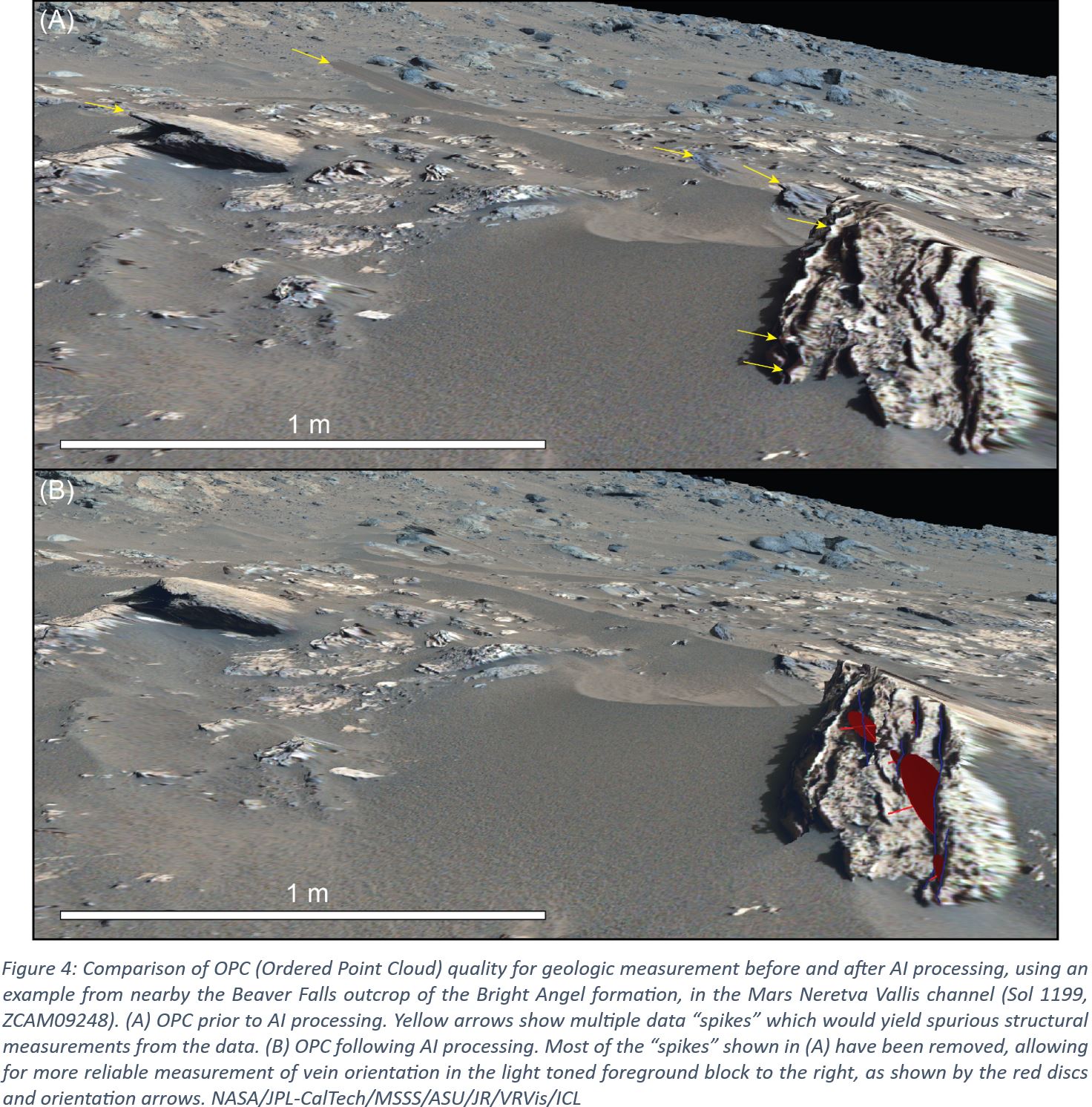

Comparing the original (conventional) stereo-derived 3D result with the AI-enhanced version for a typical use case (Figure 4) yields the following preliminary assessment:

- Prior to AI processing, the data from this area show several “spikes” which preclude the acquisition of reliable structural measurements (e.g. strike/dip of veins in the foreground block). In the case shown (at 34 mm focal length), this occurs at distances of just ~6–8 m from Mastcam-Z.

- Following AI enhancement, these “spikes” are removed, allowing more reliable measurements of Ca-sulfate vein orientations.
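To make clear why spikes matter for such measurements, the sketch below derives strike and dip from a least-squares plane fitted to 3D points picked on a surface. It is a generic illustration, not the measurement tool used by the authors. It assumes coordinates given as (East, North, Up); the function name is hypothetical, and strike follows the right-hand rule (dip direction is 90° clockwise from strike).

```python
import numpy as np


def strike_dip_from_points(points_enu):
    """Fit a plane to 3D points (East, North, Up) and return (strike, dip, dip_direction) in degrees."""
    pts = np.asarray(points_enu, dtype=float)
    centred = pts - pts.mean(axis=0)
    # The plane normal is the direction of least variance of the centered points
    _, _, vt = np.linalg.svd(centred, full_matrices=False)
    normal = vt[-1]
    if normal[2] < 0:
        normal = -normal  # make the normal point upward
    dip = np.degrees(np.arccos(np.clip(normal[2], -1.0, 1.0)))
    # The horizontal part of the upward normal points in the down-dip direction;
    # azimuth is measured clockwise from North (undefined for a horizontal plane)
    dip_direction = np.degrees(np.arctan2(normal[0], normal[1])) % 360.0
    strike = (dip_direction - 90.0) % 360.0
    return strike, dip, dip_direction
```

A single spike among the picked points tilts the fitted plane, so range noise of the kind described above translates directly into orientation errors.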

Towards Validation of AI-Based Stereo Enhancement

An intuitive way to compare surfaces qualitatively is to toggle between them. This allows structural deviations to be studied from different viewpoints. Switching to wireframe mode can further enhance the perception of topographic differences.

A series of quantitative validation approaches is under development. These include:

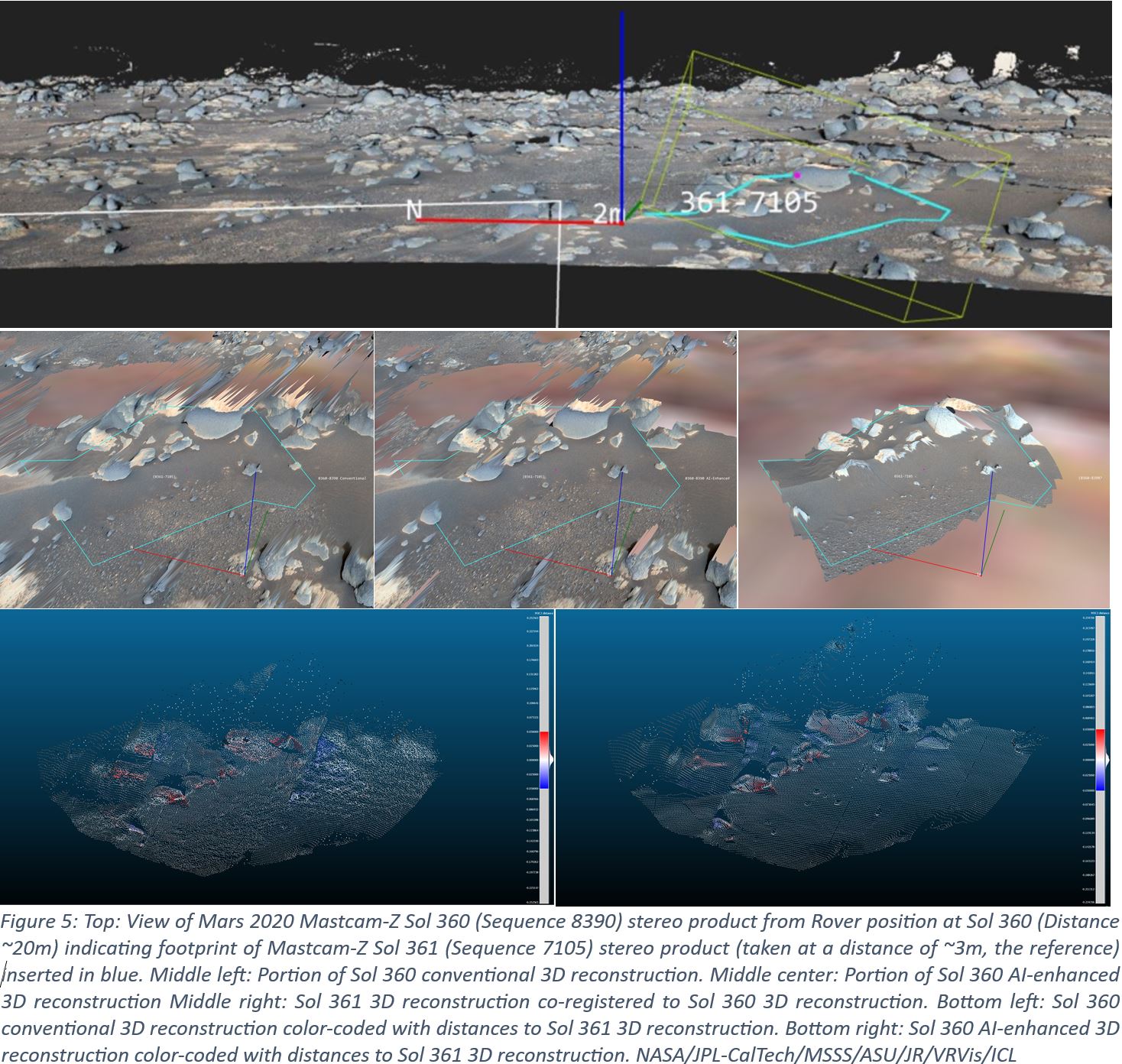

- 3D-comparison between AI-enhanced surface reconstruction using stereo pairs viewed from medium distance, with “conventional” stereo reconstructions of the same scene viewed from close-range (including co-registration to minimize systematic errors from localization and camera calibration) – see Figure 5, top and middle

- Color-coding the reference surface according to its distance to the other surface, measured between surface points along the up-vector or along the surface normals (Figure 5, bottom)

- 3D-comparison of AI-enhanced surface reconstructions from images taken with the same imaging geometry under different illumination conditions

- Independent geologic analysis on AI-enhanced and conventional Digital Outcrop Models (DOMs) and results comparison of, e.g., dip-and-strike measurements

- Analysis of shadow shapes in relation to the obtained object outlines.
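The distance-based comparison in the list above can be sketched for the simple case where both surfaces are resampled onto the same co-registered height grid. This is an assumption made for the example, since the actual products are 3D meshes; the function names are hypothetical. The normal-direction variant uses a point-to-plane approximation that holds when the two surfaces are locally close to parallel.

```python
import numpy as np


def surface_deviation(ref_z, test_z, cell_size, along="up"):
    """Per-cell deviation of test_z from ref_z, along the up-vector or the reference normal.

    ref_z, test_z : 2D height grids on the same co-registered raster (meters)
    cell_size     : grid spacing in meters
    """
    dz = test_z - ref_z
    if along == "up":
        return dz
    # Reference surface slope; the normal-direction distance is dz * cos(slope)
    gy, gx = np.gradient(ref_z, cell_size)
    cos_slope = 1.0 / np.sqrt(1.0 + gx ** 2 + gy ** 2)
    return dz * cos_slope


def deviation_stats(dev):
    """Summary statistics over all finite deviation values."""
    d = dev[np.isfinite(dev)]
    return {
        "mean": float(d.mean()),
        "rms": float(np.sqrt((d ** 2).mean())),
        "p95_abs": float(np.percentile(np.abs(d), 95)),
    }
```

The deviation grid can be passed to any color map to produce the color-coded view, while the statistics give a compact number to track across test scenes.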

Outlook

A statistically significant validation of the approach is currently under development and is planned to be completed before the joint EPSC/DPS Conference 2025.

A planned improvement of the visual comparison between surfaces is to show color-coded deviation vectors between vertices. This works best in wireframe mode or with semi-transparent surfaces. Furthermore, it should be possible to query numerical deviation values by clicking on a surface point.

Acknowledgement

This work was funded by the ASAP Project AI-Mars-3D (FFG-911920). We thank Komyo Furuya of JR for operational end-to-end implementation and documentation of the re-scaling algorithm.

References

How to cite: Paar, G., Traxler, C., Jones, A., Ortner, T., Bell, J., Gupta, S., and Barnes, R.: AI-Based Extension of Rover Camera Stereo Range – Starting Validation on Mars 2020 Mastcam-Z Geologic Use Cases, EPSC-DPS Joint Meeting 2025, Helsinki, Finland, 7–12 Sep 2025, EPSC-DPS2025-387, https://doi.org/10.5194/epsc-dps2025-387, 2025.