- University of Chile, FCFM, Department of Astronomy, Chile (npandey@das.uchile.cl)

The trans-Neptunian region offers a critical window into the early stages of our solar system’s formation, because it preserves remnants of the planetesimals from which the planets formed. The Deep Ecliptic Exploration Project (DEEP), a multi-year survey using the Dark Energy Camera (DECam) on the 4-meter Blanco telescope at the Cerro Tololo Inter-American Observatory (CTIO), has been instrumental in characterizing the faint trans-Neptunian object (TNO) population. The project has determined the size and shape distributions of TNOs, studied their physical properties in relation to dynamical class and size, and tracked objects over multiple years to constrain their orbits. Using a shift-and-stack moving-object detection algorithm, the DEEP survey detected over 110 new objects. Although successful, this method relies on a computationally expensive search over assumed velocities and on traditional image stacking. In this work, we introduce an AI-based moving-object detection method that provides a faster, more efficient, and more robust alternative to these traditional techniques.

We introduce a new approach for detecting moving objects in astronomical images, called You Only Stack Once (YOSO). The method simplifies traditional moving-object detection by removing the need to assume a velocity vector for each object. Instead of shifting individual images along a presumed motion before stacking, YOSO stacks a time series of images once, with no directional shifts. As a result, moving sources appear as linear or slightly curved trails, depending on their apparent motion during the observation. These trails are then identified by a machine learning model trained to recognize their distinctive shape and intensity profiles. This approach allows fast, reliable detection of a wide range of moving objects, from fast Near-Earth Objects (NEOs) to the slower, fainter bodies in the Kuiper Belt.
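The effect of stacking without shifts can be illustrated with a minimal sketch. It assumes a toy point source moving at a constant rate over unit-variance noise and uses a plain pixel-wise mean as the combiner; the function names, rates, flux, and threshold are hypothetical choices for illustration, not values from the YOSO pipeline.

```python
import numpy as np

def simulate_frames(n_frames=20, size=64, rate=(1.5, 0.5), flux=50.0, seed=0):
    """Unit-variance noise frames with one point source moving at
    `rate` = (x, y) pixels per frame. All values are illustrative."""
    rng = np.random.default_rng(seed)
    frames = rng.normal(0.0, 1.0, size=(n_frames, size, size))
    for t in range(n_frames):
        x = int(round(10 + rate[0] * t))
        y = int(round(20 + rate[1] * t))
        frames[t, y, x] += flux
    return frames

def stack_unshifted(frames):
    """Pixel-wise mean over time, with no per-frame shift applied."""
    return frames.mean(axis=0)

frames = simulate_frames()
stacked = stack_unshifted(frames)

# The source adds flux / n_frames = 2.5 to each pixel it visits, while the
# stacked noise has standard deviation 1 / sqrt(20), about 0.22.
trail_mask = stacked > 1.5
ys, xs = np.nonzero(trail_mask)
print("trail pixels:", int(trail_mask.sum()))
print("x-span:", xs.max() - xs.min(), "y-span:", ys.max() - ys.min())
```

Because no velocity is assumed, the same stack would show trails for sources moving at any rate or direction; only the trail length and orientation change.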

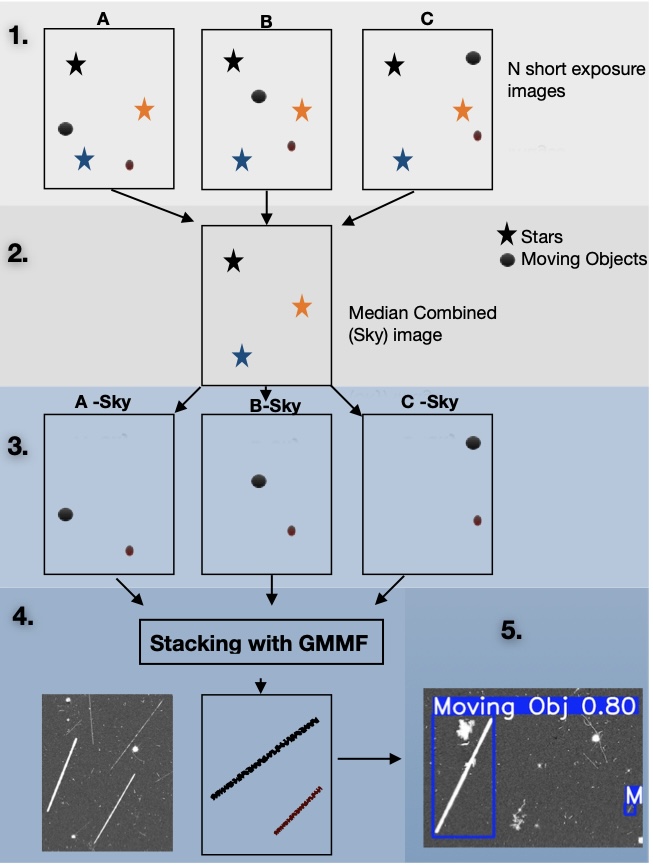

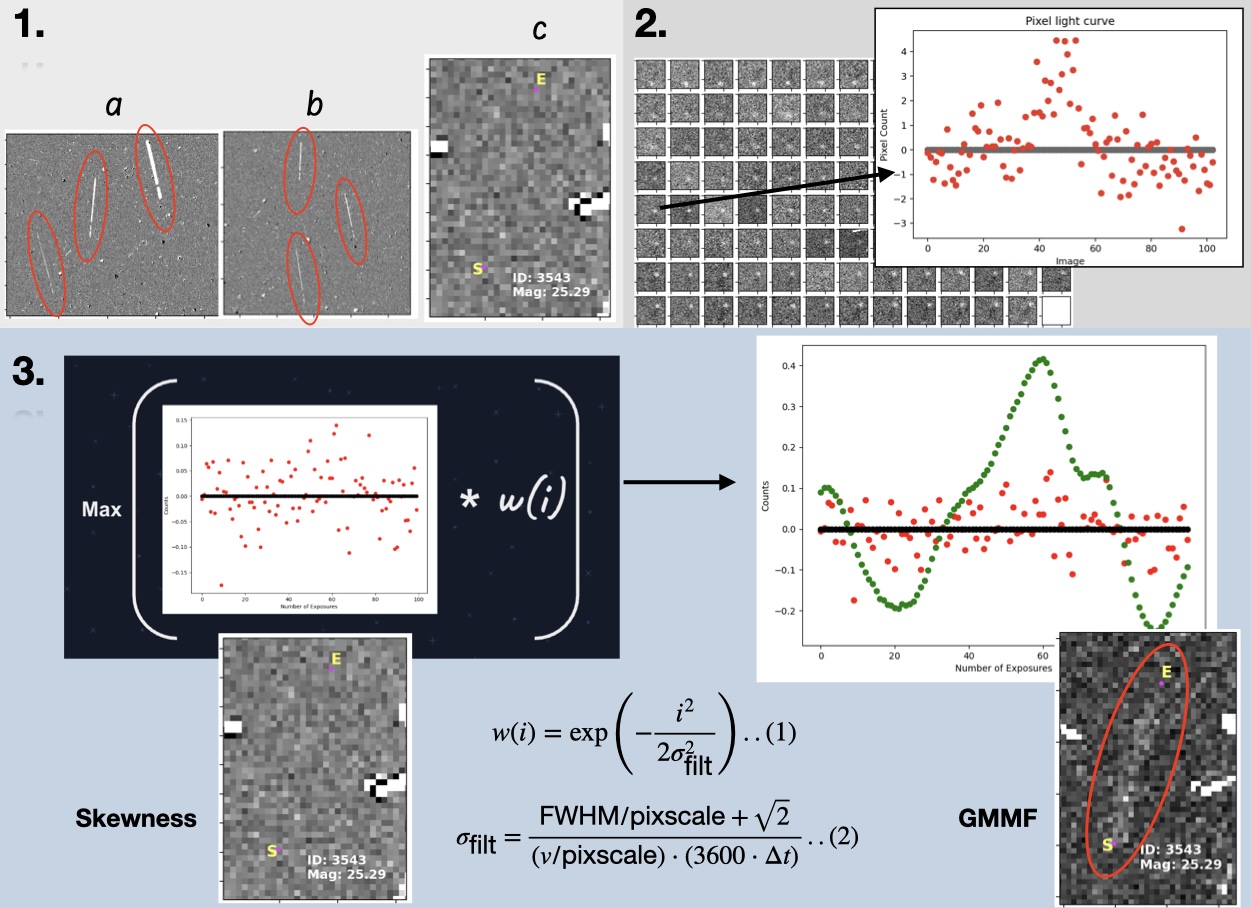

YOSO starts with a series of short-exposure images of the same sky region, typically acquired over the course of a night. After standard preprocessing (see Steps 2 and 3 in Figure 1), the images are stacked to boost the signal of any moving sources. In early versions of the pipeline, we combined the frames using pixel-wise statistical measures such as the mean, skewness, and kurtosis, but these proved limited in their ability to enhance faint sources. To improve sensitivity, we developed the Gaussian Motion Matched Filter (GMMF), a new statistic tailored to detect the footprint that a moving object leaves on a single pixel. Unlike conventional Gaussian smoothing, GMMF applies a Gaussian-weighted convolution along the temporal axis of each pixel stack, matching the expected motion profile of moving sources (see Section 2 of Figure 2). GMMF can reliably detect sources with signal-to-noise ratios as low as 0.5, without a brute-force search over velocity space. The details of the filter are shown in Figure 2.
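The idea of a Gaussian-weighted convolution along the temporal axis can be sketched as follows. This is one possible reading of the description above, not the author's implementation: it assumes a background-subtracted cube with unit noise, a single hypothetical temporal width `sigma_t` (in frames) for the time a source takes to cross a pixel, and a maximum over time as the final per-pixel statistic. The function name `gmmf_sketch` is invented for illustration.

```python
import numpy as np

def gmmf_sketch(cube, sigma_t):
    """Temporal Gaussian matched filter, reduced to one value per pixel.

    cube    : array of shape (n_frames, ny, nx), background-subtracted,
              assumed to have unit noise per sample.
    sigma_t : assumed Gaussian width (in frames) of a source crossing a pixel.
    Returns an (ny, nx) map of the peak filter response over time.
    """
    half = int(np.ceil(4 * sigma_t))
    offsets = np.arange(-half, half + 1)
    kernel = np.exp(-0.5 * (offsets / sigma_t) ** 2)
    # Normalize so that pure unit-variance noise stays unit-variance.
    kernel /= np.sqrt(np.sum(kernel ** 2))
    if kernel.size > cube.shape[0]:
        raise ValueError("kernel is longer than the time series")
    # Convolve every pixel's time series with the Gaussian kernel.
    filtered = np.apply_along_axis(
        lambda ts: np.convolve(ts, kernel, mode="same"), 0, cube
    )
    # Keep the strongest response along the time axis for each pixel.
    return filtered.max(axis=0)

# Small demonstration on simulated noise with one transient pixel signal.
rng = np.random.default_rng(1)
demo = rng.normal(0.0, 1.0, size=(103, 16, 16))
t = np.arange(103)
demo[:, 5, 7] += 3.0 * np.exp(-0.5 * ((t - 40) / 3.0) ** 2)
response = gmmf_sketch(demo, sigma_t=3.0)
print("peak response at (y, x):", np.unravel_index(response.argmax(), response.shape))
```

With this normalization the filter gain for a matching Gaussian signal is the square root of the summed squared kernel weights, which is why a signal that is weak in every individual frame can still stand out in the filtered map.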

Once potential trails have been generated, we use a deep learning model to identify and classify them. Our detection network is based on YOLOv8L, a high-performing convolutional neural network (CNN) architecture that has been pretrained on a broad set of visual data. YOLOv8L is well suited to this task because it generalizes effectively even with limited domain-specific training data, learns quickly, and handles noisy or low-contrast features robustly. For our use case, we retrained the model on a synthetic dataset designed to mimic real astronomical trails, including low signal-to-noise ratio (SNR) signals, linear trajectories, and varying brightness levels.
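A synthetic training sample of the kind described above could be generated along these lines. The sketch assumes a straight trail with a Gaussian cross-profile on Gaussian noise and writes the label in the normalized `class x_center y_center width height` form used by YOLO-style detectors; the function name, image size, padding, and default values are hypothetical and not taken from the actual training set.

```python
import numpy as np

def synth_trail(size=128, length=30.0, angle_deg=25.0, peak=3.0,
                psf_sigma=1.5, noise=1.0, seed=0):
    """Return (image, label) for one synthetic linear trail.

    label = (class_id, x_center, y_center, width, height), with the box
    normalized to the image size. All defaults are illustrative.
    """
    rng = np.random.default_rng(seed)
    img = rng.normal(0.0, noise, size=(size, size))
    cx = cy = size / 2.0                      # trail centre (arbitrary choice)
    theta = np.deg2rad(angle_deg)
    ux, uy = np.cos(theta), np.sin(theta)     # unit vector along the trail
    yy, xx = np.mgrid[0:size, 0:size]
    dx, dy = xx - cx, yy - cy
    along = dx * ux + dy * uy                 # coordinate along the trail
    across = -dx * uy + dy * ux               # coordinate across the trail
    # Distance past the segment ends (zero for pixels beside the segment).
    excess = np.clip(np.abs(along) - length / 2.0, 0.0, None)
    dist2 = across ** 2 + excess ** 2
    img += peak * np.exp(-0.5 * dist2 / psf_sigma ** 2)
    # Axis-aligned box around the segment, padded by 3 PSF sigma per side.
    pad = 3.0 * psf_sigma
    box_w = abs(ux) * length + 2 * pad
    box_h = abs(uy) * length + 2 * pad
    label = (0, cx / size, cy / size, box_w / size, box_h / size)
    return img, label

image, label = synth_trail()
print("label:", " ".join(f"{v:.4f}" if i else str(v) for i, v in enumerate(label)))
```

Varying `peak`, `length`, and `angle_deg` across samples would cover the range of brightness levels and trajectories mentioned above.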

YOSO is a highly adaptable framework designed to optimize the detection of moving objects across a broad spectrum of telescopes, observational datasets, and populations, from Kuiper Belt Objects (KBOs) to fast-moving Near-Earth Objects (NEOs). Unlike shift-and-stack techniques that produce compact point sources, often vulnerable to false positives from random noise or disjointed detections due to tiling strategies, YOSO leverages the naturally occurring, spatially correlated trails left by moving sources. These extended structures are particularly well-suited for detection via machine learning models, which excel at identifying such coherent patterns.

A key advantage of YOSO is its ability to significantly suppress false positives. The deep learning model is trained to recognize elongated, linear features, enabling it to discriminate real object trails from stochastic noise or unrelated pixel artifacts. Whereas traditional surveys often require stringent signal-to-noise thresholds (e.g., above 5σ) to maintain reliability, YOSO is capable of operating at lower thresholds down to 4σ by combining statistical image stacking with deep learning-based confidence assessments. Once a candidate trail is identified, the model outputs a bounding box around the detection (as illustrated in Step 5 of Figure 1), which can then be used to extract the trail and estimate its apparent motion and direction.
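One way to turn a bounding-box cutout into an apparent motion estimate is to use intensity-weighted second moments, as in the sketch below. This is an assumed approach for illustration, not necessarily the one used in YOSO. It models the trail as a uniform segment blurred by a round PSF, so the variance along the trail is roughly L²/12 plus the PSF variance, and the variance across it is the PSF variance. The default pixel scale of 0.263 arcsec per pixel is an assumed DECam-like value, and `trail_motion` is a hypothetical name.

```python
import numpy as np

def trail_motion(cutout, duration_hr, pixel_scale=0.263, threshold=0.0):
    """Estimate rate (arcsec/hr), position angle (deg, 0-180, measured from
    the +x axis toward the +y axis in array coordinates), and trail length
    (pixels) from a background-subtracted cutout."""
    w = np.clip(cutout - threshold, 0.0, None)   # non-negative weights
    total = w.sum()
    if total <= 0:
        raise ValueError("no flux above threshold in cutout")
    yy, xx = np.mgrid[0:cutout.shape[0], 0:cutout.shape[1]]
    mx, my = (w * xx).sum() / total, (w * yy).sum() / total
    cxx = (w * (xx - mx) ** 2).sum() / total
    cyy = (w * (yy - my) ** 2).sum() / total
    cxy = (w * (xx - mx) * (yy - my)).sum() / total
    # eigh returns eigenvalues in ascending order: [minor, major].
    evals, evecs = np.linalg.eigh(np.array([[cxx, cxy], [cxy, cyy]]))
    major = evecs[:, 1]
    angle = np.degrees(np.arctan2(major[1], major[0])) % 180.0
    # Subtract the across-trail (PSF) variance, then invert var = L**2 / 12.
    length_pix = np.sqrt(12.0 * max(evals[1] - evals[0], 0.0))
    rate = length_pix * pixel_scale / duration_hr
    return rate, angle, length_pix
```

A static stack fixes the orientation of the motion only up to 180 degrees, since it does not record which end of the trail came first; resolving the sense of motion would need timing information from the individual frames.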

The primary goal of this method is to achieve near real-time detection of moving objects as observational data becomes available. This presentation will outline the core methodologies behind the YOSO framework and highlight key challenges encountered during its development, particularly emphasizing the role of the trail-based search in enabling fast and effective detection of trans-Neptunian objects. I will also discuss its potential application to LSST deep drilling fields and other wide-field surveys, where it may significantly improve the discovery rate of Solar System objects, especially those with sky motions that are not well-suited to traditional deep drilling strategies.

Figure 1: Illustration of the YOSO pipeline. The process has been applied to 103 time-series observations from a Kuiper Belt Object search (120 s exposure per image).

Figure 2: Schematic of the Gaussian Motion Matched Filter (GMMF) process, applied to 103 time-series observations from a Kuiper Belt Object search (120 s exposure per image).

How to cite: Pandey, N.: You Only Stack Once (YOSO): Fast TNO Detection via Gaussian Motion Matched Filtering and Deep Learning, EPSC-DPS Joint Meeting 2025, Helsinki, Finland, 7–12 Sep 2025, EPSC-DPS2025-961, https://doi.org/10.5194/epsc-dps2025-961, 2025.