- Université Lyon 1, LGL-TPE, Villeurbanne, France (matthieu.volat@univ-lyon1.fr)

Introduction

Operating aboard NASA/Jet Propulsion Laboratory's Mars Reconnaissance Orbiter since 2006, the HiRISE (High-Resolution Imaging Science Experiment) camera [1] has provided the highest-resolution orbital imagery of Mars (up to 25 cm/pixel) for nearly two decades. Its CCDs use three different color filters. The RED filter covers 570-830 nm, while the blue-green (BG) and near-infrared (IR) filters cover wavelengths below 580 nm and above 790 nm, respectively. Color imagery provides valuable information for building geological maps, as in Mandon et al. [2].

The RED CCDs cover the full image swath, but the "color" CCDs (BG, IR) cover only the central 20% of the RED swath. This limitation, compounded by the already low global coverage of the HiRISE dataset, leaves planetary scientists with very limited color data.

Because the RED and color datasets overlap, they provide a strong set of training targets for a deep neural network (DNN) that extrapolates the NIR and BG channels, yielding an extended color dataset. While various works have applied such algorithms to image colorization, we are not aware of previous work targeting Martian orbital data.

Architecture

We use a straightforward U-Net model, an architecture derived from autoencoders. An autoencoder composes an encoding function, which converts its input into a different (coded) representation, with a decoding function, which transforms the coded data back into the original representation. For imagery, the encoder usually takes the form of layers of convolutional filters, and the decoder of transposed convolutional filters. As defined by Ronneberger et al. [3], the U-Net architecture concatenates the output of each encoding layer with the result of the corresponding decoding layer (skip connections) to guide the decoding.
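
The data flow described above can be sketched with NumPy. This is a toy, untrained forward pass, not the model used in this study. The hypothetical `conv1x1` (random 1×1 channel mixing) stands in for learned convolutions, 2×2 average pooling stands in for the encoder's downsampling, and nearest-neighbour upsampling stands in for transposed convolutions. The channel widths (`base=8`, doubling per level) and the final sigmoid are assumptions. Only the skip-connection pattern reflects the U-Net structure.

```python
import numpy as np

rng = np.random.default_rng(0)  # random, untrained weights: illustrates data flow only


def conv1x1(x, out_ch):
    """Hypothetical stand-in for a learned conv layer: random 1x1 channel mixing + ReLU.
    x has shape (channels, height, width)."""
    w = rng.standard_normal((out_ch, x.shape[0])) / np.sqrt(x.shape[0])
    return np.maximum(np.einsum("oc,chw->ohw", w, x), 0.0)


def down(x):
    """2x2 average pooling: halves height and width."""
    c, h, w = x.shape
    return x.reshape(c, h // 2, 2, w // 2, 2).mean(axis=(2, 4))


def up(x):
    """Nearest-neighbour upsampling, standing in for a transposed convolution."""
    return x.repeat(2, axis=1).repeat(2, axis=2)


def toy_unet(x, levels=5, base=8, out_ch=2):
    """x: (1, H, W) RED tile -> (out_ch, H, W), e.g. (BG, NIR). H, W divisible by 2**levels."""
    skips, ch = [], base
    for _ in range(levels):              # encoder
        x = conv1x1(x, ch)
        skips.append(x)                  # kept for the skip connection
        x = down(x)
        ch *= 2
    x = conv1x1(x, ch)                   # bottleneck
    for skip in reversed(skips):         # decoder
        ch //= 2
        x = up(x)
        x = np.concatenate([x, skip], axis=0)  # U-Net skip: concatenate encoder output
        x = conv1x1(x, ch)
    w = rng.standard_normal((out_ch, x.shape[0])) / np.sqrt(x.shape[0])
    z = np.einsum("oc,chw->ohw", w, x)
    return 0.5 * (1.0 + np.tanh(0.5 * z))      # sigmoid: keeps outputs in [0, 1]


out = toy_unet(np.random.default_rng(1).random((1, 256, 256)))
print(out.shape)  # (2, 256, 256)
```

Each decoder level receives both the upsampled coarse features and the encoder features from the same resolution. This concatenation is what distinguishes a U-Net from a plain autoencoder.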

We set the input tile size to 256×256 pixels so that each tile provides a rich feature set, and we use 5 levels of encoding and decoding to match this input size.
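
The abstract does not say how the levels are counted. Assuming that each encoding level ends with a factor-2 downsampling, the arithmetic behind pairing 256×256 tiles with five levels is as follows. `level_sizes` is a hypothetical helper.

```python
def level_sizes(tile=256, levels=5):
    """Spatial size of the feature maps at each U-Net level, assuming every
    encoding level halves the resolution (one extra entry for the bottleneck)."""
    if tile % (2 ** levels):
        raise ValueError("tile size must be divisible by 2**levels")
    return [tile // 2 ** k for k in range(levels + 1)]


print(level_sizes())  # [256, 128, 64, 32, 16, 8]
```

Under this assumption, a 256×256 tile reaches an 8×8 bottleneck. The divisibility check reflects that each pooling step needs an even size.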

One significant difference from previous work concerns the color representation. Colorization methods usually convert RGB data into a perceptual colorspace. We do not, because HiRISE channels do not share the relationships that RGB channels have with each other. Instead, the model takes the RED channel as input, and its targets are the NIR and BG channels of a HiRISE image.
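
The following is a minimal sketch of how one training sample could be assembled under this choice. It assumes co-registered RED, BG and NIR tiles and per-tile min-max normalization to [0, 1]. Both are assumptions, as are the names `minmax` and `make_pair`. No RGB-to-Lab style conversion is applied; the target is simply the stacked BG and NIR channels.

```python
import numpy as np


def minmax(channel):
    """Rescale one channel to [0, 1] (assumed normalization; constant channels map to 0)."""
    channel = channel.astype(np.float64)
    lo, hi = channel.min(), channel.max()
    return np.zeros_like(channel) if hi == lo else (channel - lo) / (hi - lo)


def make_pair(red, bg, nir):
    """Build one (input, target) sample from co-registered (H, W) tiles.
    No perceptual colorspace: input = RED, target = raw (BG, NIR) stack."""
    x = minmax(red)[np.newaxis]              # shape (1, H, W)
    y = np.stack([minmax(bg), minmax(nir)])  # shape (2, H, W)
    return x, y
```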

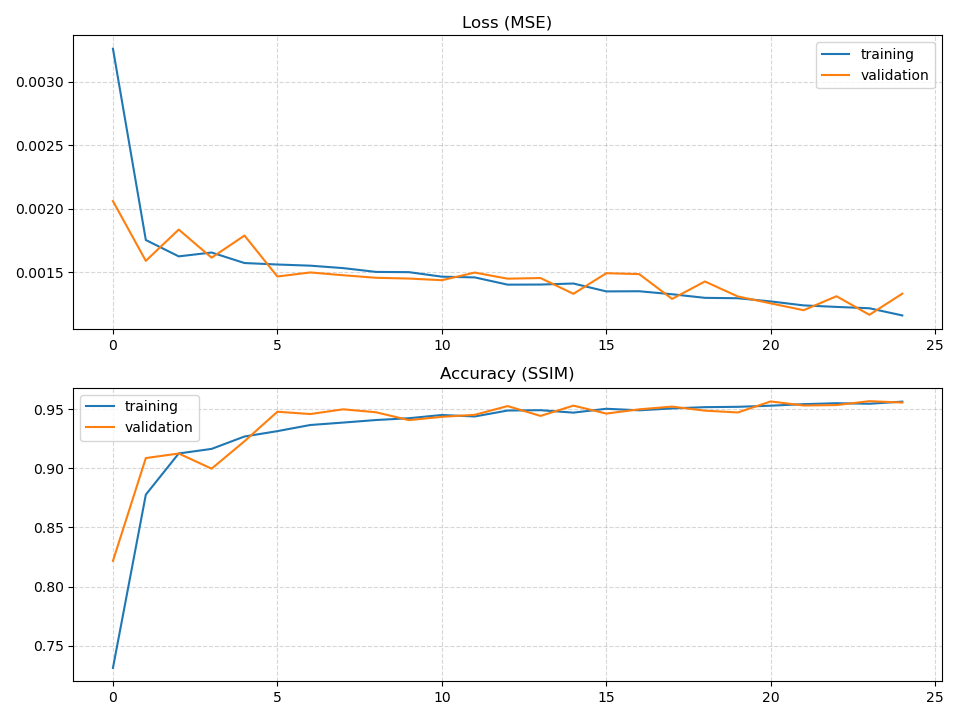

To train this model, we compared three loss functions: the L1 norm, the mean squared error (MSE) and the binary cross-entropy (BCE). When evaluating the structural similarity index (SSIM) at the validation step, we observe very close results, with MSE performing slightly better.
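
The three candidate losses and the validation metric can be written compactly in NumPy. This sketch assumes predictions and targets scaled to [0, 1], which BCE requires. For brevity, it computes SSIM over the whole tile as a single window, whereas standard SSIM averages the same expression over local Gaussian windows. The function names are illustrative.

```python
import numpy as np


def l1_loss(pred, target):
    return np.mean(np.abs(pred - target))


def mse_loss(pred, target):
    return np.mean((pred - target) ** 2)


def bce_loss(pred, target, eps=1e-7):
    # Predictions are clipped away from 0 and 1 to avoid log(0).
    p = np.clip(pred, eps, 1 - eps)
    return -np.mean(target * np.log(p) + (1 - target) * np.log(1 - p))


def global_ssim(a, b, data_range=1.0):
    """Single-window SSIM over the whole tile (simplified; the usual
    definition averages this quantity over local windows)."""
    c1, c2 = (0.01 * data_range) ** 2, (0.03 * data_range) ** 2
    mu_a, mu_b = a.mean(), b.mean()
    var_a, var_b = a.var(), b.var()
    cov = np.mean((a - mu_a) * (b - mu_b))
    return ((2 * mu_a * mu_b + c1) * (2 * cov + c2)) / (
        (mu_a ** 2 + mu_b ** 2 + c1) * (var_a + var_b + c2)
    )
```

Comparing losses then amounts to training one model per loss and averaging SSIM over the validation tiles. Note that BCE with continuous targets is minimized, but not zero, when the prediction equals the target.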

Training dataset

The HiRISE “color” dataset provides the training target we need. In theory, we could randomly select images across the complete catalog, but we found that varying acquisition conditions lead to varying levels of image quality.

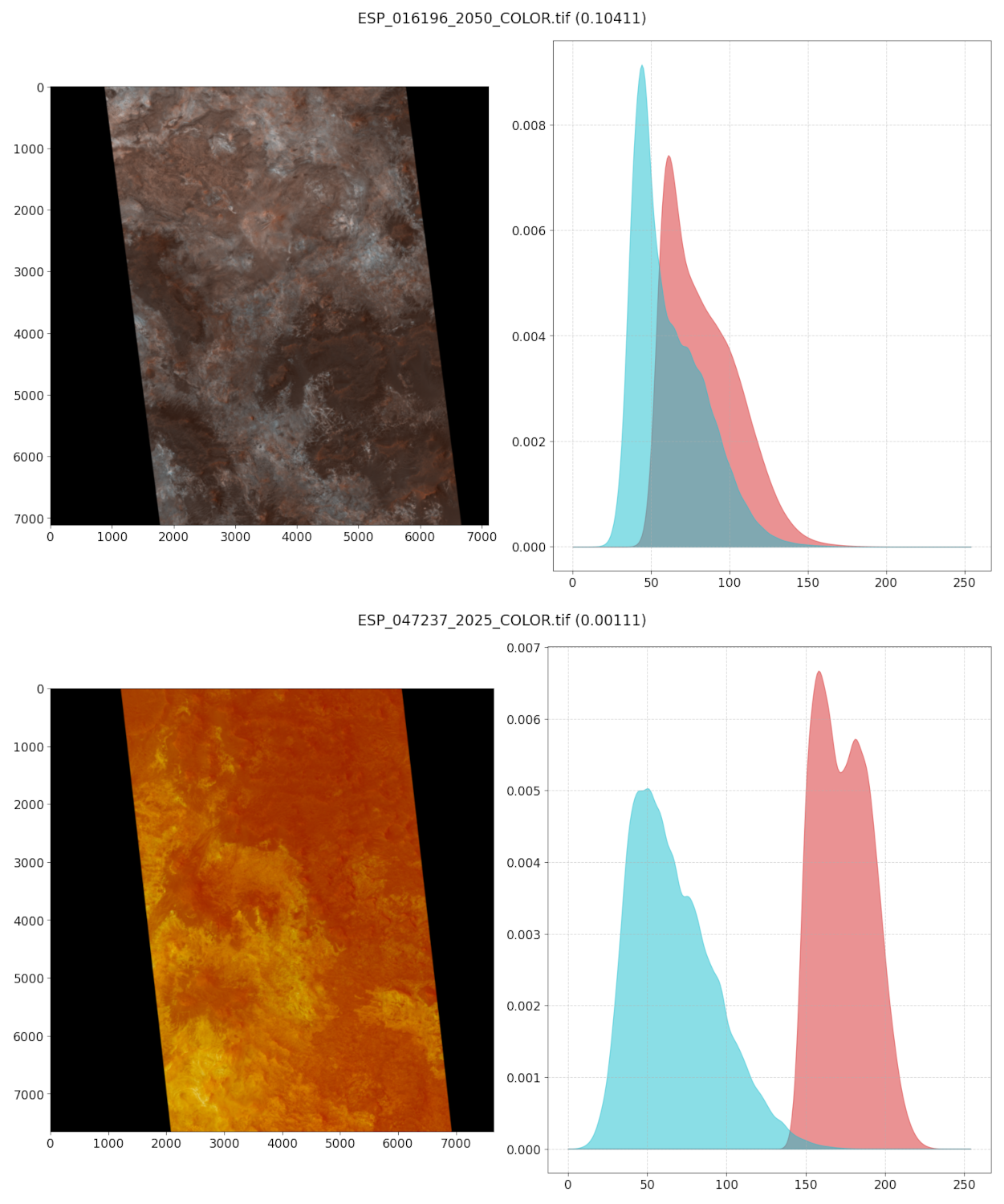

The first step of our training was to establish a criterion for finding “good” reference images. We based it on how well the RED and BG channels are correlated. Both channels are first normalized, and 256-bin histograms are computed. The histogram values are then divided by the number of input pixels, so that image size does not affect them. Finally, we smooth both histograms with a Gaussian filter, multiply them bin by bin, and sum the resulting values.
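
The scoring criterion can be sketched as follows. The abstract does not specify the min-max normalization, the Gaussian width (`sigma=2` bins) or the zero-padded edge handling of `np.convolve`, so these are assumptions. The 256 bins, the division by pixel count, the smoothing and the sum of products follow the description above. `normalized_histogram` and `correlation_score` are hypothetical names.

```python
import numpy as np


def normalized_histogram(channel, bins=256, sigma=2.0):
    """Min-max normalize a channel, compute a 256-bin histogram divided by the
    pixel count, then smooth it with a Gaussian kernel (sigma in bins: assumed)."""
    c = channel.astype(np.float64).ravel()
    span = c.max() - c.min()
    c = (c - c.min()) / span if span > 0 else np.zeros_like(c)
    hist, _ = np.histogram(c, bins=bins, range=(0.0, 1.0))
    hist = hist / c.size  # independent of image size
    radius = int(3 * sigma)
    offsets = np.arange(-radius, radius + 1)
    kernel = np.exp(-0.5 * (offsets / sigma) ** 2)
    kernel /= kernel.sum()
    return np.convolve(hist, kernel, mode="same")


def correlation_score(red, bg):
    """Sum of the bin-by-bin product of the smoothed RED and BG histograms."""
    return float(np.sum(normalized_histogram(red) * normalized_histogram(bg)))
```

Candidate images can then be ranked by decreasing `correlation_score`. This score compares the two channels' value distributions rather than their pixel-by-pixel agreement.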

For a first attempt, we selected and scored 228 images over the Mawrth Vallis region, using the MarsSI [4] portal to select and process the data. We chose this region both for its large number of observations and for its diversity of terrains. We kept the 35 best-scored images and split them into 256×256 tiles, since our objective is to train the model to run at 1:1 scale. This resulted in a dataset of 27,555 tiles, of which 23,421 were used for loss computation and 4,134 for validation during training (roughly an 85/15 split). With this dataset, we find that the model converges within 25 epochs.
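
The following is a sketch of the selection, tiling and train/validation split. It assumes non-overlapping tiles with partial edge tiles discarded and a random validation hold-out of ceil(15%). The abstract gives only the counts, which this split reproduces for 27,555 tiles. `select_best`, `tile` and `split_indices` are hypothetical helpers.

```python
import math

import numpy as np


def select_best(scores, k=35):
    """Return the k image IDs with the highest score (scores: dict id -> score)."""
    return sorted(scores, key=scores.get, reverse=True)[:k]


def tile(image, size=256):
    """Cut an (H, W) array into non-overlapping size x size tiles at full (1:1)
    resolution; partial tiles at the right and bottom edges are dropped (assumed)."""
    h, w = image.shape
    return [image[r:r + size, c:c + size]
            for r in range(0, h - size + 1, size)
            for c in range(0, w - size + 1, size)]


def split_indices(n, val_fraction=0.15, seed=0):
    """Shuffle tile indices and hold out ceil(val_fraction * n) for validation."""
    idx = np.random.default_rng(seed).permutation(n)
    n_val = math.ceil(n * val_fraction)
    return idx[n_val:], idx[:n_val]


train, val = split_indices(27_555)
print(len(train), len(val))  # 23421 4134
```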

Results

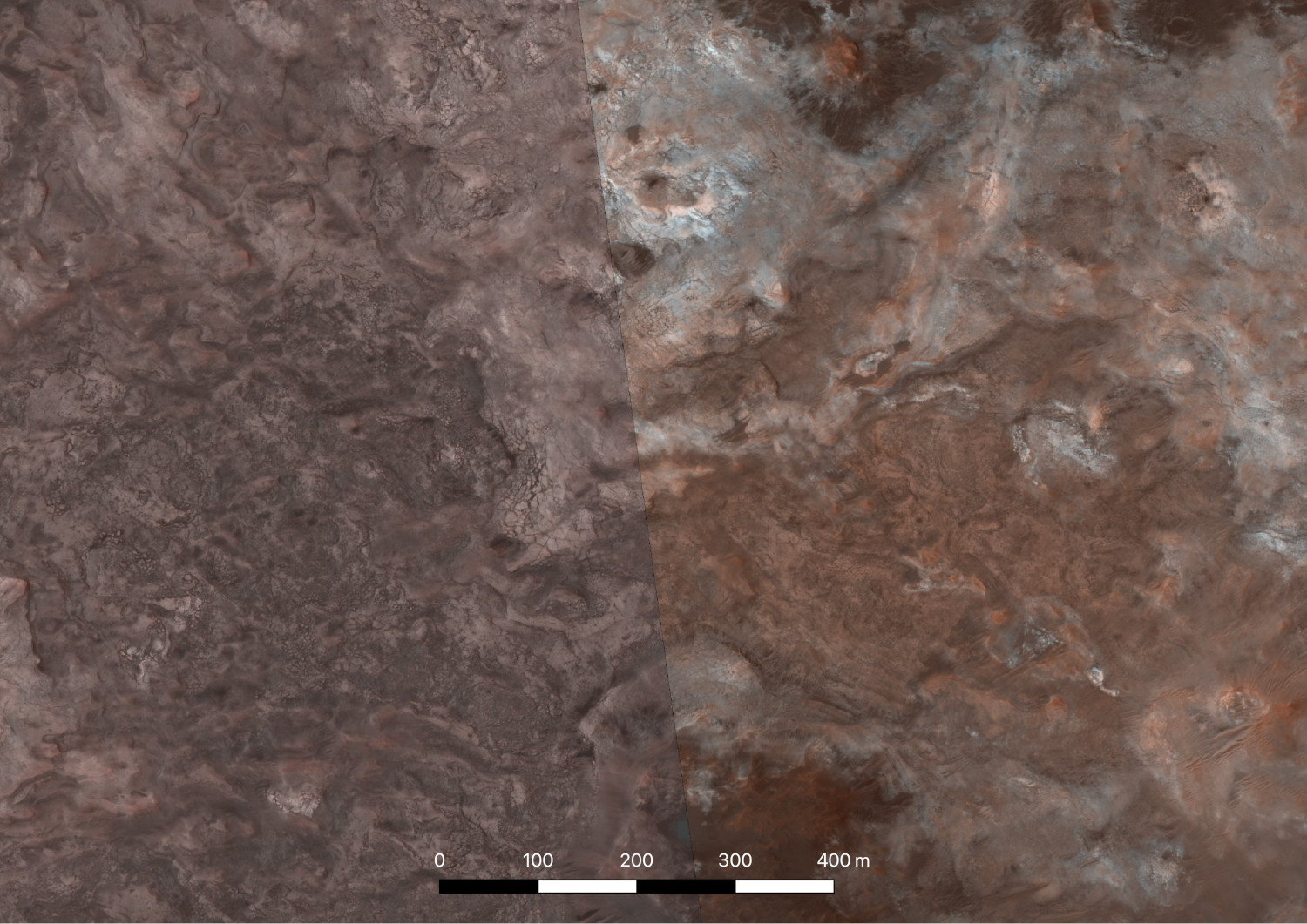

As a first step, we validate our colorizer on images with highly correlated RED/BG histograms, since this is the kind of data we selected for training. At this stage of our study, we only evaluate the generated BG channel, because “natural color” images are easier for the human eye to assess (quantitative evaluation is already performed during training).

First results are encouraging, revealing that color contrasts can be well reproduced and yield image products directly usable for mapping. The red/blue balance of some units is not always faithfully reproduced. For example, the dark capping unit in the close-up of image ESP_016196_2050 is redder in the training data but bluish in the results. Next, we plan to experiment with the training set and to apply the pipeline to RB color.

Close-up of the recolorized (left) and COLOR (right) versions of image ESP_016196_2050.

References

[1] McEwen, Alfred S., et al. "Mars reconnaissance orbiter's high resolution imaging science experiment (HiRISE)." Journal of Geophysical Research: Planets 112.E5 (2007).

[2] Mandon, Lucia, et al. "Morphological and spectral diversity of the clay-bearing unit at the ExoMars landing site Oxia Planum." Astrobiology 21.4 (2021): 464-480.

[3] Ronneberger, Olaf, Philipp Fischer, and Thomas Brox. "U-net: Convolutional networks for biomedical image segmentation." Medical Image Computing and Computer-Assisted Intervention–MICCAI 2015: 18th International Conference, Munich, Germany, October 5-9, 2015, Proceedings, Part III. Springer International Publishing, 2015.

[4] Quantin-Nataf, C., et al. "MarsSI: Martian surface data processing information system." Planetary and Space Science 150 (2018): 157-170.

How to cite: Volat, M., Quantin-Nataf, C., and Millot, C.: HiRISE image colorization with a U-Net deep neural network, EPSC-DPS Joint Meeting 2025, Helsinki, Finland, 7–12 Sep 2025, EPSC-DPS2025-969, https://doi.org/10.5194/epsc-dps2025-969, 2025.