Oral presentations and abstracts

Modern space missions, ground telescopes and modeling facilities are producing huge amounts of data. A new era of data distribution and access procedures is now starting with interoperable infrastructures and big data technologies. Long-term archives exist for telescopic and space-borne observations, but high-level functions need to be set up on top of these repositories to make Solar and Planetary Science data more accessible and to favor interoperability. Results of simulations and reference laboratory data also need to be integrated to support and interpret the observations.

The Virtual Observatory (VO) standards developed in Astronomy may be adapted in the field of Planetary Science to develop interoperability, including automated workflows to process related data from different sources. Other communities have developed their own standards (GIS for surfaces, SPASE for space plasma, PDS4 for planetary mission archives…) and an effort to make them interoperable is starting.

Planetary Science Informatics and Data Analytics (PSIDA) are also offering new ways to exploit the science out of planetary data through modern techniques such as: data exploitation and collaboration platforms, visualisation and analysis applications, artificial intelligence and machine learning, data fusion and integration supported by new big data architecture and management infrastructure, potentially being hosted by cloud and scalable computing.

We call for contributions presenting progress in the fields of Solar and Planetary science databases, tools and data analytics. We encourage contributors to focus on science use cases and on international standard implementation, such as those proposed by the IVOA (International Virtual Observatory Alliance), the OGC (Open Geospatial Consortium), the IPDA (International Planetary Data Alliance) or the IHDEA (International Heliophysics Data Environment Alliance), as well as applications linked to the EOSC (European Open Science Cloud) infrastructure.

Scope

Planetary robotic missions carry vision instruments for various mission-related and science tasks such as 2D and 3D mapping, geologic characterization, atmospheric investigations, or spectroscopy for exobiology. One major application for computer vision is the characterization of scientific context, and the identification of scientific targets of interest (regions, objects, phenomena) to be investigated by other scientific instruments. Because the appearance of such potential scientific targets is highly variable, this requires well-adapted yet flexible techniques, one of them being Deep Learning. Machine learning, and in particular Deep Learning (DL), is a technique used in computer vision to recognize content in images, categorize it and find objects of specific semantics.

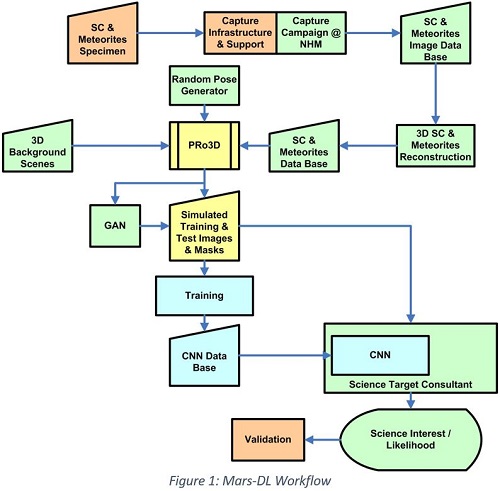

In its default workflow, DL requires large sets of training data with known / manually annotated objects, regions or semantic content. Within Mars-DL (Planetary Scientific Target Detection via Deep Learning), training focuses on a simulation approach, by virtual placement of known targets in a true context environment.

Mars-DL Approach

The Mars-DL workflow is depicted in Figure 1, with the following details:

- 3D background for the training & validation scenes is taken from 3D reconstructions using true Mars rover imagery (e.g. from the MSL Mastcam instrument).

- Scientifically interesting objects to be virtually placed in the scene are chosen to be 3D reconstructions of shatter cones (SCs) and/or Meteorites as representative set. The SCs used for 3D reconstruction are from different terrestrial impact structures and cover a wide range of lithologies, including limestone, sandstone, shale and volcanic rocks. Typical features of SCs such as striations and horsetail structure are well developed in all selected specimens and allow a clear identification. The involved stony and iron meteorites are museum quality specimens (NHM Vienna) with fusion crust and/or regmaglypts.

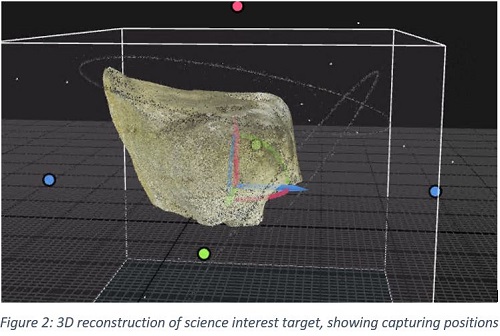

- A capturing campaign at the NHM Vienna covered more than a dozen SC and meteorite specimens, imaged from various positions under approximate ambient illumination conditions. This was followed by a 3D reconstruction process using photogrammetric techniques (Figure 2) to obtain a seamless, watertight, high-resolution mesh and albedo map for each object, yielding a 3D database of objects to be randomly placed in the realistic scenes.

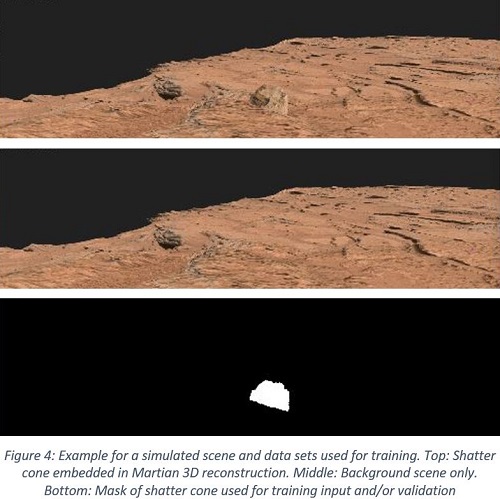

- Simulation of rover imagery for training is based on PRo3D, a viewer for the interactive exploration and geologic analysis of high-resolution planetary surface reconstructions [1]. It was adapted to efficiently render large numbers of images in batch mode, controlled by a JSON file. SCs are automatically positioned on a chosen surface region with a Halton sequence, whereby collisions are avoided. The SCs are color adjusted to blend into the scene, and in a future version they will also receive and cast shadows. Special GPU shaders provide additional types of training images such as masks and depth maps. A typical rendering data set to be used for training is depicted in Figure 4.

- A random pose generator selects representative yet random positions and fields-of-view for the training images.

- Unsupervised generative-adversarial neural image-to-image translation techniques (GAN; [2]) are then applied on the background of the generated images, thus producing realistic landscapes drawn from the domain of actual Mars rover datasets while preserving the integrity of the high-resolution shatter cone models.

- The candidate meta-architectures for the machine learning approach are Mask R-CNN (instance segmentation) and Faster R-CNN (object detection), as these currently belong to the best performing architectures for these tasks. These meta-architectures can be configured with different backbone networks (e.g. ResNet-50, Darknet-53), which provide a tradeoff between network size and convergence rate.

- A remaining unused training set will be used for evaluation.
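The Halton-based placement with collision avoidance mentioned in the list above can be illustrated with a minimal sketch. It is not the PRo3D implementation: the function names, the rectangular region and the fixed minimum distance between objects are assumptions made for illustration only.

```python
def halton(index: int, base: int) -> float:
    """Return element `index` (1-based) of the van der Corput sequence in `base`."""
    result, fraction = 0.0, 1.0
    while index > 0:
        fraction /= base
        result += fraction * (index % base)
        index //= base
    return result


def place_objects(count, region, min_distance, max_candidates=10000):
    """Pick `count` 2D positions inside region = (xmin, ymin, xmax, ymax).

    Candidates come from a 2D Halton sequence (bases 2 and 3). A candidate is
    rejected when it lies closer than `min_distance` to an accepted position,
    which is a simple stand-in for collision avoidance (hypothetical rule).
    """
    xmin, ymin, xmax, ymax = region
    placed = []
    index = 1
    while len(placed) < count and index <= max_candidates:
        x = xmin + halton(index, 2) * (xmax - xmin)
        y = ymin + halton(index, 3) * (ymax - ymin)
        index += 1
        if all((x - px) ** 2 + (y - py) ** 2 >= min_distance ** 2 for px, py in placed):
            placed.append((x, y))
    return placed


# Example: five object positions in a 10 m x 10 m patch, at least 1 m apart.
positions = place_objects(5, (0.0, 0.0, 10.0, 10.0), 1.0)
```

A low-discrepancy sequence such as Halton covers the region more evenly than independent uniform samples, so fewer candidates tend to be rejected by the distance check.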

Summary and Outlook

Science autonomy has been addressed only recently, driven by increased mobility on planetary surfaces and the upcoming need for planetary rovers to react autonomously to science opportunities, given limited data exchange resources and tight operations cycles.

An Austrian Consortium in the so-called "Mars-DL" project is targeting the adaptation and test of simulation and deep learning mechanisms for autonomous detection of scientific targets of interest during robotic planetary surface missions.

For past and present missions, the project will help to further explore and exploit the millions of existing planetary surface images that still hide undetected science opportunities.

The exploratory project will assess the feasibility of machine-learning based support during and after missions by automatic search on planetary surface imagery to raise science gain, meet serendipitous opportunities and speed up the tactical and strategic decision making during mission planning. An automatic “Science Target Consultant” (STC) is realized in prototype form, which, as a test version, can be plugged in to ExoMars operations once the mission has landed.

In its remaining runtime until end of 2020, Mars-DL training and validation will explore the possibility to search scientifically interesting targets across different sensors, investigate the usage of different cues such as 2D (multispectral / monochrome) and 3D, as well as spatial relationships between image data and regions thereon.

During operations of forthcoming missions (Mars 2020, and ExoMars & Sample Fetch Rover in particular), the STC can help avoid missed opportunities that may occur when tactical time constraints prevent an in-depth check of image material.

Acknowledgments

This abstract presents the results of the project Mars-DL, which received funding from the Austrian Space Applications Programme (ASAP14) financed by BMVIT, Project Nr. 873683, and project partners JOANNEUM RESEARCH, VRVis, SLR Engineering, and the Natural History Museum Vienna.

References

[1] Barnes R., Sanjeev G., Traxler C., Hesina G., Ortner T., Paar G., Huber B., Juhart K., Fritz L., Nauschnegg B., Muller J.P., Tao Y. and Bauer A. Geological analysis of Martian rover-derived Digital Outcrop Models using the 3D visualisation tool, Planetary Robotics 3D Viewer - PRo3D. In Planetary Mapping: Methods, Tools for Scientific Analysis and Exploration, Volume 5, Issue 7, pp 285-307, July 2018

[2] Jun-Yan Zhu, Taesung Park, Phillip Isola, Alexei A. Efros; Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks. The IEEE International Conference on Computer Vision (ICCV), 2017, pp. 2223-2232

How to cite: Paar, G., Traxler, C., Nowak, R., Garolla, F., Bechtold, A., Koeberl, C., Fernandez Alonso, M. Y., and Sidla, O.: Mars-DL: Demonstrating feasibility of a simulation-based training approach for autonomous Planetary science target selection, Europlanet Science Congress 2020, online, 21 Sep–9 Oct 2020, EPSC2020-189, https://doi.org/10.5194/epsc2020-189, 2020.

The H2020 Europlanet-2020 programme, which ended on Aug 31st, 2019, included an activity called VESPA (Virtual European Solar and Planetary Access), which focused on adapting Virtual Observatory (VO) techniques to handle Planetary Science data [1] [2]. The outcome of this activity is a contributive data distribution system where data services are located and maintained in research institutes, declared in a registry, and accessed by several clients based on a specific access protocol. During Europlanet-2020, 52 data services were installed, including the complete ESA Planetary Science Archive, and the outcome of several EU-funded projects. Data are described using the EPN-TAP protocol, whose parameters describe acquisition and observing conditions as well as data characteristics (physical quantity, data type, etc.). A main search portal, which queries all services together, has been developed to optimize the user experience. Compliance with VO standards ensures that existing tools can be used as well, either to access or visualize the data. In addition, a bridge linking the VO and Geographic Information Systems (GIS) has been installed to address formats and tools used to study planetary surfaces; several large data infrastructures were also installed or upgraded (SSHADE for lab spectroscopy, PVOL for amateur images, AMDA for plasma-related data).
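As an illustration of how a client might search a service by EPN-TAP parameters, the sketch below assembles an ADQL query string. The schema name `example_service` is hypothetical, and the use of the `target_name`, `dataproduct_type`, `time_min` and `time_max` columns (with times expressed as Julian days) reflects our reading of the EPN-TAP convention rather than anything stated in this abstract. The query is only built, not sent to a server.

```python
def adql_string(value: str) -> str:
    """Quote a string literal for ADQL, doubling embedded single quotes."""
    return "'" + value.replace("'", "''") + "'"


def build_epn_tap_query(schema, target_name=None, dataproduct_type=None,
                        time_range_jd=None, limit=100):
    """Build an ADQL query against the `epn_core` table of one data service.

    `schema` is the service schema name (hypothetical in the example below).
    `time_range_jd` is an optional (start, end) pair in Julian days; a row
    matches when its [time_min, time_max] interval overlaps that range.
    """
    conditions = []
    if target_name is not None:
        conditions.append("target_name = " + adql_string(target_name))
    if dataproduct_type is not None:
        conditions.append("dataproduct_type = " + adql_string(dataproduct_type))
    if time_range_jd is not None:
        start, end = time_range_jd
        conditions.append(f"time_max >= {float(start)} AND time_min <= {float(end)}")
    query = f"SELECT TOP {int(limit)} * FROM {schema}.epn_core"
    if conditions:
        query += " WHERE " + " AND ".join(conditions)
    return query


# Example: images of Mars acquired during 2020 (Julian day bounds).
query = build_epn_tap_query("example_service", target_name="Mars",
                            dataproduct_type="im",
                            time_range_jd=(2458849.5, 2459215.5), limit=10)
```

Because every service exposes the same table layout, the same query text can in principle be submitted to each registered service, which is what allows a portal to query all services together.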

In the framework of the starting Europlanet-2024 programme, the VESPA activity will extend this system even further: 30-50 new data services will be installed, focusing on derived data, and experimental data produced in other Work Packages of Europlanet-2024; connections between PDS4 and EPN-TAP dictionaries will make PDS metadata searchable from the VESPA portal and vice versa; Solar System data present in astronomical VO catalogues will be made accessible, e.g. from the VizieR database. The search system will be connected with more powerful display and analysis tools: a run-on-demand platform will be installed, as well as Machine Learning capacities to process the available content. Finally, long-term sustainability will be improved by setting up VESPA hubs to assist data providers in maintaining their services, and by using the new EU-funded European Open Science Cloud (EOSC). In addition to favoring data exploitation, VESPA will provide a handy and economical solution to Open Science challenges in the field.

The Europlanet 2020 & 2024 Research Infrastructure projects have received funding from the European Union's Horizon 2020 research and innovation programme under grant agreements No 654208 & 871149.

[1] Erard et al 2018, Planet. Space Sci. 150, 65-85. 10.1016/j.pss.2017.05.013. ArXiv 1705.09727

[2] Erard et al. 2020, Data Science Journal 19, 22. doi: 10.5334/dsj-2020-022.

How to cite: Erard, S., Cecconi, B., Le Sidaner, P., Rossi, A. P., Brandt, C., Rothkaehl, H., Tomasik, L., Ivanovski, S., Molinaro, M., Schmitt, B., Génot, V., André, N., Vandaele, A. C., Trompet, L., Scherf, M., Hueso, R., Määttänen, A., Millour, E., Schmidt, F., and Waldmann, I. and the VESPA team: Virtual European Solar & Planetary Access (VESPA): Progress and prospects, Europlanet Science Congress 2020, online, 21 Sep–9 Oct 2020, EPSC2020-190, https://doi.org/10.5194/epsc2020-190, 2020.

The European Space Agency conducts planetary science and heliophysics investigations with a set of interplanetary missions such as Mars Express and ExoMars 2016 (currently in operations) and Rosetta, Venus Express, SMART-1, Huygens and Giotto (in legacy phase), and will do so with the BepiColombo, Solar Orbiter, ExoMars 2020, Jupiter Icy Moons Explorer (JUICE) and Hera missions. To support the study phase, planning, operations, data analysis and exploitation processes, the availability of ancillary data and other geometrically relevant models (spacecraft and related natural bodies trajectory and orientation, reference frames, digital shape models, science payload modeling, etc.) is a must.

SPICE is an information system whose purpose is to provide scientists with the observation geometry needed to plan scientific observations and to analyze the data returned from those observations. SPICE comprises a suite of data files, usually called kernels, and software, mostly subroutines. A customer incorporates a few of the subroutines into their own program, which is built to read SPICE data and compute the geometry parameters needed for whatever task is at hand. Examples of the geometry parameters typically computed are range or altitude, latitude and longitude, phase, incidence and emission angles, instrument pointing calculations, and reference frame and coordinate system conversions. SPICE is also very adept at time conversions.
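To make the geometry parameters above concrete, the following sketch computes phase, incidence and emission angles from three vectors at a surface point. It does not call the SPICE toolkit: in practice the vectors would be derived from kernels, whereas here they are supplied by hand, and the function names are assumptions for illustration.

```python
import math


def angle_between(u, v):
    """Return the angle between two 3D vectors, in degrees."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    # Clamp to [-1, 1] to guard against rounding just outside the acos domain.
    cosine = max(-1.0, min(1.0, dot / (norm_u * norm_v)))
    return math.degrees(math.acos(cosine))


def illumination_angles(normal, to_sun, to_observer):
    """Compute illumination angles at a surface point.

    normal      : outward surface normal at the point
    to_sun      : vector from the surface point towards the Sun
    to_observer : vector from the surface point towards the spacecraft
    All three vectors must be expressed in the same reference frame.
    """
    return {
        "phase": angle_between(to_sun, to_observer),
        "incidence": angle_between(normal, to_sun),
        "emission": angle_between(normal, to_observer),
    }


# Example: spacecraft directly overhead, Sun 45 degrees from the local vertical.
angles = illumination_angles((0.0, 0.0, 1.0), (1.0, 0.0, 1.0), (0.0, 0.0, 1.0))
```

The value of an information system such as SPICE lies in providing those input vectors consistently (frames, light-time corrections, shape models); the final angle computation itself is the simple part.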

The ESA SPICE Service (ESS) leads the SPICE operations for ESA missions. The group generates the SPICE Kernel datasets (SKD) for missions in operations and in legacy phase. ESS is also responsible for the generation of SPICE Kernels for Solar Orbiter and Hera. The generation of these datasets includes the operational software that converts ESA orbit, attitude and spacecraft clock correlation data into the corresponding SPICE format. ESS also provides consultancy and support to the Science Ground Segments of the planetary missions, the Instrument Teams and the science community. ESS works in partnership with the Navigation and Ancillary Information Facility (NAIF), a group at the Jet Propulsion Laboratory (JPL/NASA) that originated the SPICE system and is responsible for evolving and maintaining its components.

The quality of the data contained in an SKD is paramount. Bad SPICE data can lead to the computation of wrong geometry, and wrong geometry can jeopardize science results. ESS, in collaboration with NAIF, is focused on providing the best SKDs possible. Kernels can be classified as Setup Kernels (Frame Kernels that describe Reference Frames of a given S/C, Instrument Kernels that describe a given sensor FoV and other characteristics, etc.) and Time-varying Kernels (SPK and CK kernels that provide Trajectory and Orientation data, SCLK that provide Time Correlation Data, etc.). Setup Kernels are iterated with the different agents involved in the determination of the data contained in those kernels (Instrument Teams, the Science Ground Segment, etc.), and Time-varying Kernels are automatically generated by the ESS SPICE Operational pipeline to feed the Operational kernels that are used in the day-to-day work of the missions in operations (planning and data analysis). These Time-varying Kernels are peer-reviewed a posteriori for the consolidation of SKDs that are archived in the PSA and PDS. This contribution will outline the status of the SKDs maintained by ESS.

ESS offers other services beyond the generation and maintenance of SPICE Kernel datasets, such as configuration and instances for WebGeocalc (WGC) and SPICE-Enhanced Cosmographia (COSMO) for the ESA missions. WGC provides a web-based graphical user interface to many of the observation geometry computations available from SPICE. A WGC user can perform SPICE computations without the need to write a program; the user needs only a computer with a standard web browser. COSMO is an interactive tool used to produce 3D visualizations of planet ephemerides, sizes and shapes; spacecraft trajectories and orientations; and instrument fields of view and footprints. This contribution will outline how the Cosmographia and WGC instances for ESA Planetary missions can be used, out of the box, for the benefit of the science community.

How to cite: Costa Sitjà, M., Escalante López, A., and Arviset, C.: An update of SPICE for ESA solar system exploration missions, Europlanet Science Congress 2020, online, 21 Sep–9 Oct 2020, EPSC2020-204, https://doi.org/10.5194/epsc2020-204, 2020.

The ESAC Science Data Centre (ESDC) is in the process of registering Digital Object Identifiers (DOIs) for datasets or groups of datasets accessible across the ESA Space Science Archives managed by ESDC. These DOIs are persistent URLs that point to DOI landing pages set up and managed by ESDC, currently located at https://www.cosmos.esa.int/web/esdc/doi

In the heliophysics domain, the first step has been to register DOIs related to the datasets measured by experiments onboard ESA heliophysics spacecraft. Around 60 experiments are flying or have been flown so far onboard ESA heliophysics missions. At the moment, 47 DOIs have been registered with CrossRef, pointing to DOI landing pages for each experiment onboard SOHO, Proba-2, Cluster, Double Star, Ulysses and ISS-Solaces; they are publicly accessible at https://www.cosmos.esa.int/web/esdc/doi/heliophysics. Discussions are ongoing with editors of major journals to promote and acknowledge the use of data from any of these experiments by citing these DOIs. Eventually, this would improve the traceability of the usage of datasets from these experiments.

Additionally, a JavaScript Object Notation (JSON) script has been added to these DOI landing pages to make them discoverable through Google Dataset Search (GDS). GDS is a new search engine that helps users to find datasets online. Twenty-five million datasets were already indexed and searchable when this service was launched in early 2020, after a beta version released in late 2018. Thanks to close interaction with the PI teams, DOIs for ESA heliophysics experiments are now easily discoverable through GDS, and not only by looking for a particular experiment name. The use of structured metadata, based on schema.org, has enabled searches by the physical processes investigated by these experiments, the type of experiment, the time coverage, the PI names or their affiliation… Various examples will be provided while the next steps of development will be outlined.
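A minimal sketch of what such a schema.org-based JSON script might look like is given below. The property names (`name`, `description`, `identifier`, `url`, `temporalCoverage`, `creator`) are standard schema.org `Dataset` properties, but all values, including the DOI and landing page URL, are placeholders invented for illustration; the actual content of the ESDC landing pages is not reproduced here.

```python
import json


def dataset_jsonld(name, description, doi, landing_page, temporal_coverage, creators):
    """Build a schema.org Dataset record as a plain dictionary."""
    return {
        "@context": "https://schema.org",
        "@type": "Dataset",
        "name": name,
        "description": description,
        "identifier": f"https://doi.org/{doi}",
        "url": landing_page,
        "temporalCoverage": temporal_coverage,  # ISO 8601 interval, start/end
        "creator": [{"@type": "Person", "name": person} for person in creators],
    }


def as_script_tag(record):
    """Wrap the record in the script element used to embed JSON-LD in a page."""
    body = json.dumps(record, indent=2)
    return '<script type="application/ld+json">\n' + body + "\n</script>"


# Placeholder values only: none of these identify a real dataset.
record = dataset_jsonld(
    name="Example magnetometer dataset",
    description="Magnetic field measurements from a hypothetical experiment.",
    doi="10.0000/example-doi",
    landing_page="https://example.org/doi/example-experiment",
    temporal_coverage="2001-01-01/2010-12-31",
    creators=["A. Example"],
)
html_snippet = as_script_tag(record)
```

Structured fields such as `temporalCoverage` and `creator` are what make a search by time coverage or PI name possible, rather than by experiment name alone.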

How to cite: Masson, A., De Marchi, G., Merin, B., Henar, M., and Wenzel, D.: Google Dataset Search and DOI for data in the ESA Space Science Archives, Europlanet Science Congress 2020, online, 21 Sep–9 Oct 2020, EPSC2020-285, https://doi.org/10.5194/epsc2020-285, 2020.

Solar System Treks Mosaic Pipeline (SSTMP) is a new, open-source tool for generation of planetary DEM and orthoimage mosaics. Opportunistic stereo reconstruction from pre-existing orbital imagery has in the past typically required significant human input, particularly in the pair selection and spatial alignment steps. Previous stereo mosaics incorporate myriad human decisions, compromising the reproducibility of the process and complicating uncertainty analysis. Lack of a common framework for recording operator input has hindered the community's ability to collaborate and share experience to improve stereo reconstruction techniques. SSTMP provides a repeatable, turnkey, end-to-end solution for creating these products. The user requests mosaic generation for a bounding box or polygon, initiating a workflow which results in deliverable mosaics usable for site characterization, science, and public outreach.

The initial release of SSTMP focuses on production of elevation and orthoimage mosaics using data from the Lunar Reconnaissance Orbiter's Narrow Angle Camera (LRO NAC). SSTMP can automatically select viable stereo pairs, complete stereo reconstruction, refine alignments using data from the LRO's laser altimeter (LOLA), and combine the data to produce orthoimage, elevation, and color hillshade mosaics.

SSTMP encapsulates the entire stereo mosaic production process into one workflow, managed by Argo Workflows, an open-source Kubernetes-based workflow engine. Each process runs in a container including all tools necessary for production and geospatial analysis of mosaics, ensuring a consistent computing environment. SSTMP automatically retrieves all necessary data. For processing steps, it leverages free and open-source software including Ames Stereo Pipeline, USGS ISIS, GeoPandas, GDAL, and Orfeo ToolBox.

How to cite: Curtis, A., Law, E., Malhotra, S., Day, B., Trautman, M., Gallegos, N., and Nainan, C.: The Solar System Treks Mosaic Pipeline (SSTMP), Europlanet Science Congress 2020, online, 21 Sep–9 Oct 2020, EPSC2020-425, https://doi.org/10.5194/epsc2020-425, 2020.

Abstract

In this work, we demonstrate techniques and results of a 3D mosaic of the whole of the Valles Marineris (VM) area of Mars using stereo images from the Mars Express High Resolution Camera (HRSC), as well as some examples of multi-resolution orthoimage and 3D mapping of 3 selected sites where recurring slope lineae (RSL) are common, at Coprates Montes, Nectaris Montes, and Capri Chaos, using Mars Reconnaissance Orbiter (MRO) Context Camera (CTX), High Resolution Imaging Science Experiment (HiRISE) and the Compact Reconnaissance Imaging Spectrometer for Mars (CRISM).

Introduction

Valles Marineris is the largest canyon system on Mars: more than 4,000 km long, 200 km wide, and up to 10 km deep. 3D mapping is essential to improving our understanding of the geological environment of Valles Marineris. Deriving a co-aligned and mosaiced 3D base map covering the whole area is generally the starting point of such studies. This work focuses on a demonstration of multi-resolution 3D mapping and co-alignment of different data sources over the VM area using a co-registered and nested HRSC-CTX-CRISM-HiRISE dataset.

3D modelling

Previously, within the EU FP-7 iMars (http://www.i-mars.eu) project, we developed an automated multi-resolution Digital Terrain Model (DTM) processing chain for NASA CTX and HiRISE stereo-pairs, called the Co-registration ASP-Gotcha Optimised (CASP-GO), based on the open source NASA Ames Stereo Pipeline (ASP) [1], tie-point based multi-resolution image co-registration [2], and the Gotcha [3] sub-pixel refinement method. The CASP-GO system guarantees global geo-referencing congruence with respect to the aerographic coordinate system defined by HRSC level-4 products and thence to the MOLA DTM, providing much higher resolution stereo-derived DTMs.

3D area mosaic using HRSC

HRSC is now on its ~5,700th orbit onboard Mars Express (totalling >20,000 HRSC products), covering ~98% of the Martian surface at a spatial resolution higher than 100 m/pixel. Among these, DLR has processed HRSC stereo images (level 4 DTMs, [4]), at 50-150 m/pixel resolution, that now cover ~50% of the planet’s surface. At Valles Marineris, the DLR HRSC level 4 DTMs have covered a large portion of the canyons, leaving several large gaps unprocessed or having missing data.

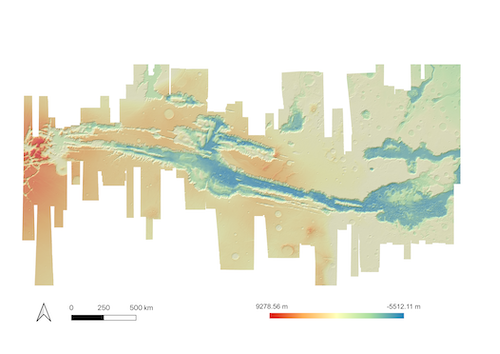

We created a further 11 HRSC single strip DTMs and Orthorectified images (ORIs) using HRSC level 2 stereo images to cover these gaps. These are processed using CASP-GO [2] and co-registered with the existing DLR HRSC level 4 DTMs and ORIs to allow seamless mosaicing for the whole of Valles Marineris. Also, to eliminate the need for bundle block adjustment, both UCL and DLR HRSC level 4 DTMs are co-aligned with the MOLA DTM using an iterative closest point co-alignment which is part of ASP [1]. Figure 1 shows a colourised and hill-shaded DTM of the whole area. HRSC level 4 ORIs are then generated using this mosaiced DTM, radiometrically corrected, and brightness/contrast adjusted to produce a corresponding HRSC ORI mosaic [5].

Figure 1 HRSC DTM merged DLR + UCL mosaic of VM at 50m/gridpoint

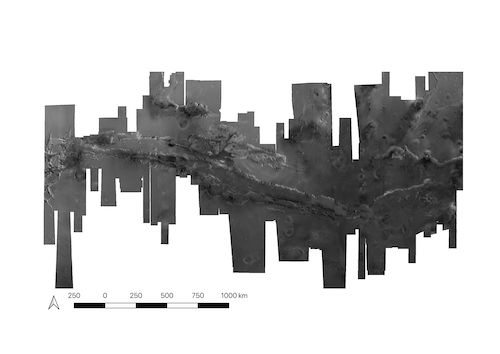

Figure 2 HRSC ORI mosaic of VM generated at 12.5m after brightness adjustment [5]
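The iterative closest point (ICP) co-alignment used for the DTMs can be illustrated with a deliberately simplified sketch. This is not the ASP implementation: it estimates a translation only (no rotation or scale), uses a brute-force nearest-neighbour search, and all names and test data are assumptions chosen for illustration.

```python
def nearest(point, cloud):
    """Return the point of `cloud` closest to `point` (brute-force search)."""
    return min(cloud, key=lambda q: sum((a - b) ** 2 for a, b in zip(point, q)))


def icp_translation(source, target, max_iterations=50, tolerance=1e-9):
    """Estimate the translation that aligns `source` points to `target` points.

    Each iteration pairs every source point with its nearest target point and
    moves the source by the mean offset of those pairs, which is the
    least-squares translation for fixed correspondences. Iteration stops when
    the update becomes smaller than `tolerance`.
    """
    moved = [tuple(p) for p in source]
    total = [0.0, 0.0, 0.0]
    for _ in range(max_iterations):
        matches = [nearest(p, target) for p in moved]
        count = len(moved)
        step = [sum(m[i] - p[i] for p, m in zip(moved, matches)) / count
                for i in range(3)]
        moved = [tuple(p[i] + step[i] for i in range(3)) for p in moved]
        total = [total[i] + step[i] for i in range(3)]
        if sum(s * s for s in step) ** 0.5 < tolerance:
            break
    return tuple(total), moved


# Synthetic reference surface on a 1-unit grid, and a copy shifted by a known offset.
reference = [(float(x), float(y), 0.1 * x * y) for x in range(5) for y in range(5)]
shifted = [(x + 0.2, y - 0.1, z + 0.3) for x, y, z in reference]
translation, aligned = icp_translation(shifted, reference)
```

ICP only converges to the right answer when the initial misalignment is small relative to the point spacing, which is one reason a coarse prior registration is normally applied first.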

Automated co-registration of CTX, HiRISE and CRISM

In order to study RSL features, we produce stacks of multi-resolution products using the HRSC DTM and ORI as the basemap. We here show an example of one of the CTX DTMs and ORIs for Capri Chaos which is one of the three selected study sites. The CTX ORIs are co-registered with the HRSC ORI mosaic using the tie-point based image co-registration pipeline described in [2]. The CTX DTMs are then spatially adjusted according to the ORI tie-point and co-aligned vertically to the HRSC DTM mosaic using a B-Spline fitting method to eliminate any residual jitter. The spatial agreement between CTX ORIs and HRSC ORI mosaic is at subpixel level of the base image (~5m). The vertical agreement between CTX DTMs and HRSC/MOLA DTM mosaic can be reduced from several hundreds of metres to less than 10 m (on average) and 50 m (at maximum).

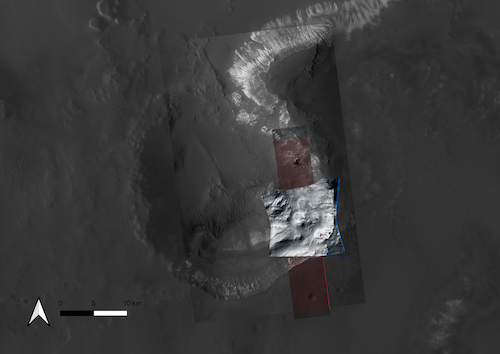

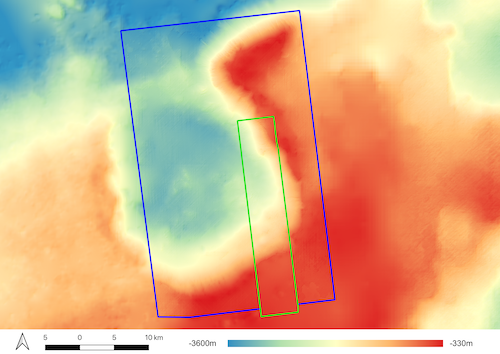

Subsequently, we have also corrected the UoA HiRISE ORIs and DTMs using the same joint image co-registration and vertical alignment method with respect to the CTX basemap. The CRISM data are also co-registered with the CTX ORI using a modified version of the tie-point based image co-registration pipeline, reducing several hundreds of metres of spatial misalignment to less than 18 metres. An example of the co-registered nested stack is shown in Figure 3 and of the CTX DTM in Figure 4.

Figure 3 Co-registered HiRISE ORI (at 90% transparency) and unco-registered UoA HiRISE ORI (in red), co-registered CRISM (at 90% transparency) and unco-registered raw CRISM (blue) image, shown on top of the UCL CTX ORI and HRSC ORI mosaic at Capri Chaos in the Valles Marineris.

Figure 4 Colourised and hillshaded figures of co-aligned and nested HiRISE DTM (in green bounding box), CTX DTM (in blue bounding box), and HRSC DTM mosaic at Capri Chaos at VM.

Conclusion

Precisely co-registered HiRISE, CRISM, CTX and HRSC data will be used as a basis for future comprehensive analyses of RSL sites, using time-series data to track RSL development and topographic analysis to constrain the slopes over which they propagate, combined with compositional information derived from spectral and photometric data.

Acknowledgements

The research leading to these results is receiving funding from the UKSA Aurora programme (2018-2021) under grant no. ST/S001891/1. The research leading to these results also received partial funding from the European Union’s Seventh Framework Programme (FP7/2007-2013) under iMars grant agreement n˚ 607379. SJC is grateful for the financial support of CNES in support of her HiRISE work.

References

[1] Beyer, R., O. Alexandrov, S. McMichael (2018), Earth and Space Science, vol 5(9), pp. 537-548.

[2] Tao, Y., J.-P. Muller, W. Poole (2016), Icarus, vol 280, pp. 139-157.

[3] Shin, D. and J.-P. Muller (2012), Pattern Recognition, vol 45(10), pp. 3795-3809.

[4] Gwinner et al. (2016), PSS, vol 126, pp. 93-138.

[5] Michael, G.G. et al. (2016), PSS, vol 121, pp. 18-26.

How to cite: Tao, Y., Michael, G., Muller, J.-P., and Conway, S.: 3D multi-resolution mapping of Valles Marineris to improve the understanding of RSLs, Europlanet Science Congress 2020, online, 21 Sep–9 Oct 2020, EPSC2020-430, https://doi.org/10.5194/epsc2020-430, 2020.

In the era of big data and cloud storage and computing, new ways for scientists to approach their research are emerging, which impact directly how science progresses and discoveries are made. This development has led the European Space Agency (ESA) to establish a reference framework for space mission operation and exploitation by scientific communities: the ESA Datalabs (EDL). The guiding principle of the EDL concept is to move the user to the data and tools, and to enable users to publish applications (e.g. processors, codes, pipelines, analysis and visualisation tools) within a trusted environment, close to the scientific data, and permitting the whole scientific community to discover new science products in an open and FAIR approach.

In this context we will present a prototype science application (aka Sci-App) for the exploration and visualization of Mars and Venus using the SPICAM/V Level-2 data available from the ESA Planetary Science Archive (PSA). This demonstrator facilitates the extraction and compilation of scientific data from the PSA and eases their integration with other tools through VO interoperability, thus increasing their scientific impact. The tool’s key modular functionalities are 1) interactive data query and retrieval (i.e. search archive metadata), 2) interactive visualisation (i.e. geospatial info of query results, data content display of spectra, atmospheric vertical profiles), 3) data manipulation (i.e. create local maps or data cubes), and 4) data analysis (in combination with other connected VO tools). The application allows users to select, visualise and analyse both Level 2A products, which consist of e.g. transmission and radiance spectra, and Level 2B products, which consist of retrieved physical parameters, such as atmospheric aerosol properties and vertical density profiles for (trace) gases in the Martian or Venusian atmosphere.

Our goal is to deploy the (containerised) Sci-App to the EDL and similar initiatives for uptake by the space science community. In the future, we expect to incorporate access to other Mars/Venus atmospheric data sets, particularly the measurements obtained with the NOMAD and ACS instruments on the ExoMars Trace Gas Orbiter. The community can also use this application as a starting point for their own tool development for other data products/missions.

How to cite: Cox, N., Bernard-Salas, J., Ferron, S., Vergely, J.-L., Blanot, L., and Mangin, A.: Mars and Venus Exploration: A mapping, visualisation, and data analysis application for SPICAM/V high-level science data products, Europlanet Science Congress 2020, online, 21 Sep–9 Oct 2020, EPSC2020-759, https://doi.org/10.5194/epsc2020-759, 2020.

Abstract

With new missions being selected, missions moving to post-operations, and missions starting their journey to various targets in the Solar System, the European Space Agency’s Planetary Science Archive [1] (http://psa.esa.int) (PSA) is in constant evolution to support the needs of the projects and of the scientific community.

What happened since last year?

The past year has been good for the European Space Agency (ESA) Solar System missions and the PSA, with the successful flyby of Earth by the BepiColombo mission to Mercury. The ExoMars 2016 mission is performing nominally and is quickly delivering numerous scientific observations. As is common for ESA missions, access to the data is protected and reserved to members of the science team for the first months of the mission. Once the products are ready to go public, the PSA performs a scientific peer-review to ensure that the products to be made public are of excellent quality for all future users.

During the first half of 2020, the PSA has successfully peer-reviewed the CaSSIS and NOMAD observations. Those products are now being made public on a systematic basis once the proprietary period elapses (generally between 6 and 12 months).

Early in 2020, filters to search data with geometrical values (e.g., longitude, phase angle, slant distance) were enabled. For now this service works for Mars Express and Rosetta, but it will soon be extended to other missions.

One of the main new services provided to the scientific community in 2020 is the Guest Storage Facility (GSF), which allows users to archive derived products. Products such as geological maps, Digital Terrain Models, new calibrated files, and others can be stored in the GSF in the format most used by the users. Contact us to preserve your science!

Finally, by the end of 2020 users of the PSA will have access to new services based on Geographical Information Systems.

You can contribute to the PSA!

At the PSA we constantly interact with our users to ensure that our services are in line with the expectations and needs of the community. We encourage feedback from community scientists through:

- PSA Users Group: A group of scientific experts advising the PSA on strategic development;

- Direct interactions: Scientists from the PSA are available and eager to receive your comments and suggestions;

- ESA missions: If you are part of a mission archiving its data at the PSA, tell us how your data should best be searched and used.

Acknowledgement

The authors are very grateful to all the people who have contributed over the last 17 years to ESA's Planetary Science Archive. We are also thankful to ESA’s teams who are operating the missions and to the instrument science teams who are generating and delivering scientific calibrated products to the archive.

References

[1] Besse, S. et al. (2017) Planetary and Space Science, 10.1016/j.pss.2017.07.013, ESA's Planetary Science Archive: Preserve and present reliable scientific data sets.

How to cite: Besse, S., Barbarisi, I., de Marchi, G., Merin, B., Arenas, J., Bentley, M., de Castro, S., Docasal, R., Coia, D., Fraga, D., Grotheer, E., Heather, D., Lim, T., Martinez, S., Montero, A., Osinde, J., Raga, F., Ruano, J., and Saiz, J.: ESA’s Planetary Science Archive, updates since last year!, Europlanet Science Congress 2020, online, 21 Sep–9 Oct 2020, EPSC2020-921, https://doi.org/10.5194/epsc2020-921, 2020.

VESPA (Virtual European Solar and Planetary Access, Erard et al. EPSC2020-190, 2020) is a network of interoperable data services covering all fields of Solar System Sciences. It is a mature project, developed within EUROPLANET-FP7 and EUROPLANET-2020-RI. The latter ended in Aug. 2019. It is further supported under the EUROPLANET-2024-RI project (started in Feb. 2020).

The VESPA data providers use a standard API based on the Table Access Protocol (TAP) of the IVOA (International Virtual Observatory Alliance) and on EPNcore, a common dictionary of metadata developed by the VESPA team. The VESPA services consist of searchable metadata tables with links (URLs) to science data products (files, web services...). The VESPA metadata include relevant keywords for scientific data discovery, such as data coverage (temporal, spectral, spatial...), data content (physical parameters, processing level...), data origin (observatory, instrument, publisher...) or data access (format, URL, size...). VESPA hence provides a unified data discovery service for Solar System Sciences.
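As an illustration of how such a metadata table can be searched, the following minimal sketch builds a TAP synchronous query URL carrying an ADQL query on EPNcore-style columns. The service URL and schema name are hypothetical placeholders, and the time bounds are assumed to be expressed as Julian dates; a real service publishes its own endpoint and table name.

```python
from urllib.parse import urlencode

# Hypothetical endpoint and schema name, used for illustration only.
SERVICE_URL = "https://example.org/tap"
SCHEMA = "myservice"


def build_epn_tap_query(target, t_min_jd, t_max_jd, limit=10):
    """Return a TAP synchronous-query URL selecting granules for one target.

    The time window is assumed to be given as Julian dates.
    """
    adql = (
        f"SELECT TOP {limit} granule_uid, target_name, time_min, time_max, access_url "
        f"FROM {SCHEMA}.epn_core "
        f"WHERE target_name = '{target}' "
        f"AND time_max >= {t_min_jd} AND time_min <= {t_max_jd}"
    )
    # Standard TAP parameters for a synchronous ADQL query.
    params = {"REQUEST": "doQuery", "LANG": "ADQL", "FORMAT": "votable", "QUERY": adql}
    return f"{SERVICE_URL}/sync?{urlencode(params)}"


# Granules about Mars overlapping the year 2020 (JD 2458849.5 to 2459215.5).
url = build_epn_tap_query("Mars", 2458849.5, 2459215.5)
print(url)
```

The returned table would contain one row per data product, with the `access_url` column pointing to the science file itself.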

The architecture of the VESPA network is distributed (the metadata tables are hosted and maintained by the VESPA providers), but it is not redundant. The hosting and maintenance of the VESPA providers' servers has proved to be a single point of failure for small teams with little IT support. The VESPA-Cloud project with EOSC-hub will greatly facilitate the sustainability of data sharing from small teams, as well as from teams whose institutions have restrictive firewall policies (such as labs hosted by space agencies, e.g., DLR in Germany). Most of the VESPA data providers use the same server software, namely DaCHS (Data Centre Helper Suite), developed by the Heidelberg team included in the project.

VESPA-Cloud provides a cloud-hosted facility to host VESPA-compliant metadata tables in a controlled and maintained software environment. The VESPA providers will focus on the science application configuration, whereas the VESPA core team will support them with the maintenance of the deployed instances. The development of each VESPA provider's data service will be managed with a git versioning system (GitHub or an institute GitLab).

An instance of the VESPA query interface portal will also be implemented on a virtual machine provided by EOSC-hub.

The community AAI (Authentication and Authorisation Infrastructure) is provided by GÉANT, through its eduTEAMS service. In the context of EOSC-hub, the EGI Federation is providing virtual machine services from IN2P3 and CESNET, while data storage and registry services will be provided by EUDAT.

In the course of the VESPA-Cloud project, we will implement cloud-storage API connectors (such as Amazon S3, iRODS, etc.) in the DaCHS framework to read data in the cloud and ingest metadata. Since DaCHS is used worldwide by many data centres to share astronomical and solar system data collections, many teams will benefit from this development.

The Europlanet-2024 Research Infrastructure project has received funding from the European Union's Horizon 2020 research and innovation programme under grant agreement No 871149. This work used the EGI Infrastructure with the dedicated support of IN2P3-IRES and CESNET-MCC. The eduTEAMS Service is made possible via funding from the European Union's Horizon 2020 research and innovation programme under Grant Agreement No. 856726 (GN4-3).

How to cite: Cecconi, B., Philippe, H., Shih, A., Le Sidaner, P., Grenier, B., Atherton, C., Stéphane, E., Demleitner, M., Molinaro, M., and Rossi, A. P.: VESPA-Cloud, Europlanet Science Congress 2020, online, 21 Sep–9 Oct 2020, EPSC2020-1015, https://doi.org/10.5194/epsc2020-1015, 2020.

ABSTRACT

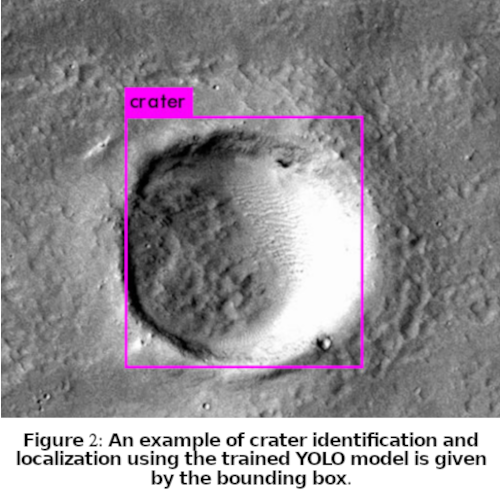

Impact crater identification and localization are vital to age estimation studies of all planetary surfaces. This study illustrates the utility of an object-detection-based approach for impact crater identification. We demonstrate this using the You Only Look Once (YOLO) object detection technique to localize and identify impact craters in imagery from the Context Camera onboard the Mars Reconnaissance Orbiter. The model was trained on a chipped and augmented dataset of 1450 images for 13000 iterations and tested on 750 images. Accuracy and sensitivity studies reveal a Mean Average Precision of 78.35%, precision of 0.81, recall (sensitivity) of 0.76 and F1 score of 0.78. We conclude that object detection techniques such as YOLO may be employed for crater counting studies and database generation.

INTRODUCTION

Cumulative crater size-frequency distribution studies have been widely used in establishing the age of planetary landforms [1,2]. The crater identification process for such studies has been largely manual, with impact craters digitized on GIS platforms. Recent studies have explored the potential of automatic crater detection techniques such as Canny Edge Detection and Circular Hough Transform [3], machine learning classifiers such as random forest [4] and support vector machines [5], and deep learning-based semantic classification [6,7]. These techniques are often computationally intensive [6,7] or yield lower accuracy [3,4,5]. We have implemented a different approach based on bounding-box object detection. Here, we present an automatic crater detection algorithm that uses the YOLO object detection technique [8] implemented on the Google Colaboratory cloud service platform.

DATA PREPARATION

15 datasets near the Hesperia Dorsa and Tartarus Montes regions of the Martian surface, imaged by the Context Camera payload onboard the Mars Reconnaissance Orbiter, were used for our study. Impact craters larger than 50 m were annotated as circles using the QGIS software, and the attributes of centroid coordinates, minimum and maximum annotation extents, and diameter were extracted. Clusters of craters were identified using the Density-Based Spatial Clustering of Applications with Noise (DBSCAN) [9] algorithm. Image chips of 512x512 pixels containing the crater clusters were extracted. A dataset of 528 image chips was generated after clustering and chipping. We next performed data augmentation using the Keras image data generator class and generated a dataset of 2200 images. This dataset was split 2:1 into train and test sets and used for training the darknet implementation of YOLO on the custom dataset.
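The step from circular annotations to training labels can be sketched as follows. This is a minimal illustration, not the authors' code: it assumes annotations already converted to pixel coordinates and writes them in the normalized `class x_center y_center width height` label format used by darknet. The function name and the example coordinates are hypothetical.

```python
def circle_to_yolo(cx, cy, diameter, chip_x0, chip_y0, chip_size=512, class_id=0):
    """Convert a circular crater annotation into a darknet/YOLO label line.

    (cx, cy) and diameter are in full-image pixels; (chip_x0, chip_y0) is the
    top-left corner of the square chip in the same pixel frame.
    """
    # Normalize the centre and box size by the chip dimensions.
    x = (cx - chip_x0) / chip_size
    y = (cy - chip_y0) / chip_size
    w = h = diameter / chip_size  # a circle's bounding box is a square
    return f"{class_id} {x:.6f} {y:.6f} {w:.6f} {h:.6f}"


# A 64-pixel crater centred in a chip whose corner is at (1024, 512).
label = circle_to_yolo(cx=1280, cy=768, diameter=64, chip_x0=1024, chip_y0=512)
print(label)  # 0 0.500000 0.500000 0.125000 0.125000
```

Craters that straddle a chip edge would need clipping before normalization, which this sketch leaves out.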

OBJECT DETECTION

The darknet implementation of YOLO was used for object detection. The images were subjected to convolutional neural network-based feature extraction using the ReLU activation. A learning rate of 0.001 was employed and the dataset was divided into batches of 64 images with a mini-batch size of 16. The model was trained for 16000 iterations using Google Colaboratory with a GPU hardware accelerator.

RESULTS

Results were validated by computing the Mean Average Precision (mAP) and F1 scores for the test set every 1000 iterations. Training for 13000 iterations resulted in the optimal F1 and mAP, as evident from Figure 1. The average loss decreased to 0.235. The detection resulted in an F1 score of 0.78, mAP (for Intersection over Union of 0.5 and higher) of 78.35%, precision of 0.81 and recall of 0.76. The weights computed at the end of 13000 iterations were used for detection. A sample detection is depicted in Figure 2.
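For readers less familiar with these metrics, the short sketch below shows how Intersection over Union (IoU) is computed for two boxes and how the F1 score follows from precision and recall. It is a generic illustration rather than the evaluation code used in the study; the example boxes are made up.

```python
def iou(a, b):
    """Intersection over Union of two boxes given as (x_min, y_min, x_max, y_max)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Two 10x10 boxes offset by half a width overlap with IoU = 1/3, which is
# below the 0.5 threshold and would not count as a correct detection.
overlap = iou((0, 0, 10, 10), (5, 0, 15, 10))

# The reported precision and recall are consistent with the reported F1.
f1 = f1_score(0.81, 0.76)
print(round(overlap, 3), round(f1, 2))  # 0.333 0.78
```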

DISCUSSION

This study establishes the utility and compatibility of object detection algorithms such as YOLO for automatic crater detection. Improvements to the model can be attempted by incorporating multi-source detection and by increasing the number and variety of training samples. This technique can also be extended for use with existing global databases to produce a well-tuned pre-trained model, which researchers can use for crater identification over any region of study. Further, such models can be useful in augmenting and updating planetary databases with minimal manual intervention.

REFERENCES

1. Ivanov, B. A., et al. "The comparison of size-frequency distributions of impact craters and asteroids and the planetary cratering rate." Asteroids III 1 (2002): 89-101.

2. Michael, G. G., and Gerhard Neukum. "Planetary surface dating from crater size-frequency distribution measurements: Partial resurfacing events and statistical age uncertainty." Earth and Planetary Science Letters 294.3-4 (2010): 223-229.

3. Galloway, M. J., et al. "Automated Crater Detection and Counting Using the Hough Transform and Canny Edge Detection." LPICo 1841 (2015): 9024.

4. Yin, Jihao, Hui Li, and Xiuping Jia. "Crater detection based on gist features." IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing 8.1 (2014): 23-29.

5. Salamunićcar, Goran, and Sven Lončarić. "Application of machine learning using support vector machines for crater detection from Martian digital topography data." cosp 38 (2010): 3.

6. Silburt, Ari, et al. "Lunar crater identification via deep learning." Icarus 317 (2019): 27-38.

7. Lee, Christopher. "Automated crater detection on Mars using deep learning." Planetary and Space Science 170 (2019): 16-28.

8. Redmon, Joseph, et al. "You only look once: Unified, real-time object detection." Proceedings of the IEEE conference on computer vision and pattern recognition. 2016.

9. Ester, Martin, et al. "A density-based algorithm for discovering clusters in large spatial databases with noise." Kdd. Vol. 96. No. 34. 1996.

How to cite: Chaudhary, V., Mane, D., Anilkumar, R., Chouhan, A., Chutia, D., and Raju, P.: An Object Detection Approach to Automatic Crater Detection from CTX Imagery, Europlanet Science Congress 2020, online, 21 Sep–9 Oct 2020, EPSC2020-1029, https://doi.org/10.5194/epsc2020-1029, 2020.

The advent of new wide-field, ground-based and multiwavelength space-based sky surveys will lead to a large amount of data that needs to be efficiently processed, archived and disseminated. In addition, unlike astrometric observations, which have a centralized data repository acting under IAU mandate (the MPC), the results of ground-based observations devoted to NEO physical characterization are sparsely distributed. It is therefore desirable to make data on NEO physical characterization available through a centralized access point able to guarantee their long-term archiving, as well as to ensure the maintenance and the evolution of the corresponding data products.

Within the NEOROCKS EU project (“The NEO Rapid Observation, Characterization and Key Simulations”, SU-SPACE-23-SEC-2019 from the Horizon 2020 programme), as part of the WP5 (Data Management) activities, we propose the implementation of a single NEO Physical Properties database hosting all the data products resulting from NEO observations devoted to physical characterization, in order to ensure efficient dissemination of the data products and their short- and long-term storage and availability. The NEOROCKS database will be designed using an EPNCore-derived data model (see [1]) ready for the EPN-TAP service implementation. It will thus be able to store, maintain and regularly update all levels of processing, from raw data to final products (e.g. size, rotation, spectral type), beyond the duration of the project, as a reliable source of services and data on NEO physical properties hosted at ASI SSDC.

The NEOROCKS database will import NEO orbital elements from the Near-Earth Object Dynamics Site (NEODyS), while NEO physical parameters will be partly provided by NEOROCKS users and partly imported from external data sources. In particular, the NEO physical properties database available at the ESA NEO Coordination Center, which has hosted the legacy of the European Asteroid Research Node (EARN) since 2013 and which will host Solar System Objects (SSO) NEO physical properties in the Gaia DR3 expected for the second half of 2021, will be imported and integrated into the NEOROCKS Physical Properties Database. Thus, a single query interface will make it possible to display both dynamical and physical properties of any given NEO, or to search for samples within the NEO population satisfying certain requirements (e.g. targets for astronomical observations and mission analysis).

Acknowledgements: The LICIACube team acknowledges financial support from Agenzia Spaziale Italiana (ASI, contract No. 2019-31-HH.0 CUP F84I190012600).

References

[1] Erard S., Cecconi B., Le Sidaner P., Berthier J., Henry F., Molinaro M., Giardino M., Bourrel N., Andre N., Gangloff M., Jacquey C., Topf F. 2014. The EPN-TAP protocol for the Planetary Science Virtual Observatory (2014). Astronomy And Computing, vol. 7-8, p. 52-61, ISSN: 2213-1337, doi: 10.1016/j.ascom.2014.07.008

How to cite: Giunta, A., Giardino, M., Perozzi, E., Polenta, G., and Zinzi, A. and the NEOROCKS: A NEO Physical Properties database within the NEOROCKS EU project, Europlanet Science Congress 2020, online, 21 Sep–9 Oct 2020, EPSC2020-1047, https://doi.org/10.5194/epsc2020-1047, 2020.

The planetary community has access to a wealth of raw research data by using central data distribution platforms such as the Planetary Data System (PDS) [1], the Planetary Science Archive (PSA) [2] or specific mission archives. This research data becomes usable through its contents, i.e., the measurement, but also through the definition of extensive metadata descriptions without which raw data would be incomplete or even useless.

Beyond these archives, the International Planetary Data Alliance (IPDA) is responsible for the maintenance of the quality and performance of data from space instruments [3]. Established by NASA and the planetary science community in 2014, the Mapping and Planetary Spatial Infrastructure Team (MAPSIT), originally named the Cartography Research Assessment Group (CRAG), takes care of the American objectives in space by ensuring the usability of planetary data for scientific and engineering communities [4]. Besides these, further efforts focus on, e.g., data access systems [e.g. 5], interoperability [e.g. 6] and infrastructure topics [e.g. 7] related to mission data.

When it comes to data products derived from these raw data, such as processed image products, terrain models, modelled spectral information, maps, diagrams, data tables etc., there is a considerable lack of central archives allowing the research community to store and find derived research-data products. To enable a healthy planetary research-product life cycle (cf. [8]), questions on re-usability of planetary research products need to be addressed and a coordination of organizational processes is required. This involves questions regarding individual entities dealing with the modification, review, or dissemination of data. In order to facilitate such processes, concepts and initiatives such as OPEN data principles [9] and FAIR data [10] have been developed, and infrastructure frameworks have been conceptualized and implemented. Spatial Data Infrastructures (SDI) emerged in the 1990s due to the growing amount of data and the need to make decisions based on reliably maintained data [11].

The SDI Directive INSPIRE of the European Commission represents one of the largest SDIs and was established in 2007 at the European level to enable sharing of environmental spatial information [12]. It builds on established standards such as those of the OGC [13] and ISO [14] for metadata and services, and provides data models, vocabularies and other mainly technical specifications [15]. In the Earth sciences, infrastructures have been developing organically over the years and have adapted to ever-growing needs. This approach differs from the developments in the planetary sciences that we are currently witnessing, yet it offers an extremely valuable base of knowledge and experience that can help to avoid similar problems and to deal with challenges right from the beginning.

In order to learn from experience in Earth observation and mapping, and to be able to adopt its structures for the provision and reuse of planetary research products, we aim to streamline the discussion within the planetary community. We discuss here the common planetary research-data life cycle and highlight how the existing life cycle could be positively affected and enriched, from a user- and process-centric view, by translating established SDI experience and workflows of INSPIRE into the planetary domain. We will discuss this process using a discrete research data product, the map, because of its high level of abstraction and complexity. We will subsequently abstract this research product and transfer its characteristics to a wider range of different research product types.

References:

[1] Planetary Data System (PDS), 2020, https://pds.nasa.gov/

[2] Planetary Science Archive (PSA), 2020, https://archives.esac.esa.int/psa/#!Home%20View

[3] International Planetary Data Alliance, IPDA, 2020, https://planetarydata.org/

[4] Mapping and Planetary Spatial Infrastructure Team (MAPSIT), 2020 https://www.lpi.usra.edu/mapsit/

[5] Erard, S. et al, 2018, VESPA: A community-driven Virtual Observatory in Planetary Science. PSS 150, doi.org/10.1016/j.pss.2017.05.013

[6] Hare, T. et al, 2018, Interoperability in planetary research for geospatial data analysis, PSS 150, doi.org/10.1016/j.pss.2017.04.004

[7] Laura, J. et al. 2017, Towards a Planetary Spatial Data Infrastructure, ISPRS Int. J. Geo-Inf. 6, 181, doi:10.3390/ijgi6060181

[8] Office of Information, Knowledge and Library Services, 2020, Research data life cycle, https://blogs.ntu.edu.sg/lib-datamanagement/data-lifecycle/

[9] OPEN Knowledge Foundation, 2020, Open Data Handbook. http://opendatahandbook.org/guide/en/

[10] Wilkinson, M. et al., 2016, The FAIR Guiding principles for scientific data management and stewardship. Scientific Data, doi:10.1038/sdata.2016.18

[11] Global Spatial Data Infrastructure (GSDI), 2001, The SDI Cookbook version 1.1, Editor: D. Nebert, Technical Working Group Chair

[12] INSPIRE as Knowledge Base 2020. https://inspire.ec.europa.eu/

[13] Open Geospatial Consortium (OGC), 2020, https://www.ogc.org/

[14] International Organization for Standardization (ISO), 2020, https://www.iso.org/standards.html

[15] INSPIRE, 2007, Directive 2007/2/EC of the European Parliament and of the Council of 14 March 2007 establishing an Infrastructure for Spatial Information in the European Community (INSPIRE)

How to cite: Nass, A., Asch, K., van Gasselt, S., and Rossi, A. P.: Spatial and Open Research Data Infrastructure for Planetary Science - Lessons learned from European developments, Europlanet Science Congress 2020, online, 21 Sep–9 Oct 2020, EPSC2020-1058, https://doi.org/10.5194/epsc2020-1058, 2020.

The majority of planetary missions return only one thing: data. The volume of data returned from distant planets is typically minuscule compared to Earth-based investigations, and it decreases further for more distant Solar System missions. Meanwhile, the data produced by planetary science instruments continue to grow along with mission ambitions. Moreover, the time required for decisional data to reach science and operations teams on Earth, and for commands to be sent, also increases with distance. To maximize the value of each bit within these mission time and volume constraints, instruments need to be selective about what they send back to Earth. We envision instruments that analyze science data onboard, such that they can adjust and tune themselves, select the next operations to be run without requiring ground-in-the-loop, and transmit home only the most interesting or time-critical data.

Recent developments have demonstrated the tremendous potential of robotic explorers for planetary exploration and for other extreme environments. We believe that science autonomy has the potential to be as important as robotic autonomy (e.g., roving terrain) in improving the science potential of these missions because it directly optimizes the returned data. Onboard science data processing, interpretation, and reaction, as well as prioritization of telemetry, therefore comprise new, critical challenges of mission design.

We present a first step toward this vision: a machine learning (ML) approach for analyzing science data from the Mars Organic Molecule Analyzer (MOMA) instrument, which will land on Mars within the ExoMars rover Rosalind Franklin in 2023. MOMA is a dual-source (laser desorption and gas chromatograph) mass spectrometer that will search for past or present life on the Martian surface and subsurface through analysis of soil samples. We use data collected from the MOMA flight-like engineering model to develop mass-spectrometry-focused machine learning techniques. We first apply unsupervised algorithms in order to cluster input data based on inherent patterns and separate the bulk data into clusters. Then, optimized classification algorithms designed for MOMA’s scientific goals provide information to the scientists about the likely content of the sample. This will help the scientists with their analysis of the sample and decision-making process regarding subsequent operations.
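The unsupervised first stage can be pictured with a minimal sketch. The abstract does not name the algorithms used, so plain k-means is used here purely as a stand-in, and the four-bin "spectra" are invented toy values rather than MOMA data.

```python
def kmeans(points, initial_centroids, iterations=10):
    """Plain k-means: assign each point to its nearest centroid, then update means."""
    centroids = [list(c) for c in initial_centroids]  # copy, do not mutate input
    labels = []
    for _ in range(iterations):
        # Assignment step: index of the closest centroid (squared Euclidean distance).
        labels = [
            min(
                range(len(centroids)),
                key=lambda k: sum((p - c) ** 2 for p, c in zip(point, centroids[k])),
            )
            for point in points
        ]
        # Update step: each centroid becomes the mean of its assigned points.
        for k in range(len(centroids)):
            members = [p for p, lab in zip(points, labels) if lab == k]
            if members:
                centroids[k] = [sum(col) / len(members) for col in zip(*members)]
    return labels, centroids


# Toy spectra: normalized intensity in four mass bins (invented values).
spectra = [
    [0.9, 0.1, 0.0, 0.0],
    [0.8, 0.2, 0.0, 0.0],
    [0.0, 0.1, 0.8, 0.1],
    [0.1, 0.0, 0.7, 0.2],
]
labels, centres = kmeans(spectra, [spectra[0], spectra[2]])
print(labels)  # [0, 0, 1, 1]
```

In a pipeline like the one described, the resulting cluster labels would then feed the supervised classification stage, which is not shown here.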

We used MOMA data to develop initial machine learning algorithms and strategies as a proof of concept and to design software to support intelligent operations of more autonomous systems in development for future exploratory missions. This data characterization and categorization is the first step of a longer-term objective to enable the spacecraft and instruments themselves to make real-time adjustments during operations, thus optimizing the potentially complex search for life in our solar system and beyond.

How to cite: Da Poian, V., Lyness, E., Trainer, M., Li, X., Brinckerhoff, W., and Danell, R.: Science Autonomy and Intelligent Systems on the ExoMars Mission, Europlanet Science Congress 2020, online, 21 Sep–9 Oct 2020, EPSC2020-1114, https://doi.org/10.5194/epsc2020-1114, 2020.

At the 2017 and 2018 EPSC meetings we presented a functioning version of the NEO Data Exchange and Collaboration Service (NEODECS), a platform enabling collaboration between NEO researchers. This tool is also open to other Small Solar System Bodies such as MBAs, TNOs, etc. Observers can use this service to store, exchange or publish data from their observations, seek collaborators, broadcast observing plans and offer free telescope time. The tool has recently been updated based on feedback from researchers. We included functions to save telescopes, offer free telescope time and search for telescope offers. We also made the registration process more user-independent: in previous versions the tool required administrative input. Another improvement is the addition of tooltips describing the most important functions, shown by moving the cursor over them in the menu. To help new users not yet experienced with NEODECS, we created a Welcome Tour. It is provided in the form of instruction videos showing how to use the most important functions. The goal of this presentation is to show practical usage of the NEODECS tool and to encourage observers to use it.

How to cite: Jasik, D.: NEODECS - presentation of improved service, Europlanet Science Congress 2020, online, 21 Sep–9 Oct 2020, EPSC2020-1129, https://doi.org/10.5194/epsc2020-1129, 2020.
