Super-3D: Subpixel-Scale Topography Retrieval of Mars Using Deep Learning

- ¹ University College London, Mullard Space Science Laboratory, Space and Climate Physics, Holmbury St Mary, United Kingdom (j.muller@ucl.ac.uk)

- ² CNRS, Laboratoire de Planétologie et Géodynamique, 2 rue de la Houssinière, Nantes, France

Introduction

High-resolution digital terrain models (DTMs) play an important role in studying the processes that formed present-day planetary surfaces such as that of Mars. However, it is commonly understood that a DTM derived from a particular imaging dataset can at best achieve an effective spatial resolution similar to that of the input images, and usually a lower one, because of the approximations and/or filtering introduced by photogrammetric and/or photoclinometric pipelines. With recent successes in deep learning, it has become feasible to improve the effective resolution of an image using super-resolution restoration (SRR) networks [1], to retrieve pixel-scale topography using single-image DTM estimation (SDE) networks [2], and, by combining the two techniques, to produce subpixel-scale topography (Super-3D) from a single monocular input image [3].

Methods

Here we present our recent work [3] on combining the UCL (University College London) MARSGAN (multi-scale adaptive-weighted residual super-resolution generative adversarial network) SRR system [1] with the MADNet (multi-scale generative adversarial U-net based single-image DTM estimation) SDE system [2] to produce DTMs at subpixel-scale spatial resolution from a single input image. Our study site lies within the 3-sigma ellipse of the selected landing site of the Rosalind Franklin ExoMars rover (centred near 18.275°N, 335.368°E) at Oxia Planum.

We use 4 m/pixel ESA Trace Gas Orbiter Colour and Stereo Surface Imaging System (CaSSIS) "PAN" band images and 25 cm/pixel NASA Mars Reconnaissance Orbiter High Resolution Imaging Science Experiment (HiRISE) "RED" band images as the test datasets. We first apply MARSGAN to the original CaSSIS and HiRISE images, and then apply MADNet SDE to the resulting 1 m/pixel CaSSIS SRR images and 6.25 cm/pixel HiRISE SRR images, producing CaSSIS SRR-DTMs at 2 m/pixel and HiRISE SRR-DTMs at 12.5 cm/pixel, respectively. We present qualitative assessments of the resulting CaSSIS and HiRISE SRR-DTMs. We also provide quantitative assessments of the CaSSIS SRR-DTMs (see [3]) using the DTM evaluation technique described in [4].
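The resolution chain described above can be checked with simple arithmetic. The sketch below assumes, from the figures quoted in this abstract rather than from any stated design parameter, that MARSGAN reduces the pixel size by a factor of 4 (4 m to 1 m; 25 cm to 6.25 cm) and that MADNet produces a DTM with twice the grid spacing of the image it is applied to (consistent with the 8 m/pixel CaSSIS and 50 cm/pixel HiRISE MADNet DTMs derived from the original images). The names `SRR_UPSCALE`, `DTM_FACTOR` and `super3d_resolutions` are hypothetical.

```python
from dataclasses import dataclass

# Assumed factors, inferred from the resolutions quoted in the text
# (not official MARSGAN/MADNet parameters).
SRR_UPSCALE = 4.0  # hypothetical: SRR pixel size = input pixel size / 4
DTM_FACTOR = 2.0   # hypothetical: DTM grid spacing = 2 x image pixel size


@dataclass
class Super3DChain:
    input_image_m: float  # original image pixel size (m/pixel)
    srr_image_m: float    # SRR image pixel size (m/pixel)
    srr_dtm_m: float      # DTM from the SRR image, i.e. Super-3D (m/pixel)
    direct_dtm_m: float   # DTM from the original image, for comparison


def super3d_resolutions(input_image_m: float) -> Super3DChain:
    """Propagate pixel sizes through the SRR -> SDE chain."""
    srr_image_m = input_image_m / SRR_UPSCALE
    return Super3DChain(
        input_image_m=input_image_m,
        srr_image_m=srr_image_m,
        srr_dtm_m=srr_image_m * DTM_FACTOR,
        direct_dtm_m=input_image_m * DTM_FACTOR,
    )


if __name__ == "__main__":
    for sensor, pixel_m in [("CaSSIS PAN", 4.0), ("HiRISE RED", 0.25)]:
        c = super3d_resolutions(pixel_m)
        print(f"{sensor}: image {c.input_image_m} m -> SRR {c.srr_image_m} m "
              f"-> SRR-DTM {c.srr_dtm_m} m (direct DTM {c.direct_dtm_m} m)")
    # CaSSIS PAN: image 4.0 m -> SRR 1.0 m -> SRR-DTM 2.0 m (direct DTM 8.0 m)
    # HiRISE RED: image 0.25 m -> SRR 0.0625 m -> SRR-DTM 0.125 m (direct DTM 0.5 m)
```

Under these assumptions, the SRR-DTM grid spacing for both sensors is half the pixel size of the original input image, which is the sense in which the topography is "subpixel-scale". Applying MADNet directly to the original image would instead give a DTM at twice the input pixel size.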

Results

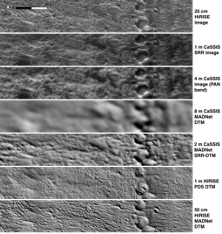

In this work, we qualitatively assess (by visual inspection) both the resulting 2 m/pixel CaSSIS SRR MADNet DTM mosaic and the 12.5 cm/pixel HiRISE SRR MADNet DTMs. Figure 2 shows a small exemplar area (see "Zoom-in Area-1" in Figure 1 for its location) of the CaSSIS SRR MADNet DTM mosaic that overlaps with the HiRISE PDS DTM (DTEEC_039299_1985_047501_1985_L01). It demonstrates the different levels of detail in the 25 cm/pixel HiRISE PDS ORI, the 1 m/pixel CaSSIS SRR image and the 4 m/pixel original CaSSIS PAN band image (MY34_004925_019_2_PAN), and in the shaded relief images of the 8 m/pixel CaSSIS MADNet DTM, the 2 m/pixel CaSSIS SRR MADNet DTM, the 1 m/pixel HiRISE PDS DTM and the 50 cm/pixel HiRISE MADNet DTM.
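The visual comparison relies on shaded relief images of each DTM. This abstract does not state how they were rendered, so the following is only a minimal sketch of a standard Lambertian hillshade, assuming NumPy, a north-up grid whose row index increases southwards, heights and grid spacing in the same units, and an illumination azimuth of 315° and altitude of 45° (common defaults, not values taken from the source). The function name `hillshade` is hypothetical.

```python
import numpy as np


def hillshade(dtm: np.ndarray, cell_size: float,
              azimuth_deg: float = 315.0, altitude_deg: float = 45.0) -> np.ndarray:
    """Lambertian shaded relief in [0, 1] for a north-up DTM (assumed layout)."""
    az = np.radians(azimuth_deg)    # clockwise from north (assumed convention)
    alt = np.radians(altitude_deg)  # elevation of the light above the horizon
    # Height derivatives along rows (southwards) and columns (eastwards).
    dz_drow, dz_dcol = np.gradient(dtm.astype(float), cell_size)
    dz_dx = dz_dcol    # x axis points east
    dz_dy = -dz_drow   # y axis points north, opposite to increasing row index
    # Unit upward surface normal, proportional to (-dz/dx, -dz/dy, 1).
    norm = np.sqrt(dz_dx ** 2 + dz_dy ** 2 + 1.0)
    nx, ny, nz = -dz_dx / norm, -dz_dy / norm, 1.0 / norm
    # Unit vector pointing from the surface towards the light source.
    lx = np.sin(az) * np.cos(alt)
    ly = np.cos(az) * np.cos(alt)
    lz = np.sin(alt)
    # Cosine of the incidence angle; slopes facing away from the light are set to 0.
    return np.clip(nx * lx + ny * ly + nz * lz, 0.0, 1.0)
```

Because the shading depends only on local slope, a DTM with finer grid spacing that also resolves genuine small-scale relief shows more texture in its shaded relief, which makes shaded relief a convenient way to compare levels of detail between DTMs of different resolutions.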

Figure 1. Locations of the exemplar zoom-in areas. Left: 25 cm/pixel HiRISE PDS ORI (ESP_039299_1985_RED_A_01_ORTHO) overlaid on the resulting 1 m/pixel CaSSIS SRR image mosaic [3], together with the 1-sigma (red) and 3-sigma (dark-blue) ellipses of the planned landing site of the Rosalind Franklin ExoMars rover at Oxia Planum; Right: multi-level zoom-in views of the same HiRISE PDS ORI.

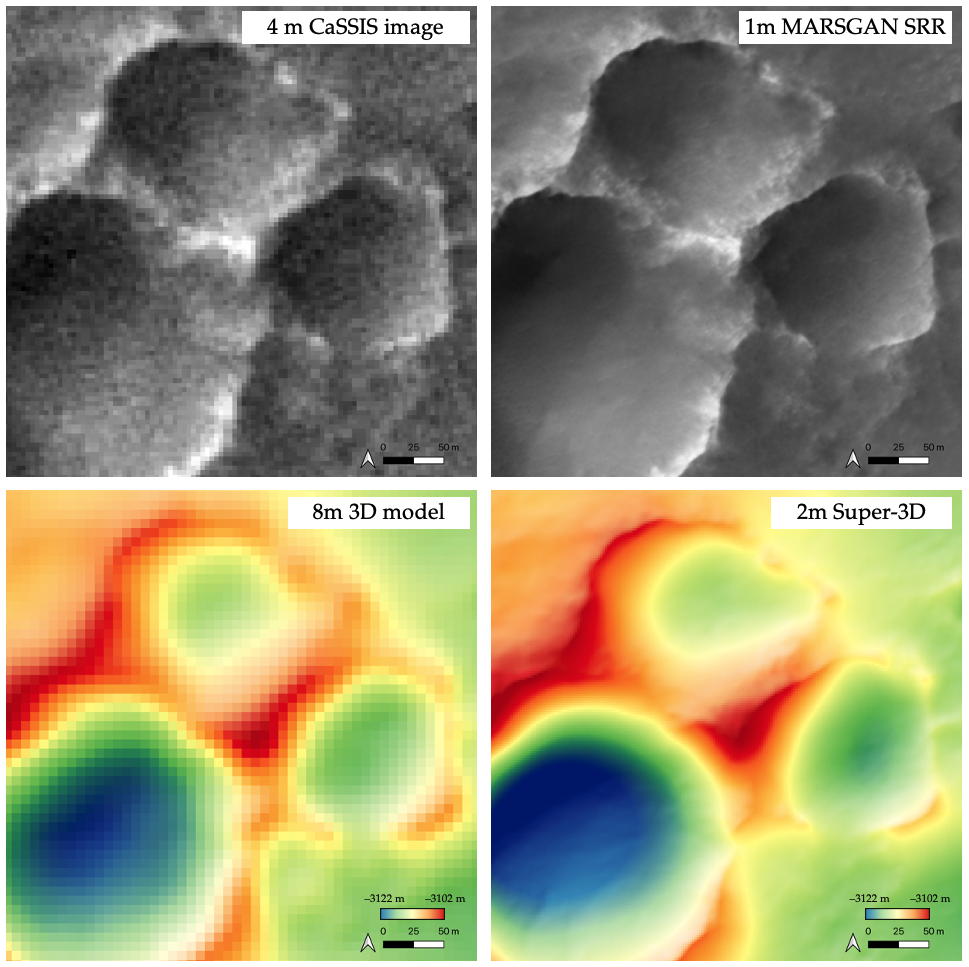

Figure 3 shows an example of Super-3D applied to CaSSIS imagery: SRR is first performed with MARSGAN, and SDE is then applied to the SRR image to produce the Super-3D product.

Figure 2. Visual comparisons of a small exemplar area (i.e., “Zoom-in Area-1”) of the reference 25 cm/pixel HiRISE ORI, the resultant 1 m/pixel CaSSIS SRR image, the input 4 m/pixel CaSSIS PAN band image, shaded relief images of the resultant 8 m/pixel CaSSIS MADNet DTM, the resultant 2 m/pixel CaSSIS SRR MADNet DTM, the reference 1 m/pixel HiRISE PDS DTM, and the reference 50 cm/pixel HiRISE MADNet DTM (from top to bottom).

Conclusions

In this work, we show that coupling MARSGAN SRR with MADNet SDE can produce subpixel-scale topography from single-view CaSSIS and HiRISE images. The resulting CaSSIS and HiRISE SRR MADNet DTMs are published through the ESA Guest Storage Facility (GSF) at https://www.cosmos.esa.int/web/psa/ucl-mssl_meta-gsf. We recommend that readers download the full-size, full-resolution SRR and DTM products to examine their details.

Acknowledgements

The research leading to these results is receiving funding from the UKSA Aurora programme (2018–2021) under grant ST/S001891/1. S.C. is grateful to the French Space Agency CNES for supporting her HiRISE related work.

Figure 3. An example from the same region of Oxia Planum showing the original 4 m/pixel CaSSIS image, the corresponding 1 m/pixel MARSGAN SRR image, and the height models retrieved from each of these images.

References

[1] Tao, Y. et al. Remote Sens. 2021, 13, 1777.

[2] Tao, Y. et al. MADNet 2.0. Remote Sens. 2021, 13, 4220.

[3] Tao, Y. et al. Remote Sens. 2022, 14, 257.

[4] Kirk, R.L. et al. Remote Sens. 2021, 13, 3511.

How to cite: Tao, Y., Muller, J.-P., and Conway, S.: Super-3D: Subpixel-Scale Topography Retrieval of Mars Using Deep Learning, Europlanet Science Congress 2022, Granada, Spain, 18–23 Sep 2022, EPSC2022-473, https://doi.org/10.5194/epsc2022-473, 2022.