Blender modeling and simulation testbed for solar system object imaging and camera performance

- ¹Department of Physics, University of Helsinki, Finland

- ²Institute of Geology of the Czech Academy of Sciences, Prague, Czech Republic

- ³VTT Technical Research Centre of Finland Ltd, Espoo, Finland

- ⁴Department of Geography and Geophysics, University of Helsinki, Finland

Introduction: The performance of cameras for space missions should be verified before flight. Exposure times should be planned for the expected fluxes, the resulting signal-to-noise ratios (SNR) computed, and the overall image quality evaluated. This can be done in a specialized laboratory, but a quick simulation tool to support the design in its early phase would be an asset.

We have developed software for this purpose, based on Blender with Python post-processing. The software [1] is used with the ASPECT hyperspectral camera (Milani CubeSat, ESA’s Hera mission), the HyperScout hyperspectral camera (Hera mission), and the MIRMIS hyperspectral camera unit (ESA’s Comet Interceptor mission).

Modeling and rendering an asteroid or a comet nucleus: Blender is open-source 3D modeling and rendering software that is widely used in many fields [2]. Its capabilities for modeling 3D geometry and rendering the result also make it suitable for simulating how an asteroid or a comet nucleus would appear when imaged.

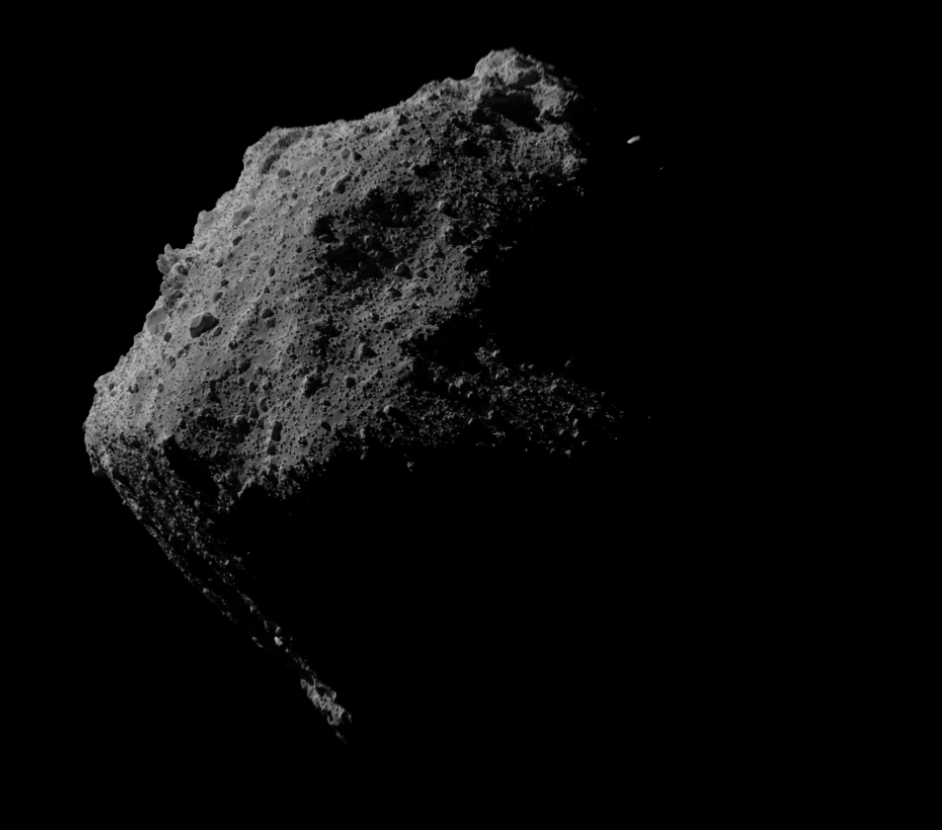

For camera testing purposes, the geometry of the target does not need to be exactly correct. It is sufficient that the overall size and shape match the target and that the surface roughness and boulder distribution are representative. Blender has functions for randomly introducing procedural geometric features on the underlying shape, and we use these to add boulders of different sizes to the target. For the overall target shape, either low-resolution models derived from lightcurve or radar observations or high-resolution shape models from previous missions can be used, such as the models for Bennu, Ryugu, or 67P/Churyumov–Gerasimenko (see Fig. 1).
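Inside Blender, boulder placement is handled by its procedural tools. To illustrate the same idea outside Blender, the sketch below scatters boulders over an arbitrary triangle mesh (faces chosen by area, so placement does not depend on mesh resolution) and draws their diameters from a truncated power-law size-frequency distribution. The power-law form, the slope value, the tetrahedron stand-in for a shape model, and all function names are our assumptions for illustration, not the parameters or code of the software [1].

```python
import math
import random

def sample_boulder_diameters(n, d_min, d_max, slope, rng):
    """Draw n diameters from a power law truncated to [d_min, d_max).

    Assumes a cumulative size-frequency distribution N(>D) proportional to
    D**(-slope) and samples it by inverting its cumulative distribution function.
    """
    a, b = d_min ** -slope, d_max ** -slope
    return [(a - rng.random() * (a - b)) ** (-1.0 / slope) for _ in range(n)]

def triangle_area(p0, p1, p2):
    """Area of a 3D triangle from the cross product of two edge vectors."""
    u = [p1[k] - p0[k] for k in range(3)]
    v = [p2[k] - p0[k] for k in range(3)]
    cross = (u[1] * v[2] - u[2] * v[1],
             u[2] * v[0] - u[0] * v[2],
             u[0] * v[1] - u[1] * v[0])
    return 0.5 * math.sqrt(sum(c * c for c in cross))

def sample_surface_points(vertices, faces, n, rng):
    """Draw n points uniformly over a triangle mesh (faces picked by area)."""
    areas = [triangle_area(*(vertices[i] for i in face)) for face in faces]
    points = []
    for face in rng.choices(faces, weights=areas, k=n):
        p0, p1, p2 = (vertices[i] for i in face)
        s, t = math.sqrt(rng.random()), rng.random()
        # Barycentric weights (1 - s, s(1 - t), s t) are uniform over the triangle.
        points.append(tuple((1 - s) * p0[k] + s * (1 - t) * p1[k] + s * t * p2[k]
                            for k in range(3)))
    return points

if __name__ == "__main__":
    rng = random.Random(42)
    # Hypothetical stand-in for a shape model: a unit tetrahedron.
    verts = [(0, 0, 0), (1, 0, 0), (0, 1, 0), (0, 0, 1)]
    faces = [(0, 1, 2), (0, 1, 3), (0, 2, 3), (1, 2, 3)]
    sizes = sample_boulder_diameters(5, 0.01, 0.1, 2.5, rng)
    for pos, d in zip(sample_surface_points(verts, faces, 5, rng), sizes):
        print(f"boulder d={d:.3f} at ({pos[0]:.2f}, {pos[1]:.2f}, {pos[2]:.2f})")
```

Sampling by face area rather than by vertex keeps the boulder density uniform even when the shape model has uneven triangle sizes.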

We have implemented some common photometric models for the surface material in Blender. Compared with other ray-tracers, Blender has a limitation: it does not really support implementing custom scattering laws. The internal ray-tracing loop uses only Blender’s internal shaders, i.e., the scattering laws for a surface element. In other words, one can implement in Blender any phase function that depends only on the phase angle, but not a disk function that would depend on the incident and scattering directions.
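The split between what Blender's shaders provide and what has to be added can be written out with the separable reflectance model commonly used in disk-resolved photometry (cf. [3]): the reflectance r (e.g., I/F) is an albedo-type scale A_eq times a phase function f of the phase angle α times a disk function d of the incidence angle i and emission angle e. The notation below, the normalization d = 1 at i = e = 0, and the mixing weight w are our assumptions for illustration; letting w vary with α gives a McEwen-type disk function.

```latex
r(i, e, \alpha) = A_{\mathrm{eq}}\, f(\alpha)\, d(i, e, \alpha),
\qquad \mu_0 = \cos i, \quad \mu = \cos e,

d_{\mathrm{L}} = \mu_0, \qquad
d_{\mathrm{LS}} = \frac{2\mu_0}{\mu_0 + \mu}, \qquad
d_{\mathrm{mix}} = w\,\frac{2\mu_0}{\mu_0 + \mu} + (1 - w)\,\mu_0, \quad 0 \le w \le 1.
```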

Fortunately, Blender’s internal shaders include Lommel-Seeliger (‘volume scatter’ in Blender) and Lambertian (‘diffuse BSDF’) scattering, and these can also be mixed, which already covers the common disk functions for dark and bright surfaces quite well. For phase functions, we have implemented the exponential-polynomial, linear-magnitude, and ROLO functions as given in [3]. By combining the disk and phase functions, we can implement the Lommel-Seeliger, ROLO, McEwen, and Lambert photometric functions for the target.
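To check rendered brightness outside Blender, the phase and disk functions can be written as plain reference functions. The sketch below uses the functional forms as we understand them from the photometry literature (the exact parameterizations should be checked against [3]); angles are in degrees, the ROLO term is written in its generic exponential-plus-polynomial form, and all coefficient names and values are hypothetical placeholders.

```python
import math

def phase_exp_poly(alpha_deg, beta, gamma, delta):
    """Exponential-polynomial phase function, equal to 1 at zero phase."""
    a = alpha_deg
    return math.exp(beta * a + gamma * a ** 2 + delta * a ** 3)

def phase_linear_mag(alpha_deg, beta_mag_per_deg):
    """Linear-magnitude phase function: dimming by beta magnitudes per degree."""
    return 10.0 ** (-0.4 * beta_mag_per_deg * alpha_deg)

def phase_rolo(alpha_deg, c0, c1, a_coeffs):
    """ROLO-type term C0 exp(-C1 alpha) + A0 + A1 alpha + ... (up to A4 alpha^4)."""
    poly = sum(a_k * alpha_deg ** k for k, a_k in enumerate(a_coeffs))
    return c0 * math.exp(-c1 * alpha_deg) + poly

def disk_mix(mu0, mu, w):
    """Mix of Lommel-Seeliger (weight w) and Lambert (weight 1 - w) disk functions."""
    return w * 2.0 * mu0 / (mu0 + mu) + (1.0 - w) * mu0

def reflectance(a_eq, phase_value, disk_value):
    """Separable model r = A_eq * f(alpha) * d(i, e, alpha)."""
    return a_eq * phase_value * disk_value

if __name__ == "__main__":
    i, e, alpha = 30.0, 20.0, 40.0  # degrees; hypothetical geometry
    mu0, mu = math.cos(math.radians(i)), math.cos(math.radians(e))
    f = phase_exp_poly(alpha, -0.03, 1e-4, -1e-6)  # placeholder coefficients
    print(reflectance(0.05, f, disk_mix(mu0, mu, w=0.8)))
```

In the Blender setup described above, only the phase-function part would need custom work; the disk part corresponds to the built-in shaders and their mix.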

The Blender part of our software outputs ‘ideal’, noiseless images for a given observing geometry, camera field of view, detector resolution, and surface albedo. Our post-processing step then introduces the effects arising from the physical characteristics of the camera and the detector.

Camera performance simulation: The images can be converted to real physical units (I/F, watts, photons, electron counts on the CCD). While the RGB channel values in the Blender images have arbitrary units and scale, one can render a Lambertian disk in backscattering geometry with the same illumination intensity and target-camera distance as in the actual object image. This calibration procedure gives the conversion from RGB values to I/F. Taking into account the target’s distance from the Sun, we can further convert these into radiant flux in watts.
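A minimal sketch of this calibration chain follows. It assumes that Blender pixel values are linear in radiance (for instance, floating-point OpenEXR output rather than a display-transformed 8-bit image) and uses the convention I/F = πL/E_sun, with L the radiance and E_sun the solar irradiance at the target. The function names, the aperture area, and the pixel solid angle are hypothetical; optics and filter losses are left to the later steps.

```python
import math

def rgb_to_if(pixel_value, reference_value, reference_if):
    """Scale a linear pixel value to I/F with the calibration render.

    reference_value: pixel value of the Lambertian calibration disk rendered with
    the same illumination intensity and target-camera distance; reference_if: the
    I/F of that disk in that geometry (a face-on Lambertian disk of albedo A at
    zero phase angle has I/F = A).
    """
    return pixel_value / reference_value * reference_if

def if_to_radiance(i_over_f, irradiance_1au, heliocentric_distance_au):
    """Radiance from I/F via I/F = pi * L / E_sun, with E_sun scaled as 1/r^2.

    Units follow irradiance_1au: W m^-2 gives W m^-2 sr^-1, and a spectral
    irradiance (W m^-2 nm^-1) gives a spectral radiance.
    """
    e_sun = irradiance_1au / heliocentric_distance_au ** 2
    return i_over_f * e_sun / math.pi

def pixel_power(radiance, aperture_area_m2, pixel_solid_angle_sr):
    """Radiant flux [W] reaching one pixel, before optics and filter losses."""
    return radiance * aperture_area_m2 * pixel_solid_angle_sr

if __name__ == "__main__":
    i_over_f = rgb_to_if(0.37, 0.92, reference_if=0.05)  # hypothetical values
    radiance = if_to_radiance(i_over_f, irradiance_1au=1361.0, heliocentric_distance_au=1.5)
    # Hypothetical optics: 5.5 um pixel pitch, 50 mm focal length, 1 cm^2 aperture.
    print(pixel_power(radiance, 1e-4, (5.5e-6 / 0.05) ** 2))
```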

For a spectral instrument, we need a spectral image, i.e., a datacube. Currently, we do not vary the parameters of the photometric function with wavelength. This implies that the received flux depends on wavelength only linearly, through the wavelength-dependent albedo of the target, so a spectral datacube can be obtained simply by multiplying a single rendered image by the normalized spectrum of the target’s surface material.
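Under this assumption, the datacube is an outer product of the rendered image and the spectrum. A minimal sketch with plain nested lists (an array library would normally be used) follows; normalizing the spectrum to 1 in the band that the rendered albedo represents is our reading of "normalized", and the function names are hypothetical.

```python
def normalize_spectrum(spectrum, reference_band):
    """Scale a reflectance spectrum to 1 in the band that the rendered albedo represents."""
    ref = spectrum[reference_band]
    return [value / ref for value in spectrum]

def make_datacube(image, spectrum):
    """cube[row][col][band] = image[row][col] * spectrum[band].

    Valid only while the photometric-function parameters do not change with
    wavelength, so the albedo spectrum enters as a pure scale factor.
    """
    return [[[pixel * s for s in spectrum] for pixel in row] for row in image]

if __name__ == "__main__":
    image = [[0.0, 0.02], [0.03, 0.04]]  # hypothetical I/F image
    spectrum = normalize_spectrum([0.040, 0.045, 0.050], reference_band=1)
    cube = make_datacube(image, spectrum)
    print(cube[1][1])
```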

With the spectral flux known for each image pixel, we can apply the transmission of the camera optics and of the spectral filter (i.e., the Fabry-Pérot interferometer in the ASPECT and MIRMIS cameras). The power in watts can then be converted into photon counts at each wavelength, and finally the detector quantum efficiency curve gives the number of photoelectrons generated at the detector.
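Per spectral band, this step is a product of transmissions and efficiencies combined with the photon-energy conversion E = hc/λ. A minimal sketch follows, assuming each band is narrow enough to be treated as monochromatic at its central wavelength; the function and argument names are hypothetical, and the efficiency curves would come from the instrument's own characterization.

```python
PLANCK_H = 6.62607015e-34       # Planck constant, J s (exact in SI)
SPEED_OF_LIGHT = 299_792_458.0  # m/s (exact in SI)

def photons_per_second(power_w, wavelength_m):
    """Photon rate for monochromatic power: N = P * lambda / (h c)."""
    return power_w * wavelength_m / (PLANCK_H * SPEED_OF_LIGHT)

def electrons_per_second(band_powers_w, wavelengths_m, t_optics, t_filter, qe):
    """Per-band photoelectron rates at the detector.

    band_powers_w: radiant flux on the pixel in each band, before losses;
    t_optics, t_filter, qe: per-band optics transmission, filter transmission
    and detector quantum efficiency (all fractions between 0 and 1).
    """
    return [photons_per_second(p, lam) * to * tf * q
            for p, lam, to, tf, q in zip(band_powers_w, wavelengths_m,
                                         t_optics, t_filter, qe)]
```

For example, 1e-15 W at 500 nm corresponds to roughly 2.5e3 photons per second before any losses.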

Once the electron rate at the detector has been determined, we can introduce a representative dark-field pattern, dark current noise (Poisson), readout noise (Gaussian), and photon shot noise (Poisson) for a given exposure time. This yields the final simulated camera image, or hyperspectral datacube, of the target together with an SNR estimate.
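A minimal per-pixel sketch of these noise terms follows. It also gives the usual CCD-type expression SNR = S / sqrt(S + D + σ_r²), where S and D are the signal and dark electrons collected during the exposure and σ_r is the read noise, and inverts that expression for exposure planning. Python's standard library has no Poisson sampler, so the sketch uses Knuth's multiplication method for small means and a normal approximation for large ones. The fixed dark-pattern offset, all names, and this particular SNR form are our assumptions, not necessarily what the software [1] implements.

```python
import math
import random

def poisson(lam, rng):
    """Poisson sample: Knuth's method for small means, normal approximation otherwise."""
    if lam <= 0:
        return 0
    if lam < 30:
        limit, k, p = math.exp(-lam), 0, 1.0
        while True:
            p *= rng.random()
            if p <= limit:
                return k
            k += 1
    return max(0, round(rng.gauss(lam, math.sqrt(lam))))

def simulate_pixel(signal_rate, dark_rate, read_noise, exposure_s,
                   dark_offset=0.0, rng=random):
    """Simulated electron count of one pixel for one exposure.

    signal_rate, dark_rate: mean electrons per second; read_noise: Gaussian
    sigma in electrons; dark_offset: hypothetical fixed dark-pattern level
    of this pixel, in electrons.
    """
    signal = poisson(signal_rate * exposure_s, rng)  # photon shot noise
    dark = poisson(dark_rate * exposure_s, rng)      # dark current shot noise
    return dark_offset + signal + dark + rng.gauss(0.0, read_noise)

def snr(signal_rate, dark_rate, read_noise, exposure_s):
    """SNR = S / sqrt(S + D + read_noise^2), with the dark pattern assumed subtracted."""
    s = signal_rate * exposure_s
    return s / math.sqrt(s + dark_rate * exposure_s + read_noise ** 2)

def exposure_for_snr(target_snr, signal_rate, dark_rate, read_noise):
    """Exposure time reaching target_snr, from the positive root of
    S^2 t^2 - q (S + D) t - q R^2 = 0 with q = target_snr^2."""
    q = target_snr ** 2
    a = signal_rate ** 2
    b = -q * (signal_rate + dark_rate)
    c = -q * read_noise ** 2
    return (-b + math.sqrt(b * b - 4 * a * c)) / (2 * a)
```

For instance, exposure_for_snr(100.0, signal_rate, dark_rate, read_noise) gives the shortest exposure that reaches SNR 100 for a pixel with the given electron rates, which ties the simulation back to exposure-time planning.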

Discussion: The simulated imaging and camera performance tool for Solar System objects (SSOs) can be used to produce expected camera data, with realistic noise, for space mission and instrument design. Especially for (hyper)spectral cameras, the tool can be used to verify how well different spectral and/or spatial details could be resolved with given exposures, noise levels, and optics/camera transmissions.

We have started by applying the tool to airless, relatively homogeneous targets such as asteroids and comet nuclei. Variability in surface properties (local albedo or color, for example) can be introduced using Blender’s procedural modeling tools. Simple atmospheres and cometary gas/dust environments could be added in the future; to some extent, this is done in the SISPO project [4]. We verify our results against the NASA Planetary Spectrum Generator [5]. For visualizing views of an asteroid or a comet along a given spacecraft flight path, possibly provided as a SPICE kernel, we acknowledge the shapeViewer [6] tool.

Figure 1: Blender visualization of the high-resolution shape model of asteroid Ryugu with boulders added to the surface.

References: [1] Git project for the Blender/Python imaging simulations, https://bitbucket.org/planetarysystemresearch/workspace/projects/SSO_PHOTOMETRY. [2] Blender software, https://www.blender.org/. [3] Golish D. R. et al. (2021) Icarus, 357, 113724. [4] Pajusalu M. et al. (2021) arXiv, astro-ph.IM, 2105.06771. [5] NASA Planetary Spectrum Generator, https://psg.gsfc.nasa.gov/. [6] Vincent J.-B. (2014) Asteroids, Comets, Meteors (ACM) conference, Helsinki.

How to cite: Penttilä, A., Palos, M. F., Näsilä, A., and Kohout, T.: Blender modeling and simulation testbed for solar system object imaging and camera performance, Europlanet Science Congress 2022, Granada, Spain, 18–23 Sep 2022, EPSC2022-788, https://doi.org/10.5194/epsc2022-788, 2022.