Sensing Modalities on a UAS for Future Science Missions

- Southwest Research Institute, Robotics Department, United States of America (logan.elliott@swri.org)

NASA’s Ingenuity helicopter provided the first experimental evidence of the utility of uncrewed aerial systems (UAS) for future non-terrestrial missions. Although Ingenuity’s data capture was relatively simple, such as the navigation camera imagery shown in Figure 1, it proved that operating a UAS on another planet is both possible and useful. Future missions such as Dragonfly aim to broaden the data a UAS can capture by adding more metrology and spectroscopy sensors. However, the sensors that robotic systems already use for navigation can also gather scientific data. The mobility of a UAS also allows sensors to be positioned for, or to capture, events that occur within short temporal windows. This abstract discusses various perception sensors, including lidar, time-of-flight sensors, and visible-light, thermal, and neuromorphic cameras, along with their applications in both robotic systems and scientific missions.

Figure 1. View from Ingenuity’s navigation camera

Due to the growth of the commercial UAS industry, terrestrial applications have become a test bed for future space applications. Compared to terrestrial sensors, however, space-rated sensors must meet much stricter requirements to survive the journey, and stricter still to perform in situ. Radiation, shock, and vibration can damage fragile sensors. Size, weight, and power (SWaP) constraints limit the potential sensor output. When considering future space science missions, it is also important to account for the potential damage that could be done to unstudied non-terrestrial environments. For example, passive sensors (like most cameras) do not emit any radiation into the environment, but their active counterparts (e.g., current lidar and time-of-flight [TOF] sensors) emit infrared radiation. The danger of low-wattage infrared or similar radiation in an environment is likely minimal, but its effects must be considered when analyzing potential hazards.

Visible-spectrum cameras are the most common perception sensors to date, both terrestrially and in space. Over the last 80 years, cameras have proven robust enough to survive the journey and perform well in space. However, these sensors are not without drawbacks. The downsides of cameras are most evident in mapping applications, where the maps often have perspective issues, discontinuities, and improper stitching. Cameras do an excellent job of providing a 2D representation of an environment, but recovering depth is particularly challenging because scale is hard to extract from a 2D image. For science missions, a wealth of camera applications has been proposed, ranging from joint planners that can communicate points of interest back to base to algorithms that can extract nonstandard data for future examination. These examples illustrate several of the ways that cameras can be applied on UAS in space.

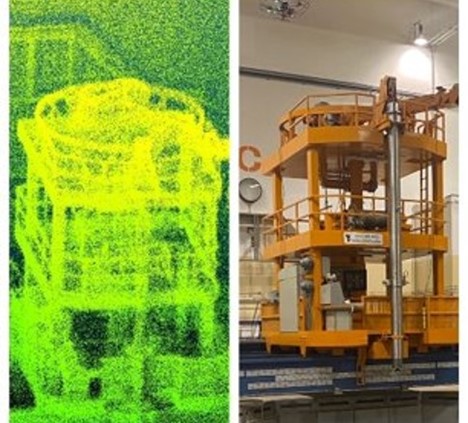

Lidar is the second most popular sensor in terrestrial UAS applications and second only to cameras in proposals for space missions. It provides a dense 3D point cloud at ranges of 1–200 m with a refresh period in the tens of milliseconds. These point clouds are often used to create dense, high-resolution maps that are challenging to produce with traditional cameras (shown in Figure 2). Unfortunately, an off-the-shelf lidar consumes much more power than a camera. Additionally, most conventional lidars with a wide field of view rotate mechanically, and such units often fail the vibration and shock testing needed for space applications. Solid-state lidars and space-rated lidars are possible solutions but still need more time to be proven. TOF sensors, by comparison, are considerably lighter and draw considerably less power than lidar but have a much shorter range (1–2 m vs. 1–200 m).
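Both lidar and TOF sensors infer range from the travel time of emitted light. The following is a minimal sketch, assuming an idealized direct time-of-flight measurement in vacuum (many TOF cameras instead measure phase shift, and the function names here are hypothetical), that relates the ranges quoted above to the round-trip times a sensor would need to resolve:

```python
# Idealized direct time-of-flight relation: range = c * t / 2.
# Assumption: a single pulse travels to the target and back in vacuum.

C_M_PER_S = 299_792_458.0  # speed of light, exact by SI definition


def round_trip_time_s(range_m: float) -> float:
    """Time for a pulse to reach a target at range_m and return."""
    return 2.0 * range_m / C_M_PER_S


def range_from_time_m(round_trip_s: float) -> float:
    """Range implied by a measured round-trip time."""
    return C_M_PER_S * round_trip_s / 2.0


if __name__ == "__main__":
    # Ranges taken from the text: 1-2 m for TOF sensors, up to 200 m for lidar.
    for r in (1.0, 2.0, 200.0):
        print(f"{r:6.1f} m -> {round_trip_time_s(r) * 1e9:9.2f} ns round trip")
```

Under these assumptions, a target at 200 m corresponds to a round trip of roughly 1.3 microseconds, while a target at 1 m returns in under 7 nanoseconds.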

Figure 2. UAS-captured point cloud shown next to the original structure

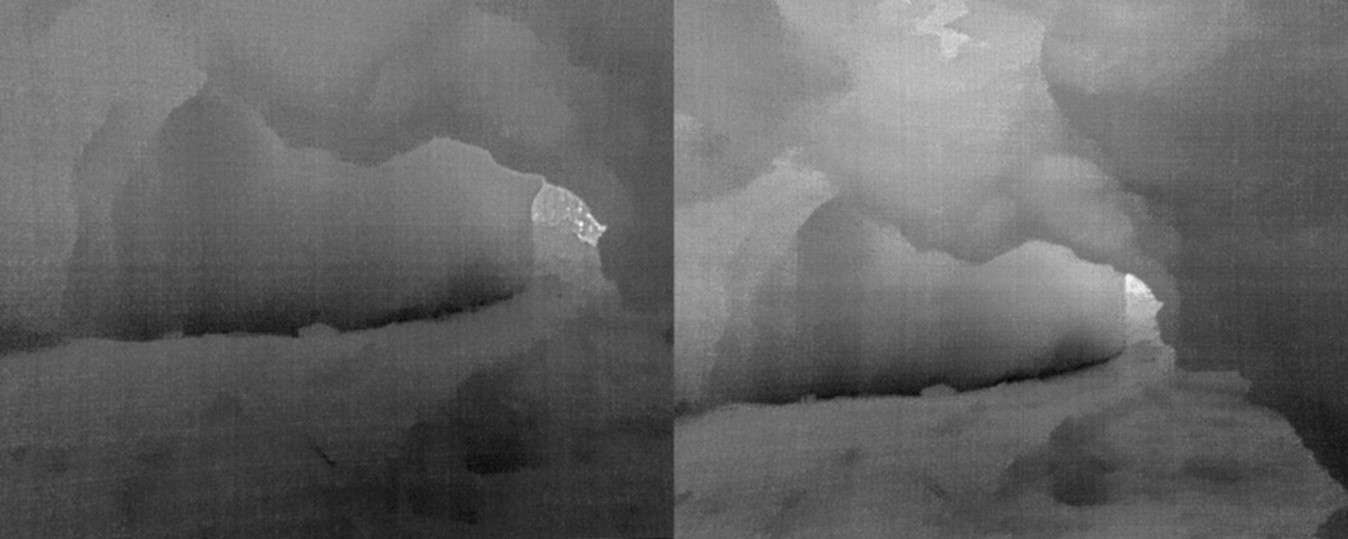

SwRI hypothesized that thermal cameras would make better perception sensors than traditional visible-light cameras in low-light applications like caves. To test this hypothesis, SwRI built a thermal stereo simultaneous localization and mapping (SLAM) algorithm.

Figure 3. Thermal stereo

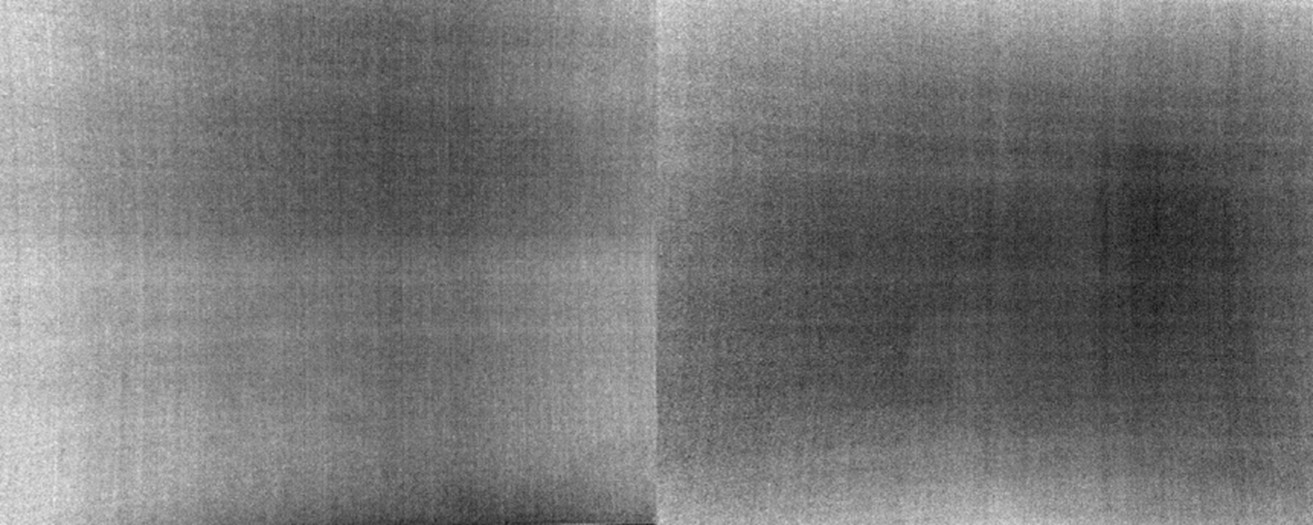

This SLAM algorithm proved effective at the entrance of the cave, where the thermal cameras captured much more data than visible-light cameras (as shown in Figure 3). However, deeper in the cave, in the twilight and dark zones, caves reach thermal equilibrium. Figure 4 demonstrates that once thermal equilibrium is reached, the cameras provide no useful information. Illuminating the area for a thermal camera would be much less power-efficient than using traditional lighting payloads for visible-light cameras. Nevertheless, one advantage of thermal cameras is that they could be used to look for interesting thermal features, such as water seepage or organisms of sufficient size, in areas that have not reached thermal equilibrium.

Figure 4. Thermal equilibrium with thermal cameras in a cave

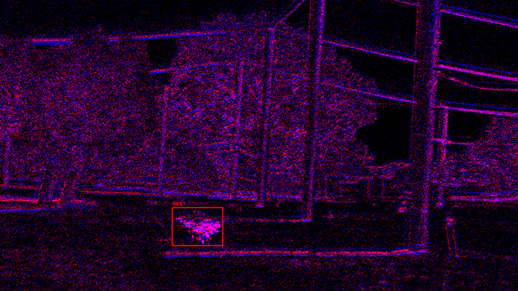

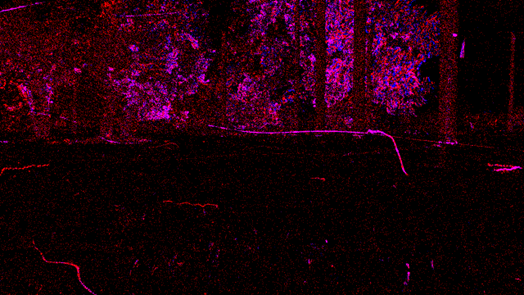

Neuromorphic cameras have similar advantages to thermal and RGB cameras but provide a much different type of data. A neuromorphic camera reports a per-pixel update every time that pixel changes. This leads to a near-continuous flow of data as pixels in the image change and a faster update rate than traditional rolling- or global-shutter cameras. Aggregating the pixel changes results in images like Figure 5. The downside is that the volume of data sent can quickly overwhelm a communication bus or processor. Figure 6 shows the path of bugs moving in the image. Fast-moving objects are much easier to identify and track than with traditional cameras. Current computer vision techniques parse the data by aggregating it into traditional images, negating many of the advantages of the neuromorphic data. Techniques that take full advantage of the neuromorphic data are still experimental but have strong potential for future applications.
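The aggregation step described above can be sketched as follows. This is a minimal illustration, not the method used for the figures: the `Event` layout (pixel coordinates, a microsecond timestamp, and a +1/-1 polarity) and the `accumulate` function are hypothetical, and real sensors and drivers define their own formats.

```python
from typing import Iterable, List, NamedTuple


class Event(NamedTuple):
    """One per-pixel change report (hypothetical layout)."""
    x: int
    y: int
    t_us: int      # timestamp in microseconds
    polarity: int  # +1 = pixel got brighter, -1 = pixel got darker


def accumulate(events: Iterable[Event], width: int, height: int,
               t_start_us: int, t_end_us: int) -> List[List[int]]:
    """Sum event polarities per pixel over the window [t_start_us, t_end_us)."""
    frame = [[0] * width for _ in range(height)]
    for ev in events:
        if t_start_us <= ev.t_us < t_end_us:
            frame[ev.y][ev.x] += ev.polarity
    return frame


if __name__ == "__main__":
    events = [
        Event(0, 0, 10, +1),
        Event(1, 0, 20, +1),
        Event(1, 0, 30, -1),   # cancels the previous event at this pixel
        Event(2, 1, 40, +1),
        Event(2, 1, 1500, +1), # falls outside the window below
    ]
    for row in accumulate(events, width=3, height=2, t_start_us=0, t_end_us=1000):
        print(row)
```

Collapsing events into a fixed window discards the timing of each event within that window, which is the loss of information the paragraph above attributes to image-based processing.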

Figure 5. UAS tracking another UAS via neuromorphic camera

Figure 6. Faster-update neuromorphic data with a bug moving in the frame

Given the tight SWaP constraints of future UAS missions, it is important to maximize the utility of the payloads. Considering how sensors contribute to scientific missions as well as to robotic perception can create a more holistic and useful platform. Science missions will be able to use this dual nature of robot perception sensors to gather data on non-terrestrial environments more effectively.

How to cite: Elliott, L. and Wagner, A.: Sensing Modalities on a UAS for Future Science Missions, Europlanet Science Congress 2024, Berlin, Germany, 8–13 Sep 2024, EPSC2024-138, https://doi.org/10.5194/epsc2024-138, 2024.