- 1Centre National d'Etudes Spatiales (CNES), France (nicolas.theret@cnes.fr)

- 2THALES Services Numériques, France

INTRODUCTION

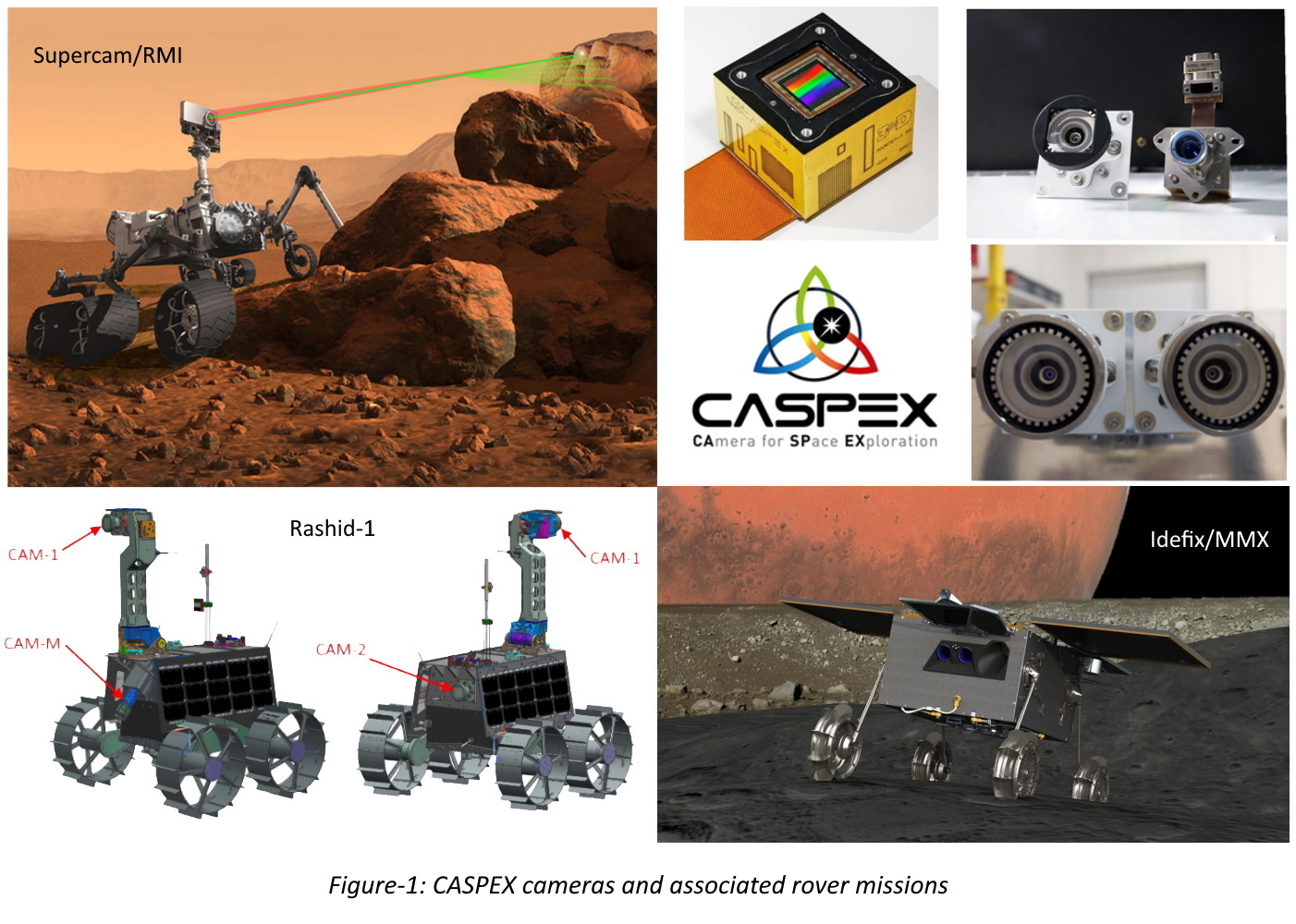

To meet the needs of space exploration missions, CNES and its industrial partner 3D+ have developed a series of dedicated cameras called CASPEX[1] (Figure-1). These cameras consist of two parts:

- A detector unit with different numbers of pixels and spectral ranges, as well as filters deposited on it (color, polarization),

- An optical unit mounted on the detector and tailored to the camera's function, such as wide-angle optics for navigation, or tilted proximity optics to study the composition of the ground at sub-millimeter resolution.

Such cameras are used on Supercam/RMI aboard Perseverance[2] and will be used on the MBRSC Rashid[3][5] rovers and on the CNES/DLR Idefix[4] rover aboard the JAXA MMX mission.

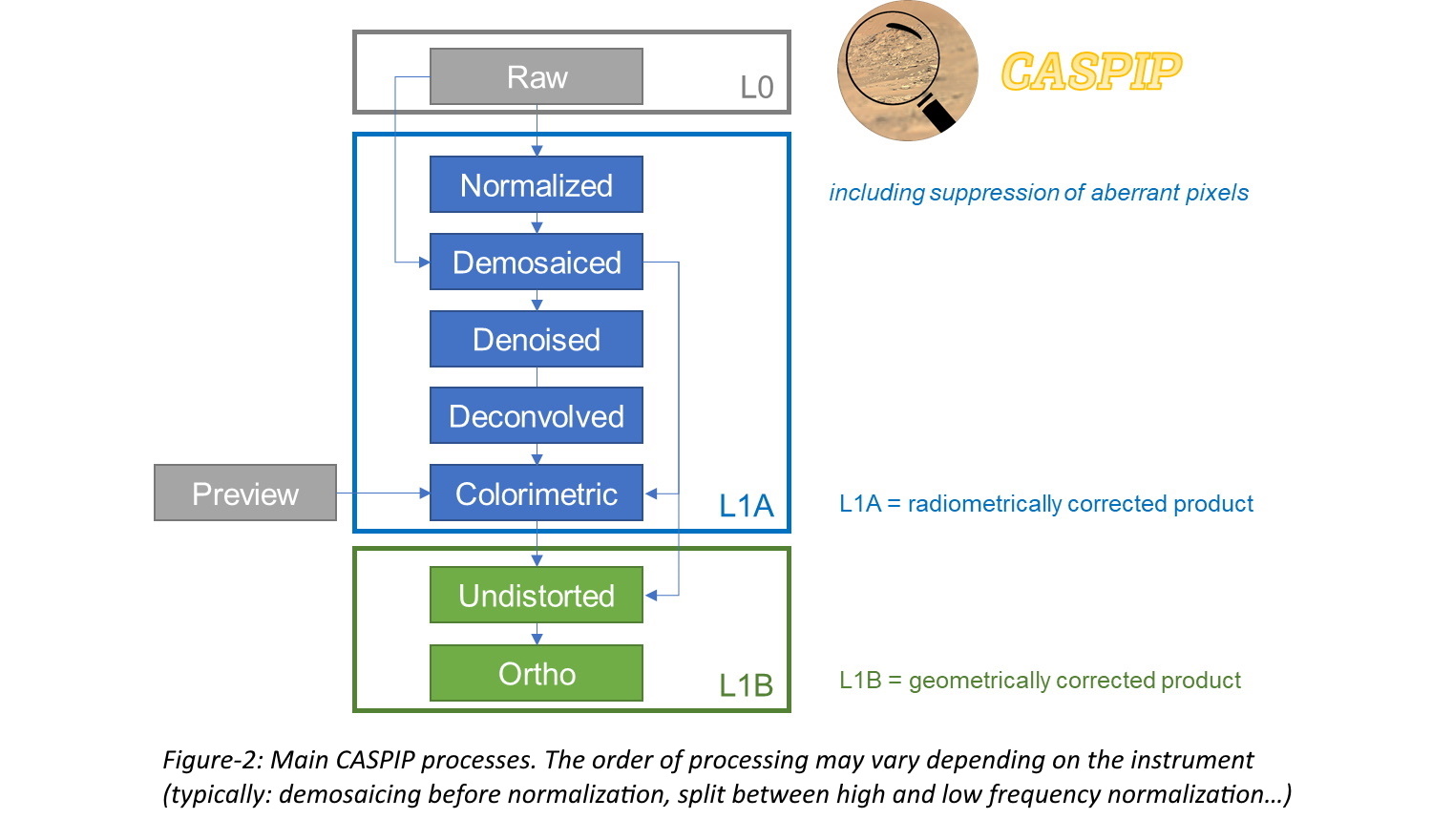

CASPEX Image Processing (CASPIP) is a tool used for the first-level correction processing of the raw images captured by these cameras (Figure-2).

CASPIP SOFTWARE DESCRIPTION

CASPIP is an “easy-to-use” Python library developed at CNES and distributed on https://www.connectbycnes.fr/en/caspip. It works on Linux and Windows. The configuration parameters are grouped in a “camera” object, so several cameras can be defined in one run. Processing is applied step by step, and each call has the following simple form:

processed_image = caspip_function(my_camera, image_to_process, (optional parameters))

In addition to the main functions shown in Figure-2, CASPIP provides additional tools, such as direct and inverse localization functions and distance measurements, as well as a GUI visualization tool.

CASPIP can also be used for more advanced image processing[5], such as 3D reconstruction.

MAIN FUNCTIONS

Radiometric processing

CASPEX detectors have a PRNU (Photo Response Non-Uniformity) of less than 1.35%[1] and very few defective pixels[1]. Despite such low levels, CASPIP can, if needed, use ground data to correct PRNU effects and to interpolate missing pixels in the image.
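As an illustration of these two corrections, here is a minimal NumPy sketch. It does not reproduce the CASPIP API: the function names `correct_prnu` and `interpolate_defective`, the per-pixel `gain_map` and the boolean `defect_mask` are all hypothetical, and the neighbour averaging is only one simple way to fill a missing pixel.

```python
import numpy as np

def correct_prnu(raw, gain_map):
    """Divide out the per-pixel relative gain (assumed measured on the ground)."""
    return raw.astype(np.float64) / gain_map

def interpolate_defective(image, defect_mask):
    """Replace each flagged pixel by the mean of its valid 3x3 neighbours."""
    out = image.astype(np.float64).copy()
    rows, cols = image.shape
    for r, c in zip(*np.nonzero(defect_mask)):
        r0, r1 = max(r - 1, 0), min(r + 2, rows)
        c0, c1 = max(c - 1, 0), min(c + 2, cols)
        window = image[r0:r1, c0:c1]
        valid = ~defect_mask[r0:r1, c0:c1]  # excludes the defective pixel itself
        if valid.any():
            out[r, c] = window[valid].mean()
    return out
```

On a detector carrying a color filter array, the interpolation would presumably need to use neighbours of the same color only; the sketch above assumes a monochrome image.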

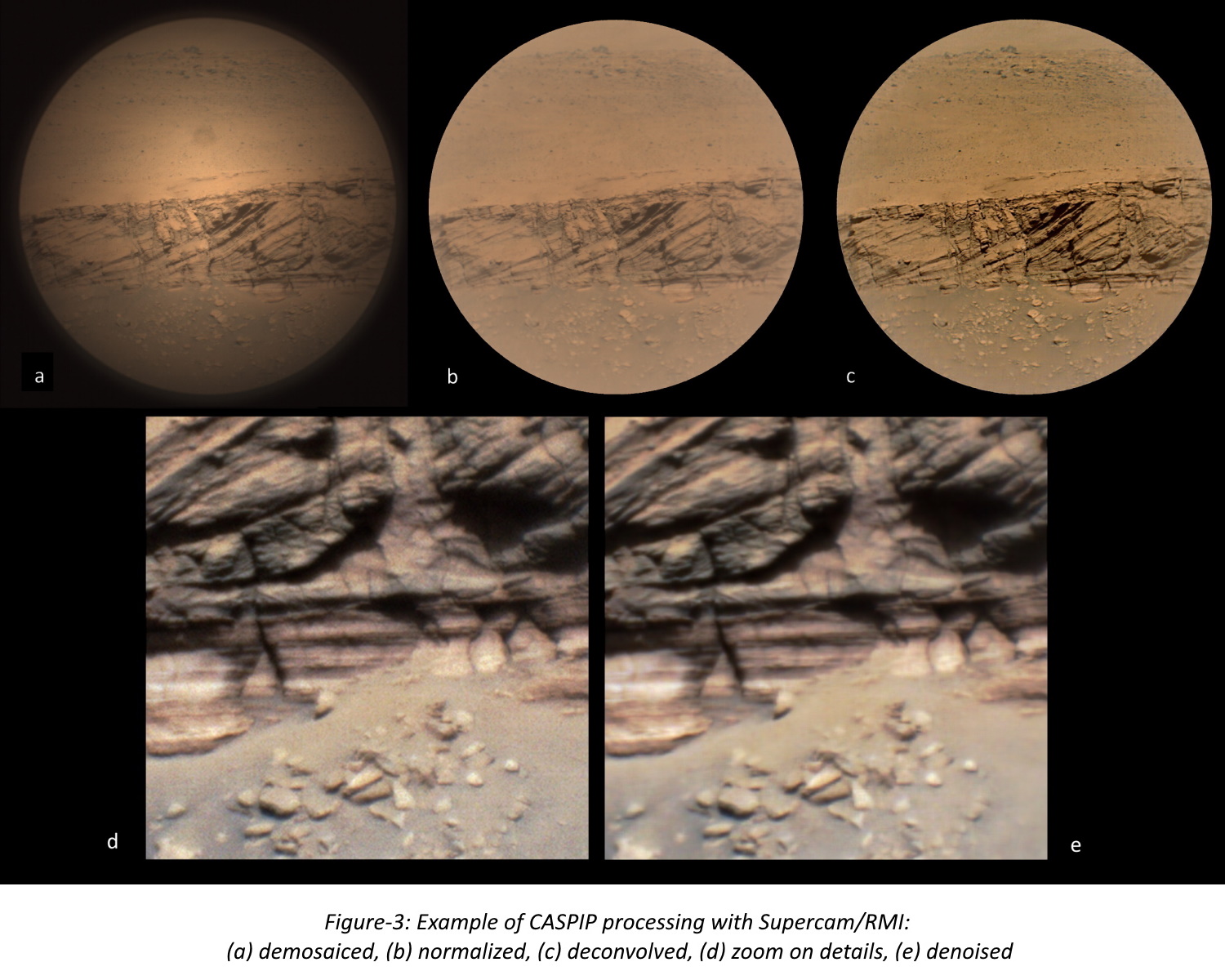

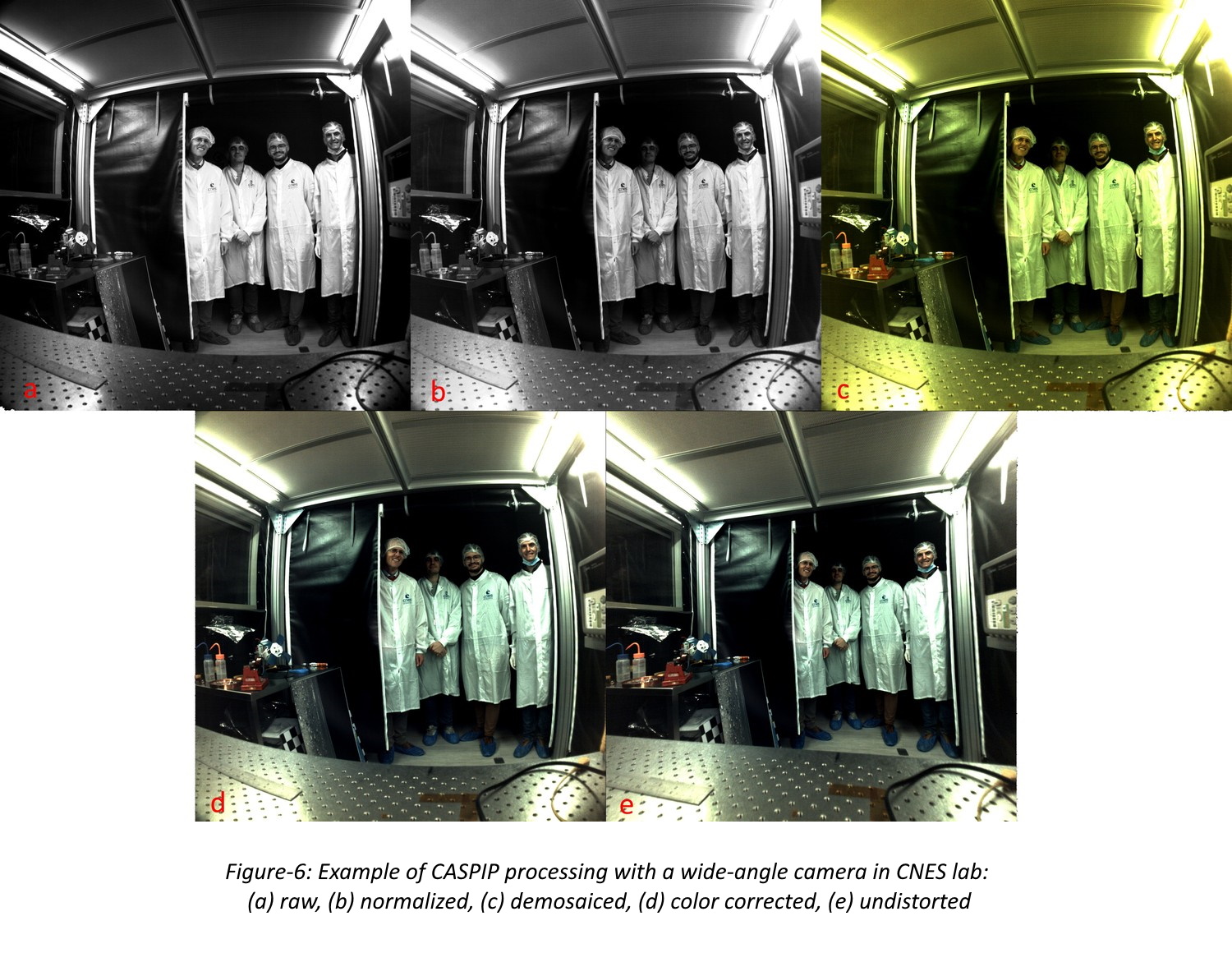

The wide-angle cameras induce a strong low-frequency vignetting effect (Figure-6, a→b), which can also be observed on other cameras, such as Supercam/RMI (Figure-3, a→b). The vignetting is corrected by dividing the image by a flat-field reference image.
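The flat-field division can be sketched in a few lines of NumPy. The function name `flat_field_correct`, the normalisation of the flat by its mean and the `eps` guard against division by zero are assumptions made for this example, not CASPIP behaviour.

```python
import numpy as np

def flat_field_correct(image, flat, eps=1e-6):
    """Divide the image by a flat-field reference normalised to unit mean."""
    flat = flat.astype(np.float64)
    norm = flat / flat.mean()  # unit-mean flat keeps the overall signal level
    return image.astype(np.float64) / np.maximum(norm, eps)

# Synthetic check: a uniform scene seen through a radial falloff.
yy, xx = np.mgrid[-1:1:64j, -1:1:64j]
vignette = 1.0 / (1.0 + 0.5 * (xx**2 + yy**2)) ** 2
observed = 200.0 * vignette
corrected = flat_field_correct(observed, flat=1000.0 * vignette)
```

In this synthetic case `corrected` is uniform again, because the scene and the flat share the same falloff.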

Typical color filter arrays sample the spectral bands sparsely using a Bayer pattern of alternating red, green and blue pixels. A ‘demosaicing’ step is therefore needed so that the final RGB image has the same resolution as the detector. Several methods can be used for demosaicing, the most common being based on interpolation. CASPIP implements the Hamilton-Adams[7] (Figure-6, b→c) and Malvar[8] algorithms by default; other techniques, such as Bayesian approaches[6], can be implemented instead, at the cost of increased computational complexity.
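To make the interpolation idea concrete, the sketch below implements plain bilinear demosaicing, which is deliberately simpler than the Hamilton-Adams and Malvar algorithms named above and is not the CASPIP implementation. The function names and the `pattern` argument (the 2x2 Bayer tile, read row by row) are hypothetical.

```python
import numpy as np

def _weighted_sum3(a):
    """3x3 weighted sum with bilinear weights and zero padding."""
    weights = np.array([[1, 2, 1], [2, 4, 2], [1, 2, 1]], dtype=np.float64)
    padded = np.pad(a, 1)
    rows, cols = a.shape
    out = np.zeros((rows, cols))
    for dr in range(3):
        for dc in range(3):
            out += weights[dr, dc] * padded[dr:dr + rows, dc:dc + cols]
    return out

def demosaic_bilinear(raw, pattern="RGGB"):
    """Return an (H, W, 3) RGB image from a Bayer mosaic (H, W >= 2)."""
    raw = raw.astype(np.float64)
    rows, cols = raw.shape
    channel = {"R": 0, "G": 1, "B": 2}
    masks = np.zeros((3, rows, cols))
    for k, letter in enumerate(pattern):
        masks[channel[letter], k // 2::2, k % 2::2] = 1.0
    rgb = np.zeros((rows, cols, 3))
    for c in range(3):
        # Normalised convolution: average only the pixels that carry channel c.
        interpolated = _weighted_sum3(raw * masks[c]) / _weighted_sum3(masks[c])
        # Keep measured samples untouched, fill the others by interpolation.
        rgb[..., c] = np.where(masks[c] == 1.0, raw, interpolated)
    return rgb
```

Bilinear interpolation tends to produce color fringes along sharp edges, which is one reason edge-aware methods such as those cited above are usually preferred.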

Knowing the Modulation Transfer Function (MTF) of the instrument, preferably from in-situ measurements, it is possible to add a deconvolution step to reverse instrument blurring and sharpen the final images. Depending on the camera and the optical design, this step may be necessary, as for Supercam/RMI (Figure-3, b→c), where the image is oversampled relative to the optical resolution.

Before deconvolving, it is necessary to reduce the noise, which would otherwise be amplified by the deconvolution: CASPIP implements the NL-Bayes denoising technique[6] (Figure-3, d→e).
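The text does not state which deconvolution method CASPIP uses. As one possible illustration, the sketch below applies a Wiener filter in the Fourier domain, whose `nsr` term (an assumed noise-to-signal ratio) limits the noise amplification mentioned above. The Gaussian MTF model and both function names are hypothetical stand-ins for a measured MTF.

```python
import numpy as np

def gaussian_mtf(shape, sigma_px):
    """MTF of a Gaussian blur of width sigma_px, on the FFT frequency grid."""
    fy = np.fft.fftfreq(shape[0])[:, None]
    fx = np.fft.fftfreq(shape[1])[None, :]
    return np.exp(-2.0 * (np.pi * sigma_px) ** 2 * (fx**2 + fy**2))

def wiener_deconvolve(image, mtf, nsr=1e-2):
    """Wiener deconvolution for a real, non-negative MTF (zero phase assumed)."""
    spectrum = np.fft.fft2(image)
    wiener = mtf / (mtf**2 + nsr)  # conj(H) / (|H|^2 + NSR) with H real
    return np.real(np.fft.ifft2(spectrum * wiener))

# Synthetic check: blur a random scene with the model MTF, then restore it.
rng = np.random.default_rng(0)
scene = rng.random((32, 32))
mtf = gaussian_mtf(scene.shape, sigma_px=1.0)
blurred = np.real(np.fft.ifft2(np.fft.fft2(scene) * mtf))
restored = wiener_deconvolve(blurred, mtf, nsr=1e-6)
```

A larger `nsr` gives a smoother, less sharpened result; the small value used here is only appropriate because the synthetic example is noise-free.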

Finally, a colorimetric correction has to be applied for the image to be expressed in a standard camera-independent color space. This is done by applying a correction matrix calculated following the ISO/CIE 11664-3 standard.
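Applying such a matrix amounts to one 3x3 product per pixel. The sketch below shows this, together with one common way of estimating the matrix by least squares from color-chart patches. That fitting step is an assumption for illustration, not the procedure of the standard or of CASPIP, and the function names and numerical values are hypothetical.

```python
import numpy as np

def apply_color_matrix(rgb, ccm):
    """Apply a 3x3 correction matrix to every pixel of an (H, W, 3) image."""
    return np.einsum("ij,hwj->hwi", ccm, rgb.astype(np.float64))

def fit_color_matrix(camera_patches, reference_patches):
    """Least-squares 3x3 matrix mapping (N, 3) camera values to references."""
    solution, *_ = np.linalg.lstsq(camera_patches, reference_patches, rcond=None)
    return solution.T

# Synthetic check with an arbitrary matrix (placeholder values, not a calibration).
true_ccm = np.array([[1.6, -0.4, -0.2],
                     [-0.3, 1.5, -0.2],
                     [0.0, -0.5, 1.5]])
rng = np.random.default_rng(1)
patches = rng.random((24, 3))
fitted = fit_color_matrix(patches, patches @ true_ccm.T)
image = rng.random((4, 5, 3))
corrected = apply_color_matrix(image, fitted)
```

The matrix is only meaningful on linear data, so in this sketch it is assumed to be applied before any gamma or display encoding.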

Geometric corrections

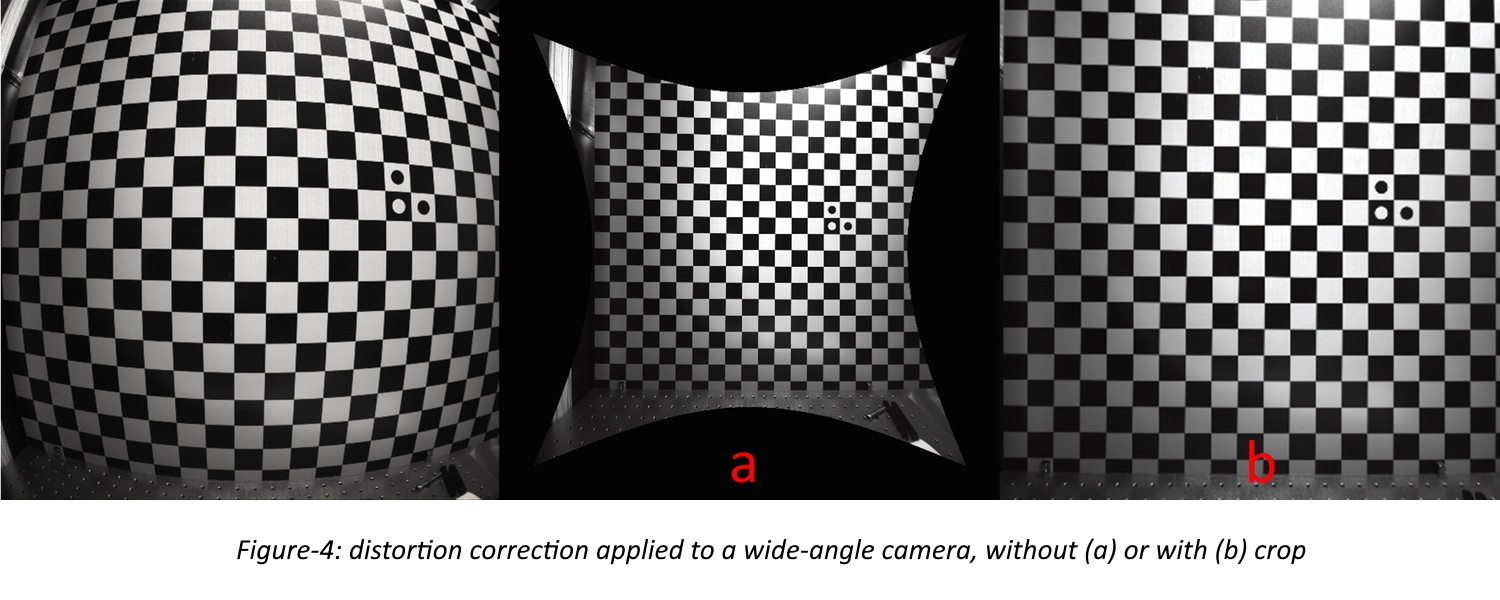

Wide-angle cameras introduce significant distortion, which can be corrected by applying a polynomial[5] to the (x,y) coordinates (Figure-6, d→e). This processing deforms the image, which no longer lies on a regular square grid: it can either be cropped or kept whole (Figure-4), depending on the need. The resulting image is nominally regularly sampled, with a pixel size at the center of the field still equal to the original resolution.
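A minimal sketch of such a correction is given below. It assumes a purely radial polynomial in even powers of the radius and nearest-neighbour resampling; the actual CASPIP polynomial form is not described here, so `undistort`, `coeffs` and `center` are hypothetical. Output pixels whose source falls outside the raw image are set to NaN, which corresponds to keeping the whole image rather than cropping it.

```python
import numpy as np

def undistort(image, coeffs, center=None):
    """Resample a 2-D image through a radial polynomial coordinate mapping."""
    rows, cols = image.shape
    if center is None:
        center = ((rows - 1) / 2.0, (cols - 1) / 2.0)
    cy, cx = center
    yy, xx = np.mgrid[0:rows, 0:cols].astype(np.float64)
    dx, dy = xx - cx, yy - cy
    r2 = dx**2 + dy**2
    # Radial scale 1 + k1*r^2 + k2*r^4 + ...; equal to 1 at the center,
    # so the central pixel size is unchanged.
    scale = np.ones_like(r2)
    for n, k in enumerate(coeffs, start=1):
        scale += k * r2**n
    src_x = np.rint(cx + dx * scale).astype(int)
    src_y = np.rint(cy + dy * scale).astype(int)
    inside = (src_x >= 0) & (src_x < cols) & (src_y >= 0) & (src_y < rows)
    out = np.full(image.shape, np.nan)
    out[inside] = image[src_y[inside], src_x[inside]]
    return out
```

Replacing the nearest-neighbour lookup with bilinear or bicubic interpolation would give smoother results at the cost of a little more code.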

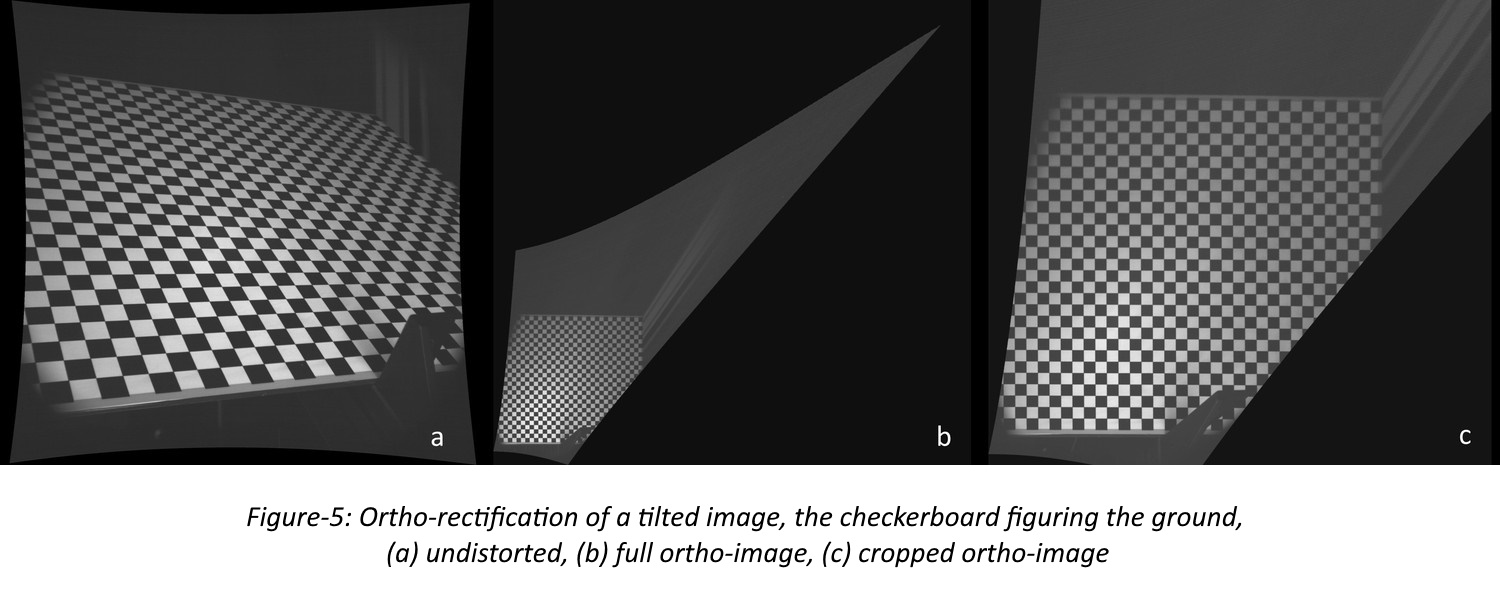

Observing the microscopic structure of the ground near the rover requires pointing the camera in a tilted direction, which results in a distorted image because the observed plane is not perpendicular to the pointing direction. This is the case for CAM-M on Rashid-1 and for the MMX wheel cameras, which observe the interaction between the wheels and the regolith. CASPIP can compute "ortho-images" from the tilt angles (Figure-5). If the ground surface is not flat, residual distortion is still expected in the result.
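The geometry can be sketched with an ideal pinhole camera above a flat ground. Everything in this example is an assumption made for illustration: a single tilt angle measured from the vertical, a known camera height, a focal length `f` in pixels, no lens distortion, and hypothetical function names that are not the CASPIP localization API.

```python
import numpy as np

def camera_axes(tilt_rad):
    """Rows are the camera x, y and optical axes in a ground frame (Z up)."""
    s, c = np.sin(tilt_rad), np.cos(tilt_rad)
    return np.array([[1.0, 0.0, 0.0],
                     [0.0, -c, -s],
                     [0.0, s, -c]])

def pixel_to_ground(u, v, f, cx, cy, tilt_rad, height):
    """Direct localization: intersect the pixel ray with the plane Z = 0."""
    ray = np.array([(u - cx) / f, (v - cy) / f, 1.0]) @ camera_axes(tilt_rad)
    if ray[2] >= 0.0:
        raise ValueError("ray does not reach the ground")
    t = -height / ray[2]
    return t * ray[0], t * ray[1]

def ground_to_pixel(x, y, f, cx, cy, tilt_rad, height):
    """Inverse localization; returns NaNs for points behind the camera."""
    p_cam = camera_axes(tilt_rad) @ np.array([x, y, -height])
    if p_cam[2] <= 0.0:
        return np.nan, np.nan
    return cx + f * p_cam[0] / p_cam[2], cy + f * p_cam[1] / p_cam[2]

def ortho_image(image, xs, ys, f, cx, cy, tilt_rad, height):
    """Resample the image on a regular ground grid (nearest neighbour)."""
    rows, cols = image.shape
    out = np.full((len(ys), len(xs)), np.nan)
    for i, y in enumerate(ys):
        for j, x in enumerate(xs):
            u, v = ground_to_pixel(x, y, f, cx, cy, tilt_rad, height)
            if np.isnan(u):
                continue
            r, c = int(round(v)), int(round(u))
            if 0 <= r < rows and 0 <= c < cols:
                out[i, j] = image[r, c]
    return out
```

With this convention the principal point maps to the ground point `(0, height * tan(tilt))`, and a zero tilt reduces to a plain nadir view with a ground sampling of `height / f` per pixel.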

FURTHER DEVELOPMENTS

Since proximity cameras have a very small depth of field, an object (such as a rock) or a hole will increase defocus in the field of view. We therefore plan to develop a function that makes the deconvolution vary across the detector field of view.

Future CASPEX cameras will also include complex filter arrays[9] with typically 8 or 25 spectral bands, which will require a specific "demosaicing" function. We are working on new solutions that will be implemented in CASPIP. Such cameras and processing will provide both spectral capability and good spatial resolution without moving parts such as filter wheels.

Other developments can be considered in the framework of the collaboration with CNES for new space exploration missions.

REFERENCES

[1] Virmontois, C. et al, CASPEX: Camera for space exploration, in preparation (2024)

[2] Wiens, R.C., Maurice, S. et al, Space Sci. Rev. 217, 4 (2021), https://doi.org/10.1007/s11214-020-00777-5

[3] Els, S.G., Almarzooqi, H. et al, Space Sci. Rev, under submission (2024)

[4] Michel, P. et al, Earth, Planets and Space 74(1), 2 (2022), https://doi.org/10.1186/s40623-021-01464-7

[5] Théret, N. et al, Space Sci. Rev, under submission (2024)

[6] Lebrun, M. et al, IPOL, vol.3, pp.1-42 (2013), http://dx.doi.org/10.1137/120874989

[7] Hamilton, J.F.Jr., Adams, J.E.Jr., Patent US5629734A (1996), https://patents.google.com/patent/US5629734A/en

[8] Malvar, H.S. et al, International Conference on Acoustics, Speech, and Signal Processing, pp.iii-485 (2004), https://doi.org/10.1109/ICASSP.2004.1326587

[9] Cucchetti, E. et al, Color and Imaging Conf., pp.205-212 (2022), https://doi.org/10.2352/CIC.2022.30.1.36

How to cite: Théret, N., Douaglin, Q., Cucchetti, E., Robert, E., Lucas, S., Lalucaa, V., and Virmontois, C.: CASPEX Image Processing: a tool for space exploration, Europlanet Science Congress 2024, Berlin, Germany, 8–13 Sep 2024, EPSC2024-15, https://doi.org/10.5194/epsc2024-15, 2024.