Small Planetary Body Shape Modeling Using a Sparse Image Set

- ¹Institute of Geodesy and Geoinformation Science, Technische Universität Berlin, Germany (hao.chen.2@campus.tu-berlin.de)

- ²Institute of Planetary Research, German Aerospace Center (DLR), Berlin, Germany

- ³Institute of Space Technology & Space Applications (LRT 9.1), University of the Bundeswehr Munich, Neubiberg, Germany

- Introduction

Image-based surface and shape modeling is a fundamental task in the exploration of small bodies in the Solar System. Deep learning methods have been increasingly applied to retrieve topographic models of planetary surfaces (Chen et al., 2022a; 2022b), but stereo-photogrammetry (SPG) and stereo-photoclinometry (SPC) remain the two primary methods for shape modeling. To achieve detailed global shape models, SPG and SPC usually rely on a large number of images. In this study, we introduce a neural implicit shape modeling method that works with a sparse set of images. Unlike traditional explicit representation methods such as SPG, which interpolate a surface from discrete points, our approach describes the body surface with a continuous implicit function. The performance is validated on the asteroid Ryugu, explored by Hayabusa2 (Watanabe et al., 2019).
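The contrast between explicit and implicit representations can be illustrated with a minimal sketch, assuming a unit sphere as a toy body. An implicit function returns a signed distance for any query point, while a discrete point set, without an interpolated surface, only supports an unsigned distance limited by its sampling density. The names `implicit_sphere_sdf`, `explicit_points` and `explicit_distance` are hypothetical and are not part of the authors' method.

```python
import numpy as np

def implicit_sphere_sdf(p, radius=1.0):
    # Continuous implicit representation (toy example): defined for any
    # query point, negative inside the body, zero on the surface.
    return np.linalg.norm(p, axis=-1) - radius

def explicit_points(n=500, radius=1.0, seed=0):
    # Explicit representation (toy example): a finite set of surface points,
    # standing in for points produced by a method such as SPG.
    rng = np.random.default_rng(seed)
    v = rng.normal(size=(n, 3))
    return radius * v / np.linalg.norm(v, axis=1, keepdims=True)

def explicit_distance(p, pts):
    # Without an interpolated surface, the distance to the body can only be
    # approximated by the nearest stored point: unsigned and sampling-limited.
    diff = pts[None, :, :] - p[:, None, :]
    return np.min(np.linalg.norm(diff, axis=-1), axis=1)

queries = np.array([[0.0, 0.0, 1.5],   # outside the body
                    [0.3, 0.2, 0.1],   # inside the body
                    [0.6, 0.0, 0.8]])  # on the surface
implicit = implicit_sphere_sdf(queries)
explicit = explicit_distance(queries, explicit_points())
print(implicit)
print(explicit)
```

The implicit values carry the inside/outside sign and vanish on the surface, whereas the point-based distances are never smaller than the true distance and stay positive even for a point lying exactly on the surface.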

- Method

The method implicitly models both surface details and the overall shape using a signed distance function (SDF) (Chen et al., 2024a). The 3D scene of the target is represented by neural implicit functions, encoded as multi-layer perceptrons (MLPs), which map the inputs to SDF values and color (image gray intensity) (Chen et al., 2024b). To train the parameters of the SDF and color networks, a volume rendering scheme renders images from the SDF-based representation, so that the networks can be optimized against the observed images. We add surrounding points with multi-scale receptive fields as additional network input and design a mask-based classification strategy to capture detailed surface features and avoid over-smoothing.
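The SDF-based volume rendering step can be illustrated with a minimal sketch under several stated assumptions. The abstract does not specify the rendering formula, so a NeuS-style conversion from SDF values to opacity is swapped in here. The trained SDF and color MLPs are replaced by an analytic sphere and a Lambertian gray value. The `multiscale_neighbors` helper, its radii and its neighbor count are hypothetical and only show what multi-scale surrounding-point input could look like. The mask-based classification strategy is not modeled.

```python
import numpy as np

def sdf(p, radius=1.0):
    # Stand-in for the SDF network (assumption): exact signed distance to a sphere.
    return np.linalg.norm(p, axis=-1) - radius

def gray(p, sun_dir=(-1.0, 0.0, 0.0)):
    # Stand-in for the color network (assumption): Lambertian gray value max(0, n . l),
    # with the normal n taken as the normalized SDF gradient (analytic for a sphere).
    n = p / np.linalg.norm(p, axis=-1, keepdims=True)
    return np.clip(n @ np.asarray(sun_dir), 0.0, None)

def multiscale_neighbors(p, radii=(0.01, 0.02, 0.04), k=4, seed=0):
    # Hypothetical sampler: k random neighbors on spheres of several radii around
    # each point p (N, 3); returns (N, len(radii) * k, 3) as extra network input.
    rng = np.random.default_rng(seed)
    groups = []
    for r in radii:
        d = rng.normal(size=(p.shape[0], k, 3))
        d /= np.linalg.norm(d, axis=-1, keepdims=True)
        groups.append(p[:, None, :] + r * d)
    return np.concatenate(groups, axis=1)

def render_ray(origin, direction, near=0.0, far=6.0, n=256, s=64.0):
    # NeuS-style discrete volume rendering along one ray (swapped-in technique).
    t = np.linspace(near, far, n)
    f = sdf(origin + t[:, None] * direction)
    cdf = 1.0 / (1.0 + np.exp(-s * f))                       # logistic CDF Phi_s(f)
    alpha = np.clip((cdf[:-1] - cdf[1:]) / (cdf[:-1] + 1e-7), 0.0, 1.0)
    trans = np.cumprod(np.concatenate([[1.0], 1.0 - alpha[:-1]]))  # transmittance
    w = trans * alpha                                         # per-interval weights
    t_mid = 0.5 * (t[:-1] + t[1:])
    c = gray(origin + t_mid[:, None] * direction)
    return np.sum(w * c), np.sum(w * t_mid), w               # intensity, depth, weights

origin = np.array([-3.0, 0.5, 0.0])
direction = np.array([1.0, 0.0, 0.0])
intensity, depth, w = render_ray(origin, direction)
nb = multiscale_neighbors(np.zeros((2, 3)))
print(intensity, depth, w.sum(), nb.shape)
```

The weights concentrate where the SDF crosses zero, so the rendered depth approaches the ray-surface intersection and the rendered gray value approaches the shading at that point. In training, the difference between such rendered values and observed pixel intensities would drive the network parameters.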

- Dataset

We selected 70 images with a resolution of 2.2 m, captured by the Optical Navigation Camera Telescope (ONC-T; Kameda et al., 2017), to reconstruct the shape model of Ryugu. All images were acquired from camera viewpoints near the equatorial plane. Watanabe et al. (2019) previously derived a Ryugu shape model from about three times as many images as used in this study, at a higher image resolution (about 0.7 m). The exterior orientation parameters of the cameras are estimated in a preprocessing step using structure from motion (SfM) techniques.

- Experiment Results

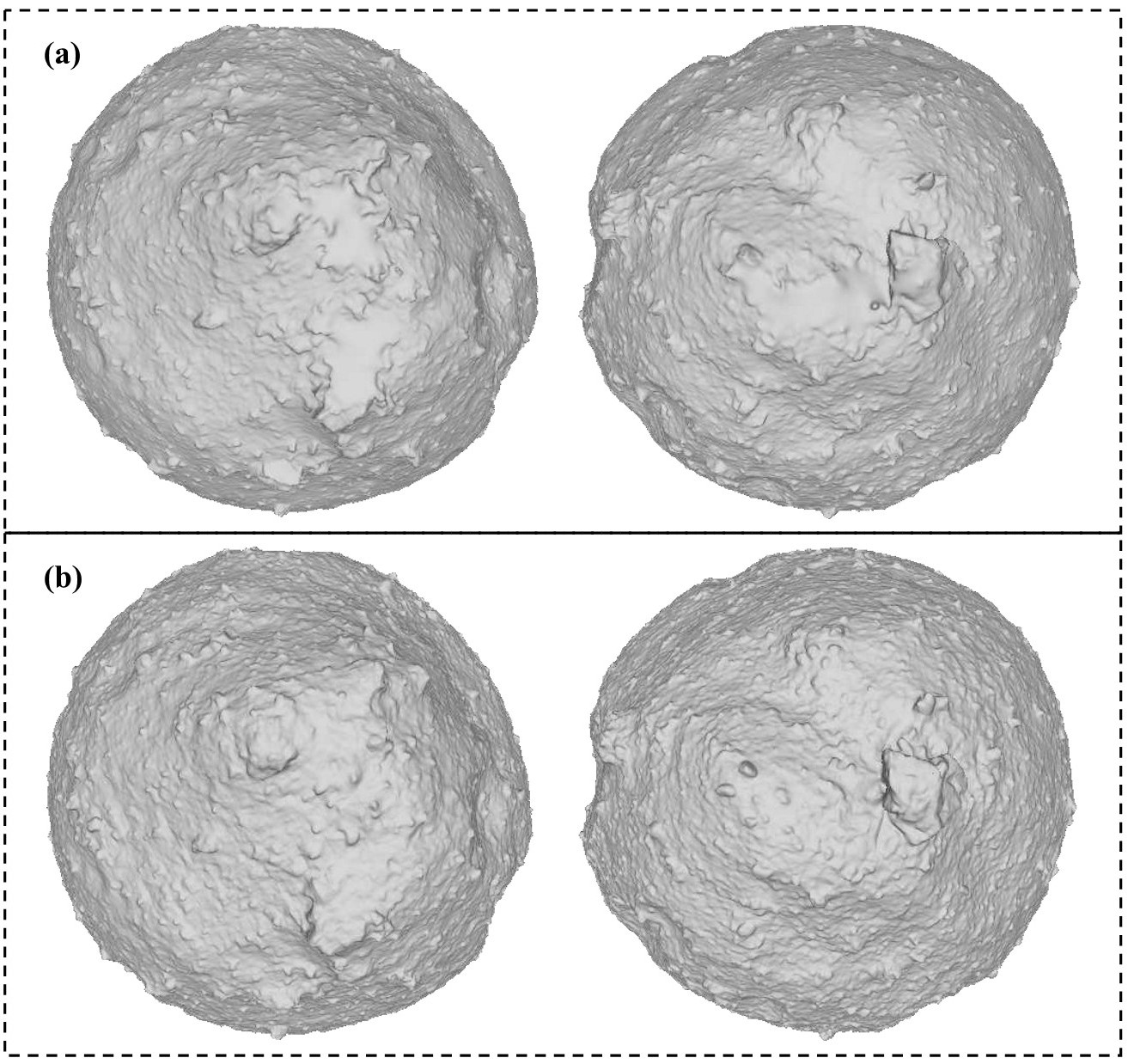

Fig. 1a shows the model derived by Watanabe et al. (2019) using SfM and multi-view stereo (MVS), alongside the shape model derived by our method (Fig. 1b). Although our model is based on about one third as many images, at lower resolution, it still exhibits detailed features consistent with the model of Watanabe et al. (2019). Moreover, our results are robust even in areas with limited camera coverage. For instance, in the polar regions, where the SfM + MVS approach fails to retrieve some boulders, our approach recovers them.

Fig. 1. Polar views of the shape models of Ryugu reconstructed by Watanabe et al. (2019) (a) and this study (b).

- Conclusion

We introduced a novel neural implicit shape modeling method that uses a sparse set of images. It effectively recovers detailed surface features. Based on current experiments, it appears to be a promising tool to support shape modeling in future small body exploration missions.

- References

Chen et al., 2022a. Pixel-resolution DTM generation for the lunar surface based on a combined deep learning and shape-from-shading (SFS) approach. In: ISPRS 2022. Nice, France.

Chen et al., 2022b. CNN-based large area pixel-resolution topography retrieval from single-view LROC NAC images constrained with SLDEM. IEEE JSTARS 15, 9398–9416. https://doi.org/10.1109/JSTARS.2022.3214926.

Chen et al., 2024a. Image-based small body shape modeling using the neural implicit method. In: EGU 2024. Vienna, Austria.

Chen et al., 2024b. Neural implicit shape modeling for small planetary bodies from multi-view images using a mask-based classification sampling strategy. ISPRS Journal of Photogrammetry and Remote Sensing 212, 122–145. https://doi.org/10.1016/j.isprsjprs.2024.04.029.

Kameda et al., 2017. Preflight calibration test results for optical navigation camera telescope (ONC-T) onboard the Hayabusa2 spacecraft. Space Sci. Rev. 208, 17–31. https://doi.org/10.1007/s11214-015-0227-y.

Watanabe et al., 2019. Hayabusa2 arrives at the carbonaceous asteroid 162173 Ryugu: A spinning top-shaped rubble pile. Science 364 (6437), 268–272. https://doi.org/10.1126/science.aav8032.

How to cite: Chen, H., Willner, K., Hu, X., Ziese, R., Damme, F., Gläser, P., Neumann, W., and Oberst, J.: Small Planetary Body Shape Modeling Using a Sparse Image Set, Europlanet Science Congress 2024, Berlin, Germany, 8–13 Sep 2024, EPSC2024-151, https://doi.org/10.5194/epsc2024-151, 2024.