- ¹Aurora Technology B.V. for ESA, European Space Astronomy Centre, Villanueva de la Cañada, Spain (bjoern.grieger@ext.esa.int)

- ²Instituto de Astrofísica de Canarias, La Laguna, Spain (jmlc@iac.es)

- ³Department of Astrophysics, University of La Laguna, La Laguna, Spain (jmlc@iac.es)

- ⁴Space Sciences Lab, University of California, Berkeley, California, USA (hannahgoldberg@berkeley.edu)

- ⁵Department of Geosciences and Geography, University of Helsinki, Helsinki, Finland (tomas.kohout@helsinki.fi)

- ⁶Department of Mechatronics, Optics and Engineering Informatics, Budapest University of Technology and Economics, Budapest, Hungary

- ⁷ESA, European Space Astronomy Centre, Villanueva de la Cañada, Spain (michael.kueppers@esa.int)

- ⁸Astronomical Institute of the Romanian Academy, Bucharest, Romania (cu.marcel1983@gmail.com)

- ⁹University of Craiova, Craiova, Romania (prodangp9@gmail.com)

On 26 September 2022, NASA’s Double Asteroid Redirection Test (DART) mission impacted Dimorphos, the moonlet of near-Earth asteroid 65803 Didymos, performing the world’s first planetary defence test. ESA’s Hera mission will be launched in October 2024 and will rendezvous with the Didymos system at the end of 2026 or the beginning of 2027. It will investigate the system closely, in particular the consequences of the DART impact.

Hera carries the hyperspectral imager Hyperscout-H. Its sensor consists of 2048 × 1088 pixels, which are arranged in macro pixel blocks of 5 × 5 pixels. The 25 pixels of each block are covered with filters for 25 different wavelengths, with center wavelengths ranging from 657 to 949 nm. Therefore, each of the 2048 × 1088 pixels provides brightness information for only one wavelength, and the theoretical 2048 × 1088 × 25 data cube is only sparsely populated.
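The following sketch illustrates how such a mosaic frame maps onto the sparse data cube. It assumes, hypothetically, that the 25 filters are laid out row by row inside each macro pixel, so that the band index of a pixel is 5 × (row mod 5) + (column mod 5); the actual Hyperscout-H filter arrangement is not given here and may differ. The function names `filter_index_map` and `sparse_cube` are illustrative.

```python
import numpy as np

BLOCK = 5                 # macro pixel size: 5 x 5 micro pixels
N_BANDS = BLOCK * BLOCK   # 25 wavelengths

def filter_index_map(height, width):
    """Band index (0..24) of the filter covering each micro pixel.

    Hypothetical layout: filters ordered row by row inside every 5 x 5 macro pixel.
    """
    rows = np.arange(height)[:, None] % BLOCK
    cols = np.arange(width)[None, :] % BLOCK
    return rows * BLOCK + cols

def sparse_cube(frame):
    """Scatter a raw (H, W) frame into an (H, W, 25) cube; NaN where nothing was measured."""
    h, w = frame.shape
    idx = filter_index_map(h, w)
    cube = np.full((h, w, N_BANDS), np.nan)
    r, c = np.indices((h, w))
    cube[r, c, idx] = frame   # exactly one band per micro pixel receives a value
    return cube
```

For a full 2048 × 1088 frame, such a float64 cube would occupy roughly 446 MB, of which only one entry in 25 per pixel holds a measured value.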

A simple, straightforward approach to filling the sparse data cube would be to move a 5 × 5 pixel window in one-pixel steps horizontally and vertically over the whole frame and to assign the resulting 25-wavelength spectrum to the center pixel of the window. Besides reducing the effective image resolution to that of the rather coarse macro pixels, this method has limited accuracy because of pixel-to-pixel variations of the spectra and, even more, because of varying albedo and shading effects caused by varying surface inclination. This makes the resulting spectra very noisy.
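The direct sliding-window approach can be sketched as below, under the same hypothetical row-by-row filter layout as before. Because any 5 consecutive rows and 5 consecutive columns cover every residue modulo 5 exactly once, each 5 × 5 window contains all 25 filters exactly once, wherever it is placed. The function name `direct_spectra` is illustrative.

```python
import numpy as np

BLOCK = 5
N_BANDS = BLOCK * BLOCK

def filter_index_map(height, width):
    # Hypothetical row-by-row filter layout inside each 5 x 5 macro pixel.
    rows = np.arange(height)[:, None] % BLOCK
    cols = np.arange(width)[None, :] % BLOCK
    return rows * BLOCK + cols

def direct_spectra(frame):
    """Direct approach: assign the 25 values of each 5 x 5 window to its center pixel.

    Pixels closer than 2 pixels to the border have no complete window and stay NaN.
    """
    h, w = frame.shape
    idx = filter_index_map(h, w)
    half = BLOCK // 2
    cube = np.full((h, w, N_BANDS), np.nan)
    for i in range(half, h - half):
        for j in range(half, w - half):
            win_vals = frame[i - half:i + half + 1, j - half:j + half + 1]
            win_idx = idx[i - half:i + half + 1, j - half:j + half + 1]
            spectrum = np.empty(N_BANDS)
            spectrum[win_idx.ravel()] = win_vals.ravel()  # every band occurs once per window
            cube[i, j] = spectrum
    return cube
```

The explicit loops favor clarity over speed. If neighboring micro pixels differ in brightness, for instance through shading, those differences end up directly in the recovered spectrum, which is the weakness described above.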

In order to retrieve more accurate spectra at higher spatial resolution, we separate the spectrum at each micro pixel into a normalized spectrum and a brightness scaling factor. We assume the normalized spectra, but not necessarily the scaled spectra, to be spatially smooth. Ratios of measured values are used to iteratively compute the normalized spectral values from adjacent pixels. After convergence, the normalized spectra are brightness-scaled to reproduce the measured values. This approach, which we call de2, makes it possible to fill the complete data cube at full micro pixel resolution. Application to simulated test images shows that spectra are recovered much more accurately than with the direct approach and that very little spatial detail is lost. An example spectrum for a simulated Hyperscout-H image based on data of asteroid 25143 Itokawa acquired by the Asteroid Multi-band Imaging Camera (AMICA) aboard the Hayabusa spacecraft is shown in the image below.

The green line is the true (simulated) spectrum, the blue line is the result of the simple direct approach, and the red line is the result of our de2 approach.
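The final step of the de2 approach described above, brightness-scaling the normalized spectra so that they reproduce the measured values, can be sketched as follows. The iterative, ratio-based estimation of the normalized spectra is not described in enough detail here to reproduce, so this sketch simply assumes the normalized spectra are already given; the function name `rescale_to_measurements` and the array layout are hypothetical.

```python
import numpy as np

def rescale_to_measurements(normalized, frame, band_index):
    """Brightness-scale normalized spectra so they reproduce the measured values.

    normalized : (H, W, 25) normalized spectra, assumed to be estimated beforehand
    frame      : (H, W) raw measurements, one band per micro pixel
    band_index : (H, W) integer index of the band measured at each micro pixel
    Returns the full (H, W, 25) cube and the (H, W) brightness scaling factors.
    """
    h, w = frame.shape
    r, c = np.indices((h, w))
    own_band = normalized[r, c, band_index]   # normalized value in each pixel's own band
    scale = frame / own_band                  # factor that maps it onto the measurement
    return scale[..., None] * normalized, scale
```

By construction, every rescaled spectrum matches the measured value in the band its pixel actually observed, while its shape across the other 24 bands comes from the normalized spectrum.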

The spectra of atmosphereless solar system bodies generally display broad absorption features across visible to near-infrared wavelengths. The spectra are relatively smooth, without sharp absorption or emission lines. It should therefore be possible to find a low-dimensional representation that preserves most of the information in the spectra. Such a representation enables a concise visualization of the full data cubes, e.g., through false color images.
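As an illustration of the false color idea, any representation with three components per pixel can be mapped onto the red, green and blue channels. The sketch below, with the hypothetical function name `false_color`, stretches each component linearly to [0, 1]; this is one simple choice among many, not necessarily the mapping used by the authors.

```python
import numpy as np

def false_color(latent):
    """Map an (H, W, 3) low-dimensional representation to an RGB image in [0, 1].

    Each component is stretched linearly and independently; a constant component maps to 0.
    """
    lo = latent.min(axis=(0, 1), keepdims=True)
    hi = latent.max(axis=(0, 1), keepdims=True)
    span = np.where(hi > lo, hi - lo, 1.0)   # avoid division by zero for flat channels
    return (latent - lo) / span
```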

For estimating a low-dimensional representation of the spectra, we employ layered feed-forward neural networks trained with backpropagation. These networks have an input layer and several processing layers of neurons; the last layer of neurons forms the output layer. Such neural networks are commonly used for supervised learning. This would require a large data set of spectra, each accompanied by a classification given by a few parameters. The neural network would be trained on these data to reproduce the classifications and should subsequently be able to classify new, as yet unclassified spectra. However, we do not have a large data set of classified spectra, and simulating one appropriately may be quite difficult considering the unique reshaping that the DART impact may have caused. After such recent reshaping, Dimorphos may be substantially different from any solar system body visited by spacecraft so far.

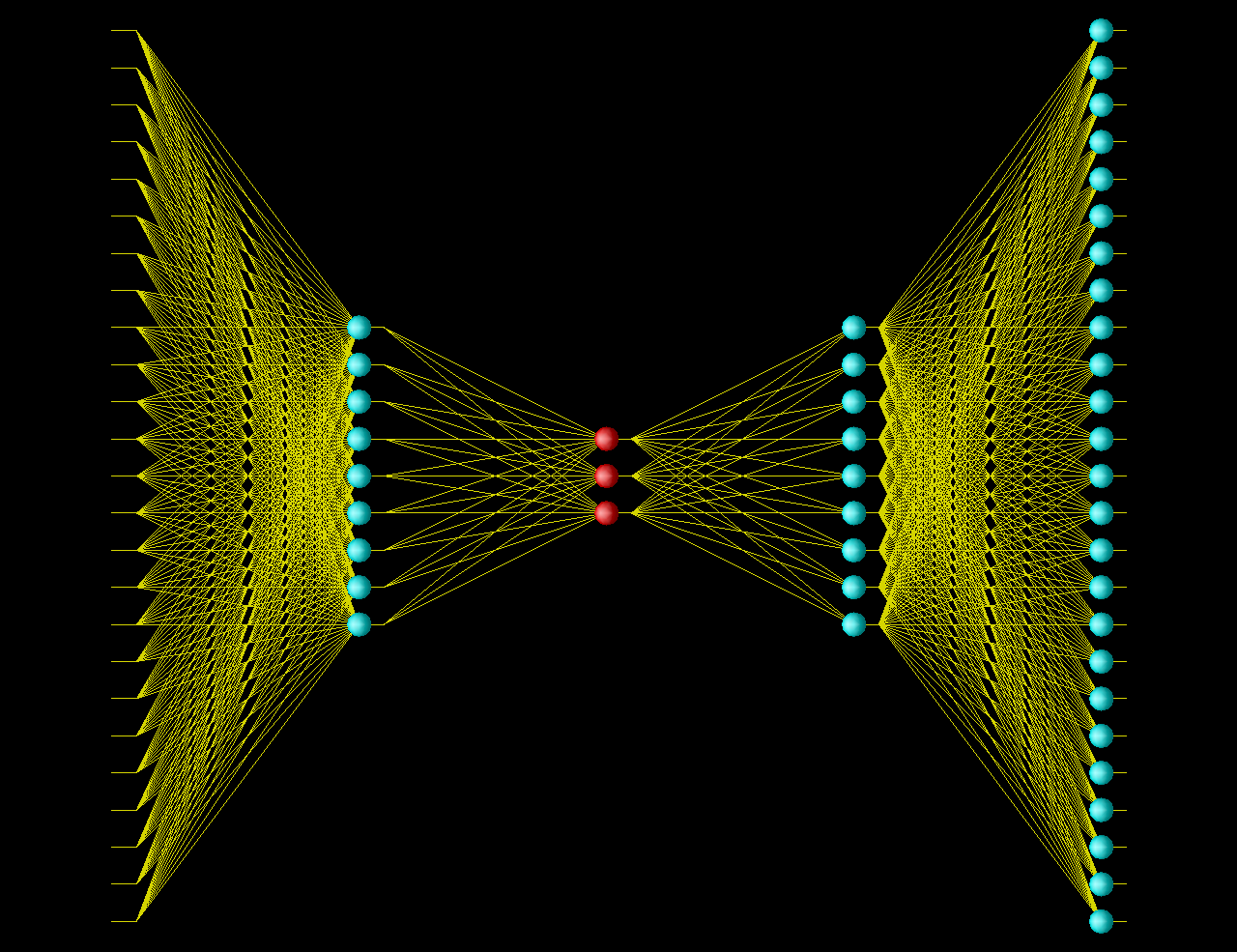

Therefore, we use a different approach and set up the layered neural network for unsupervised learning. We apply the same spectrum as the input and as the desired output of the neural network. One layer of the network has only a small number of neurons, so the network is forced to find a low-dimensional representation of the input spectrum, the so-called latent dimensions, from which it must reconstruct the spectrum as accurately as possible. The image below shows an example of such a neural network.

The network has four layers of neurons plus the input layer. The input and output layers have 25 dimensions, like our spectra. In the center, there is a layer of only three neurons, shown in red. This is the bottleneck through which the neural network has to convey the information in the spectra. The output values of these three neurons provide a low-dimensional representation of the spectra, the latent dimensions. We show the results for the simulated Hyperscout-H image based on Itokawa data.
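A minimal NumPy sketch of such a network, with layer sizes 25, 10, 3, 10 and 25 trained by plain backpropagation so that the output reproduces the input, is shown below. Several details are assumptions not stated in the abstract: the hidden width of 10, tanh activations with a linear output layer, the mean squared reconstruction error, full-batch gradient descent, evenly spaced band centers, and the synthetic training spectra (a slope plus one broad absorption band), which are not the Itokawa-based data.

```python
import numpy as np

def init_params(sizes, rng):
    """Random weights and zero biases for consecutive layer sizes, e.g. [25, 10, 3, 10, 25]."""
    return [(rng.normal(0.0, 1.0 / np.sqrt(m), size=(m, n)), np.zeros(n))
            for m, n in zip(sizes[:-1], sizes[1:])]

def forward(params, x):
    """Activations of all layers: tanh in the hidden layers, linear output layer."""
    acts = [x]
    for k, (W, b) in enumerate(params):
        z = acts[-1] @ W + b
        acts.append(z if k == len(params) - 1 else np.tanh(z))
    return acts

def train_step(params, x, lr):
    """One full-batch gradient-descent step on the mean squared error between output and input."""
    acts = forward(params, x)
    delta = 2.0 * (acts[-1] - x) / x.size          # gradient w.r.t. the linear output layer
    grads = []
    for k in range(len(params) - 1, -1, -1):
        W, _ = params[k]
        grads.append((acts[k].T @ delta, delta.sum(axis=0)))
        if k > 0:
            delta = (delta @ W.T) * (1.0 - acts[k] ** 2)   # propagate back through tanh
    grads.reverse()
    new_params = [(W - lr * gW, b - lr * gb) for (W, b), (gW, gb) in zip(params, grads)]
    return new_params, float(np.mean((acts[-1] - x) ** 2))

# Synthetic smooth spectra (illustrative only): a linear slope plus one broad absorption band.
rng = np.random.default_rng(0)
wl = np.linspace(657.0, 949.0, 25)                 # band centers assumed evenly spaced
slope = rng.uniform(-0.3, 0.3, size=(200, 1))
depth = rng.uniform(0.0, 0.2, size=(200, 1))
spectra = 1.0 + slope * (wl - 800.0) / 150.0 - depth * np.exp(-((wl - 900.0) / 40.0) ** 2)
x = spectra - spectra.mean(axis=0)                 # center each band before training

params = init_params([25, 10, 3, 10, 25], rng)
losses = []
for _ in range(2000):
    params, loss = train_step(params, x, lr=0.2)
    losses.append(loss)
latent = forward(params, x)[2]                     # (200, 3) outputs of the bottleneck neurons
```

Because the input is also the target, no labels are needed; the three bottleneck outputs in `latent` play the role of the latent dimensions and could, for example, be stretched into the red, green and blue channels of a false color image.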

How to cite: Grieger, B., de León, J., Goldberg, H., Kohout, T., Kovács, G., Küppers, M., Nagy, B. V., Popescu, M., and Prodan, G.: Low dimensional representations of spectra from the Hyperscout-H hyperspectral imager aboard the Hera mission with artificial neural networks, Europlanet Science Congress 2024, Berlin, Germany, 8–13 Sep 2024, EPSC2024-506, https://doi.org/10.5194/epsc2024-506, 2024.