ChemCam rock classification using explainable AI

- 1Institute of Optical Sensor Systems, German Aerospace Center (DLR), Berlin, Germany (ana.lomashvili@dlr.de)

- 2Institut de Recherche en Astrophysique et Planétologie (IRAP), Toulouse, France

- 3Institute of Planetary Research, German Aerospace Center (DLR), Berlin, Germany

Since 2012, NASA’s Curiosity rover has been searching for evidence of past habitability on Mars [1,2]. For this purpose, the rover carries a variety of scientific instruments. One of them is ChemCam (Chemistry and Camera), which consists of two parts: LIBS (Laser-Induced Breakdown Spectrometer) and RMI (Remote Micro Imager). LIBS provides the elemental composition of targets, and the RMI takes context images [3]. ChemCam has measured more than 4000 targets since the rover landed [4]. A common question when analyzing ChemCam targets is when similar targets were observed before. The size of the dataset makes manual labeling tedious, and automating the process would reduce the human workload. In this work, we explore machine learning methods to improve the classification of RMI images by rock texture.

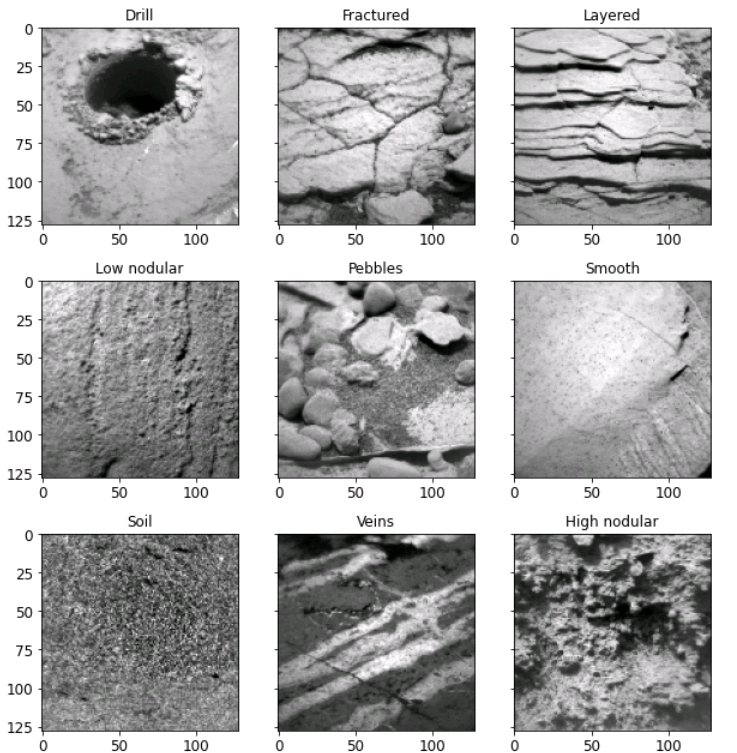

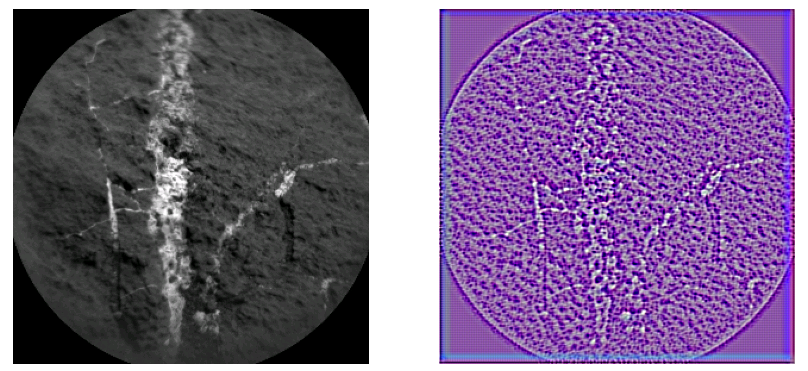

In previous work, we used unsupervised classification (k-means clustering) to derive potential labels for the images [5]. We arrived at nine classes: smooth, low nodular, high nodular, fractured, veins, layered, pebbles, soil, and drill, shown in Fig. 1. We labeled 100 images per class and used transfer learning with a pretrained VGG16 model so that this small training set would suffice for a convolutional neural network [6]. The experiments showed that more than one label applies to most ChemCam targets, which led us to multilabel classification. Although we added labels to the targets and fine-tuned the models, we were not able to exceed 80% accuracy. The model kept confusing labels. One way to investigate potential reasons is to employ Explainable Artificial Intelligence (XAI) methods to understand what the model has learned. Various methods exist to visualize the patterns learned by each layer of a deep neural network. To address the so-called “black box”, we employed Guided Backpropagation [6]. The technique combines backpropagation with the deconvolutional network approach: by setting negative gradients to zero during the backward pass, it highlights the input pixels that most strongly activate a given layer. An example of guided backpropagation applied to the 10th layer of VGG16 is shown in Fig. 2. This method allowed us to understand which textural features the model had learned and which needed further refinement.
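To make the gradient rule concrete, the following is a minimal NumPy sketch of guided backpropagation on a toy two-layer ReLU network. It is not the VGG16 pipeline used in this work: the weights `W1` and `W2`, the input `x`, and the function names are hypothetical and chosen only so the numbers can be checked by hand. The only difference from ordinary backpropagation is the extra mask on the gradient arriving at the ReLU.

```python
import numpy as np


def guided_backprop(x, W1, W2, target):
    """Guided-backpropagation saliency for scores = W2 @ relu(W1 @ x)."""
    # Forward pass; keep the pre-activation z for the backward masks.
    z = W1 @ x
    h = np.maximum(z, 0.0)
    scores = W2 @ h

    # Start from a one-hot signal on the output unit of interest.
    grad_scores = np.zeros_like(scores)
    grad_scores[target] = 1.0
    grad_h = W2.T @ grad_scores

    # Guided ReLU: pass the signal only where the forward input was positive
    # (ordinary backprop rule) AND the incoming gradient is positive
    # (deconvnet rule). Negative gradients are set to zero.
    grad_z = grad_h * (z > 0) * (grad_h > 0)
    return W1.T @ grad_z


def plain_backprop(x, W1, W2, target):
    """Ordinary gradient of scores[target] with respect to x, for comparison."""
    z = W1 @ x
    grad_scores = np.zeros(W2.shape[0])
    grad_scores[target] = 1.0
    grad_h = W2.T @ grad_scores
    grad_z = grad_h * (z > 0)  # only the forward mask
    return W1.T @ grad_z


# Hypothetical toy values, not taken from the ChemCam model.
x = np.array([1.0, 2.0, -1.0])
W1 = np.array([[1.0, 0.0, 0.0],
               [0.0, 1.0, 0.0],
               [0.0, 0.0, 1.0],
               [1.0, 1.0, 0.0]])
W2 = np.array([[1.0, -1.0, 1.0, 0.5]])

print("guided:", guided_backprop(x, W1, W2, target=0))  # 1.5, 0.5, 0.0
print("plain: ", plain_backprop(x, W1, W2, target=0))   # 1.5, -0.5, 0.0
```

In this toy case the second hidden unit contributes a negative gradient under plain backpropagation, and the guided rule suppresses it. For a deep network such as VGG16 the same masking would be applied at every ReLU on the path from the chosen layer back to the image.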

Additionally, XAI methods can estimate the importance of features in the training set. Some characteristics of an image may not influence the model's decisions and only add extra information that makes the model more complex and heavier. Given the limited onboard computing resources of in-situ missions, making models lighter is a priority. Shapley values, a concept from game theory, quantify the contribution of each feature to the model output, which can be interpreted as feature importance [7]. In this work, we evaluate the feature importance of our dataset in terms of model accuracy and remove unnecessary weight from the model.
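As an illustration of how Shapley values assign importance, the sketch below computes them exactly by enumerating all feature subsets. The feature names and the accuracy function `toy_accuracy` are invented for this example and do not come from our dataset. Exact enumeration scales exponentially with the number of features, so practical tools such as the method in [7] rely on approximations.

```python
from itertools import combinations
from math import factorial


def shapley_values(players, value):
    """Exact Shapley values of `players` for a set function `value`."""
    n = len(players)
    phi = {}
    for p in players:
        others = [q for q in players if q != p]
        total = 0.0
        for k in range(n):
            # Weight of a coalition of size k that does not contain p.
            weight = factorial(k) * factorial(n - k - 1) / factorial(n)
            for subset in combinations(others, k):
                s = frozenset(subset)
                # Marginal contribution of p when it joins coalition s.
                total += weight * (value(s | {p}) - value(s))
        phi[p] = total
    return phi


# Hypothetical features and accuracies, for illustration only.
features = ["texture", "edges", "brightness"]


def toy_accuracy(subset):
    """Made-up accuracy of a model that sees only the features in `subset`."""
    acc = 0.5
    if "texture" in subset:
        acc += 0.2
    if "edges" in subset:
        acc += 0.1
    if "texture" in subset and "edges" in subset:
        acc += 0.1  # interaction: the two features help more together
    return acc


phi = shapley_values(features, toy_accuracy)
for name in features:
    print(f"{name}: {phi[name]:.3f}")
```

Here "brightness" never changes the accuracy and receives a value of zero, which marks it as a candidate for removal. The interaction bonus is split equally between "texture" and "edges", and the three values sum to the gap between the accuracy with all features and with none.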

Overall, we explore multilabel classification of ChemCam targets based on their textures as captured in RMI images. Automating the labeling allows efficient interpretation of rocks and identification of regions with similar targets. We apply XAI methods such as guided backpropagation to improve the accuracy of the classification by visualizing the learned patterns. Additionally, by estimating feature importance using Shapley values, we make the model lighter for potential in-situ operation.

Figure 1 Representative images of the clusters and corresponding labels.

Figure 2 Left: ChemCam target "Rooibank" (sol 1266), displaying veins. Right: guided backpropagation applied to the target, highlighting the veins learned by the 10th layer of VGG16.

Refs:

[1] J. P. Grotzinger, J. Crisp, A. R. Vasavada, and R. P. Anderson. Mars Science Laboratory Mission and Science Investigation. Space Sci Rev, 2012.

[2] A. R. Vasavada. Mission Overview and Scientific Contributions from the Mars Science Laboratory Curiosity Rover After Eight Years of Surface Operations. Space Sci Rev, 2022.

[3] R. C. Wiens, S. Maurice, B. Barraclough, M. Saccoccio, and W. C. Barkley. The ChemCam Instrument Suite on the Mars Science Laboratory (MSL) Rover: Body Unit and Combined System Tests. Space Sci Rev, 2012.

[4] O. Gasnault et al. Exploring the sulfate-bearing unit: Recent ChemCam results at Gale crater, Mars. EPSC, 2024.

[5] A. Lomashvili et al. Rock classification via transfer learning in the scope of ChemCam RMI image data. LPSC, 2023.

[6] J. T. Springenberg, A. Dosovitskiy, T. Brox, and M. Riedmiller. Striving for Simplicity: The All Convolutional Net. ICLR, 2015.

[7] S. Lundberg and S. I. Lee. A Unified Approach to Interpreting Model Predictions. NIPS, 2017.

How to cite: Lomashvili, A., Rammelkamp, K., Gasnault, O., Bhattacharjee, P., Clavé, E., Egerland, C. H., and Schroeder, S.: ChemCam rock classification using explainable AI, Europlanet Science Congress 2024, Berlin, Germany, 8–13 Sep 2024, EPSC2024-780, https://doi.org/10.5194/epsc2024-780, 2024.