Multiple terms: term1 term2

red apples

returns results with all terms like:

Fructose levels in red and green apples

Precise match in quotes: "term1 term2"

"red apples"

returns results matching exactly like:

Anthocyanin biosynthesis in red apples

Exclude a term with -: term1 -term2

apples -red

returns results containing apples but not red:

Malic acid in green apples

hits for "" in

Network problems

Server timeout

Invalid search term

Too many requests

MITM3

Session assets

The application of machine learning techniques in astronomy has been rapidly growing, addressing various challenges such as predicting orbital stability, classifying celestial objects, and analysing images. However, the emerging trend of using large language models (LLMs) presents a novel approach that relies on natural language processing and explicit task definitions, rather than on statistical algorithms or probabilistic models. In this talk, I'll demonstrate the exceptional capabilities of LLMs, specifically GPT-4 as well as some other open source alternatives, in analyzing visual patterns and accurately classifying asteroids as resonant or non-resonant without any training, fine-tuning, or coding beyond writing an appropriate prompt in natural language. By leveraging the power of LLMs, it is possible to achieve an accuracy, precision, and recall of 100% in differentiating between pure libration, circulation, and mixed (transient) cases of resonant angles.

This approach introduces a new paradigm in astronomical data analysis, where complex tasks requiring human expertise can be completed with minimal effort and resources. The implications of this study extend beyond the identification of mean-motion resonances, as the methodology can be applied to a wide range of astronomical problems that involve pattern recognition, outlier detection, and decision-making tasks. This presentation will discuss the experimental design, results, and potential applications of LLMs in astronomy, highlighting the significance of this innovative approach in advancing astronomical research.

How to cite: Smirnov, E.: Effortless and accurate time series analysis in astronomy using Large Language Models, Europlanet Science Congress 2024, Berlin, Germany, 8–13 Sep 2024, EPSC2024-11, https://doi.org/10.5194/epsc2024-11, 2024.

Prompt Patterns provide general and reusable solutions to commonly occurring problems within specific contexts while interacting with a Large Language Model (LLM) such as GPT4. A catalog of generic prompt engineering patterns [1] has been published to help users improve LLM results and to promote further research into prompt engineering. Software engineering software patterns are an analog to prompt patterns, providing reusable solutions to common problems in a particular context.

The catalog defines six categories of prompt patterns including Input Semantics, Output Customization, Error Identification, Prompt Improvement, Interaction, and Context Control. Prompt patterns from two of the categories, Input Semantics and Output Customization have been applied to problems found in information modeling for the Planetary Data System (PDS).

How to cite: Hughes, J., Padams, J., Deen, R., and Joyner, R.: Prompt Patterns For PDS4 Information Modeling, Europlanet Science Congress 2024, Berlin, Germany, 8–13 Sep 2024, EPSC2024-76, https://doi.org/10.5194/epsc2024-76, 2024.

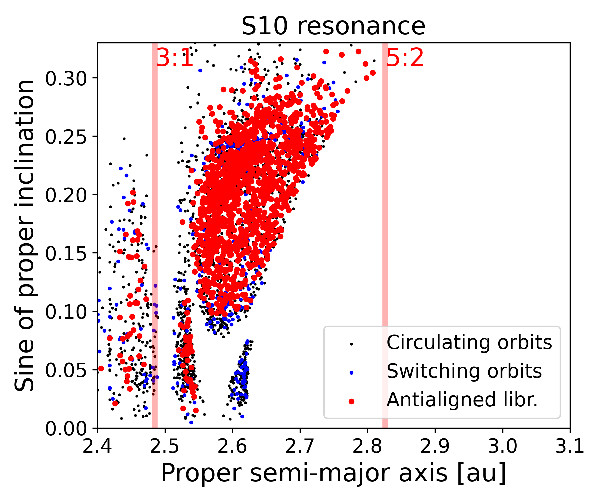

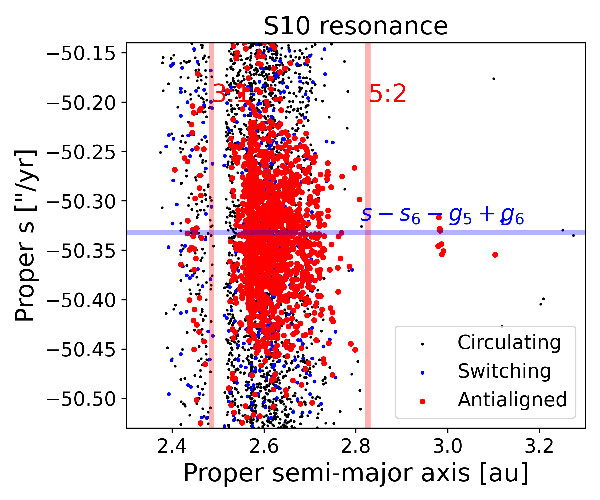

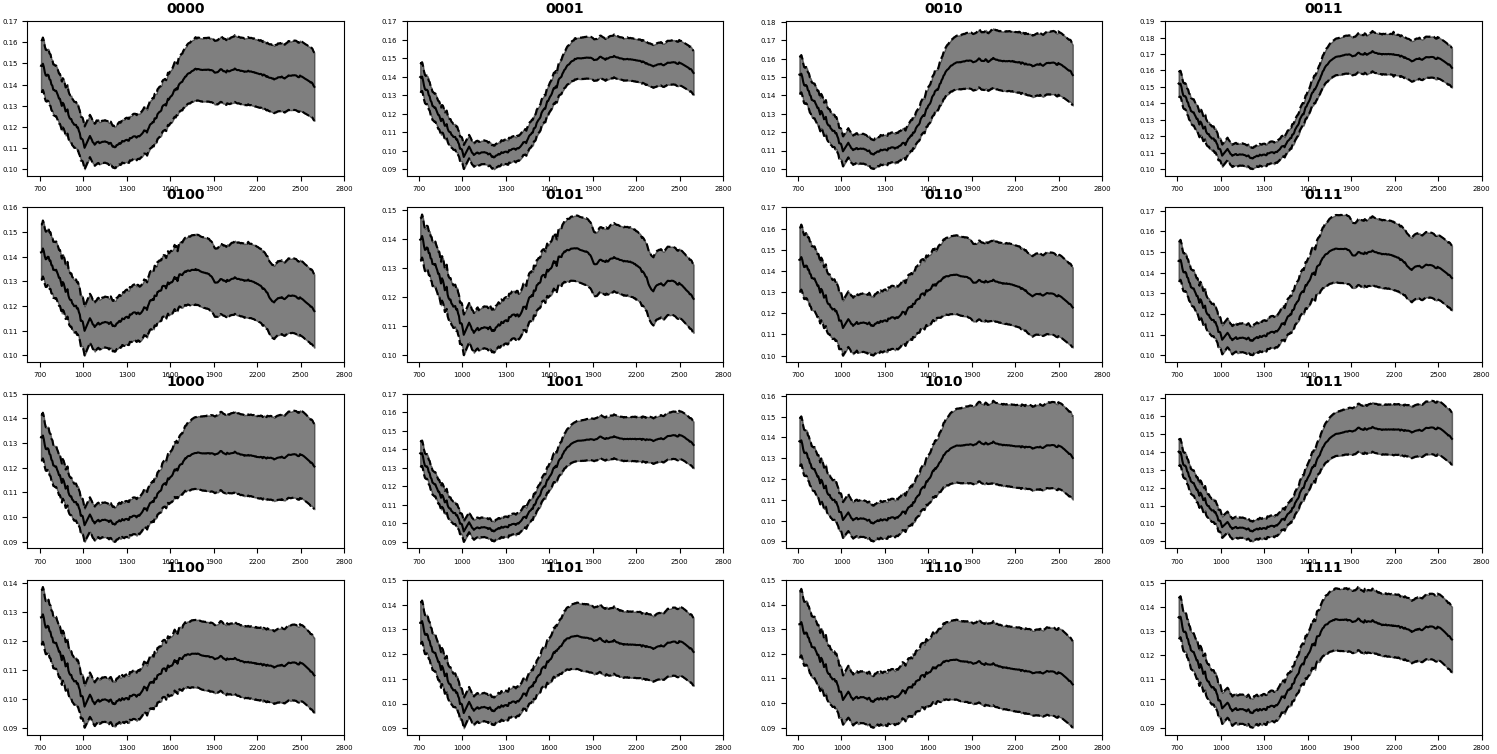

Node secular resonances, or s-type secular resonances, occur when the precession frequencies of the node of an asteroid and some planets are commensurable. They are important for changing the proper inclination of asteroids interacting with them. Traditionally, identifying an asteroid's resonant status was mostly performed by visually inspecting plots of the time series of the asteroid resonant argument to check for oscillations around an equilibrium point. More recently, deep learning methods based on convolutional neural networks (CNN) for the automatic classification of images have become more popular for these kinds of tasks, allowing for the classification of thousands of orbits in a few minutes. Convolutional layers are the main component of CNNs. They apply a set of learnable filters, or convolutional kernels, to the input tensor. Each filter is a small matrix that slides over the input tensor, performing element-wise multiplication and summing the results to produce a single value. Examples of CNN models are the Visual Geometry Group (VGG) (Simonyan & Zisserman 2014), the inception (Szegedy et al. 2015), and ResNet (He et al. 2015) models. In this work, we study 11 s-type resonances in the asteroid main belt and the Hungaria region (Knežević, 2021), and focus on the four most diffusive ones. Table (1) displays these resonances in terms of their resonant frequencies and as a combination of the arguments of linear resonances. The suffix in the frequencies identify the perturbing planet, 5 for Jupiter, 6 for Saturn, etc. The linear ν16 resonance has a resonant argument s-s6.

|

Resonance Identification |

Res. Argument (frequencies) |

Res. Argument (linear resonances) |

|

S2 |

2 · s − s4 − s6 |

ν16 + ν14 |

|

S4 |

s − 2 · s6 + s7 − g6 + g8 |

2 · ν16 − ν17 + ν6 − ν8 |

|

S10 |

s − s6 − g5 + g6 |

ν16 + ν5 − ν6 |

|

S11 |

s − s6 − 2 · g5 + 2 · g6 |

ν16 + 2 · ν5 − 2 · ν6 |

Table (1): The most diffusive s-type secular resonances in the main belt, according to this study. We report the resonant argument regarding frequencies and combinations of linear secular resonances.

We selected asteroids more likely to interact with each given resonance because of their proper s values, and, by visual inspection of their resonant argument, we identified the asteroids whose resonant argument oscillated around an equilibrium point (“librating” orbits), circulates from 0o to 360o (“circulating” orbits) and alternated phases of circulation and libration (“switching” orbits). Since we are interested in secular effects, we can apply a low-pass filter in frequency domains, like the Butterworth filter (Butterworth 1930), to allow low-frequency signals to pass through while attenuating high-frequency signals. Examples of asteroids on circulating and librating orbits without (left panels) and with the low-pass filter (right panels) are shown in Figure (1).

Figure(1): The left panels display images of the osculating resonant argument of the s-s6+g6−g5 secular resonance, while the right panels do the same for the filtered arguments.

We then applied CNN models for databases of unfiltered and filtered elements and computed their efficiency using standard machine learning metrics such as accuracy, Precision, Recall, and F1-score. Data for the S10 resonance are summarized in Table (2) and shown in Figure (2). Our results show that CNN models for filtered images are much more effective than traditional models applied to images of osculating resonant arguments.

|

Model |

Accuracy (%) |

Precision (%) |

Recall (%) |

F1-score (%) |

|

VGG (U) |

72 |

61.5 |

80 |

69.5 |

|

VGG (F) |

92 |

86.4 |

95 |

90.5 |

|

Inception (U) |

74 |

63 |

85 |

72.3 |

|

Inception (F) |

98 |

95.2 |

100 |

97.6 |

|

ResNet (U) |

62 |

51.6 |

80 |

62.7 |

|

ResNet (F) |

90 |

90 |

90 |

90 |

Table (2): Classification in terms of accuracy, precision, recall, and F1-score for the results obtained by CNN models for samples of unfiltered (U) and filtered (F) images of the S10 resonant arguments.

|

|

Figure (2): Proper (a,sin (i)) and (a,s) projections of numbered asteroids with errors in proper s < 0.2 arcsec yr−1 for bodies in the S10 resonance.

Filtered resonant arguments should be preferentially used to identify asteroids interacting with secular resonances. This work is currently under consideration by MNRAS.

References

Butterworth S., 1930, Wireless Engineer, 7, 536.

Carruba V., et al. 2021, CMDA, 133, 38.

Carruba V. et al. 2024, MNRAS, submitted.

He K., Zhang X., Ren S., Sun J., 2015, Deep Residual Learning for Image Recognition, doi:10.48550/ARXIV.1512.03385, https://arxiv.org/abs/1512.03385

Knežević Z., 2021, Serbian Academy of Sciences and Arts.

Lyapunov A. 1892, Annals of Mathematics and Mechanics, 17, 1.

Simonyan K., Zisserman A., 2014, arXiv e-prints, p. arXiv:1409.1556.

Szegedy C., et al., 2015, in Proceedings of the IEEE conference on computer vision and pattern recognition. pp 1–9.

How to cite: Carruba, V., Aljbaae, S., C. Domingos, R., Caritá, G., and Alves, A.: Deep learning classification of asteroids in s-type secular resonances, Europlanet Science Congress 2024, Berlin, Germany, 8–13 Sep 2024, EPSC2024-26, https://doi.org/10.5194/epsc2024-26, 2024.

1. Introduction

The advancement of computational techniques and methods such as artificial intelligence applied to celestial mechanics, including image classification. These techniques have been applied in the identification of mean motion resonances for asteroids (Smirnov and Markov, 2017; Carruba et al., 2021) and secular resonances for asteroids Carruba et al. (2022). The rapid growth of machine learning methods applications enables us to leverage these tools for the investigation of dynamical systems such as asteroid and planetary dynamics.

In the restricted three-body problem (R3BP), prograde orbits are those with an inclination less than 90 degrees with respect to the orbital plane of the perturber. Retrograde orbits are defined is this case with an inclination higher than 90 degrees (Morais and Namouni, 2017). These retrograde orbits can occur due to a range of astronomical events, such as collisions, close encounters and dissipation. This study aims to present a machine learning image classification method in order to search for periodic and quasi-periodic orbits, for example, retrograde orbits in the R3BP.

2. Resonances, resonant argument and perturbations

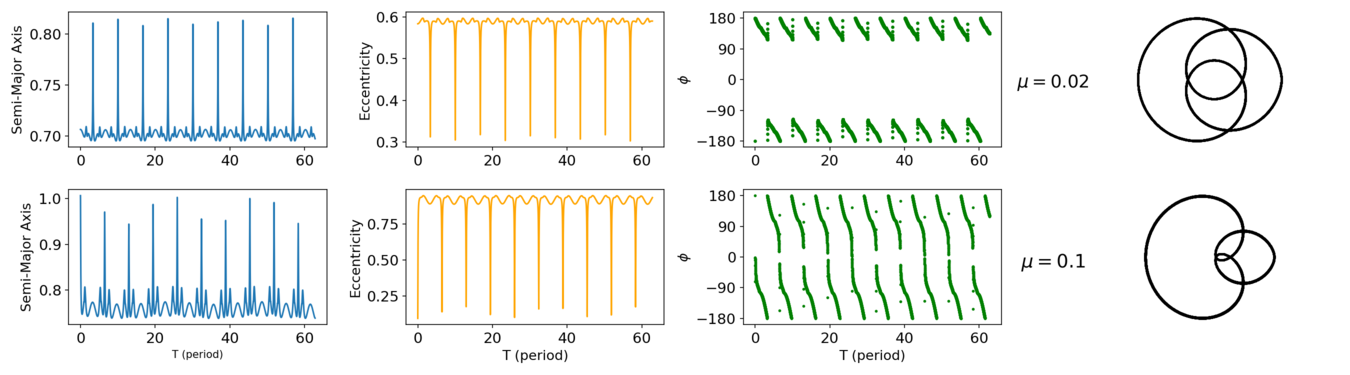

We establish the empirical case by defining μ ≤ 0.01, where μ is the mass ratio between the primaries. This threshold is determined after performing several tests involving different resonances. For values of 0.01 < μ < 0.3, there are only a few instances where the resonant argument can be reliably for identification. This observation could change based on the nature of the orbit. The chosen resonances for investigation are 1/-2, 2/-1, and 1/-1 (Morais and Namouni, 2013). The resonant argument (ϕ) in the Circular R3BP (CR3BP) is defined by ϕ = −pλp − qλ + (p + q)ϖ, where λ and λp are the respective longitudes of the massless and the secondary bodies, and ϖ represents the longitude of the pericenter of the massless body Morais and Namouni (2013). As an example we show the 2/-1 resonance presented in Figure 1, for μ = 0.02, we can still use the resonant argument as a way to identify this resonance, but we cannot determine only by the resonant argument whether it is the center of the resonant due to the amplitude. For μ = 0.1, the resonant argument cannot be analyzed for resonance identification.

Figure (1): Examples of the osculating orbital elements and resonant argument for the resonance 2/-1.

In fact, when investigating families of periodic/quasi-periodic orbits using classical approaches, we could rely on the amplitude of the resonant argument and the libration mode for identification Caritá et al. (2022). For high mass ratio cases, this analysis could lead to partial results and misinterpretation.

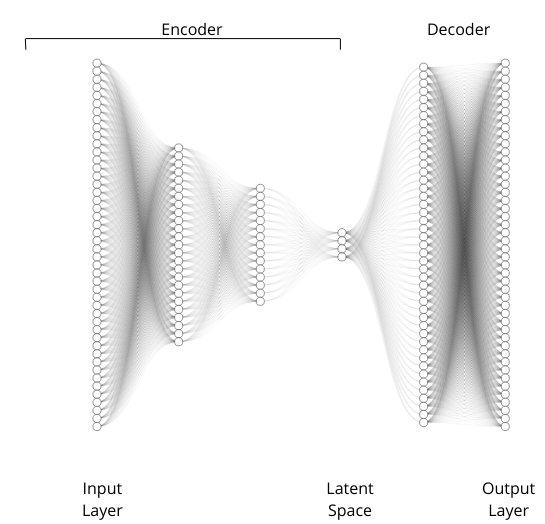

3. Convolution Neural Network Model

A Convolutional Neural Network is a type of artificial neural network commonly used for image recognition and classification, emulating human abilities. The core concept involves building convolutional layers that apply filters, followed by a non-linear function to recognize image data patterns. In this study, we aim to identify resonant families by classifying mean motion resonances using their orbits (x, y) as an image parameter.

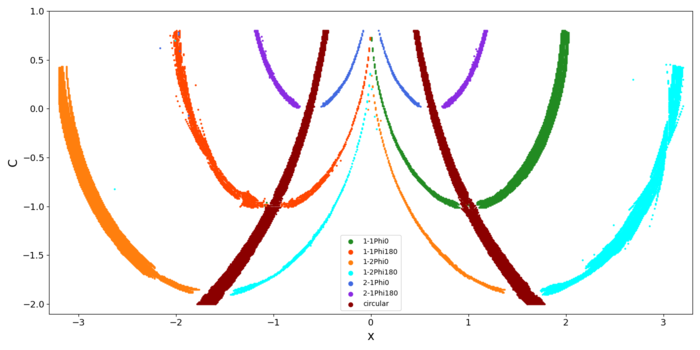

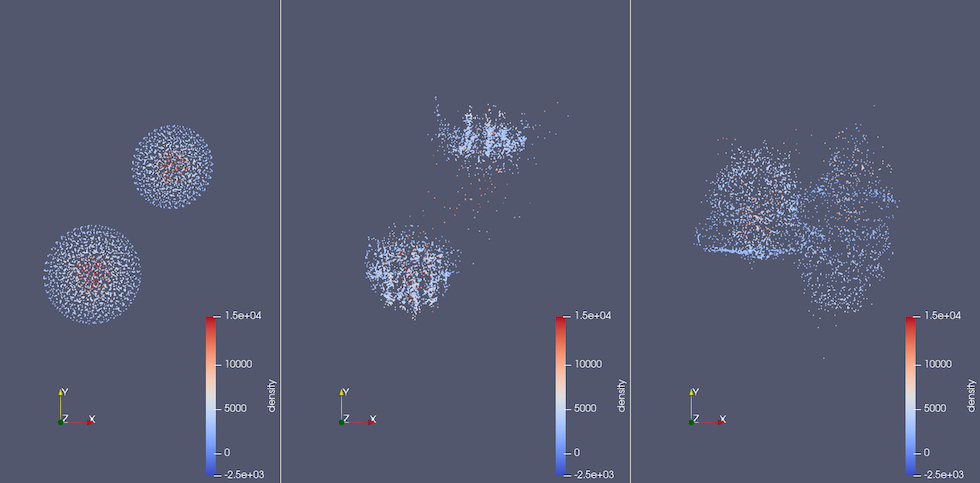

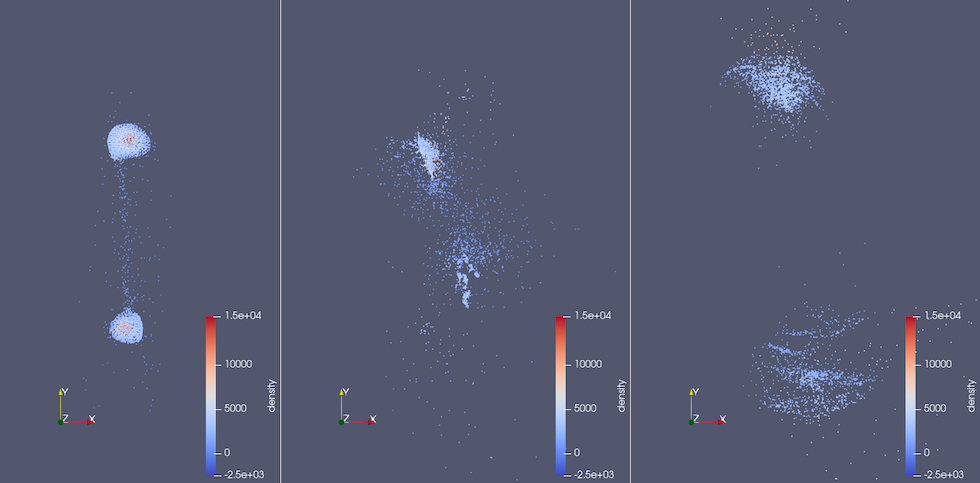

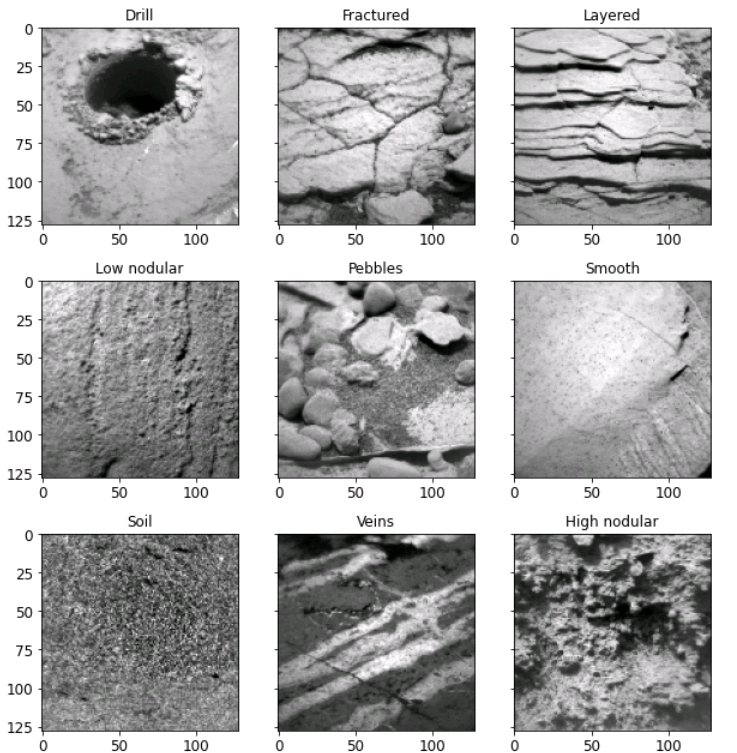

For the empirical problem of the planar CR3BP (PCR3BP) we considered the classes of the retrograde mean motion categorized as 1-1Phi0, 1-1Phi180, 1-2Phi0, 1-2Phi180, 2-1Phi0, 2-1Phi180, circular orbits and undefined. These classes represent resonances with libration at 0 or 180, and circular orbits. Figure 2 illustrates examples. Further details on the model parameters can be found in Caritá et al. (2024). As a validation benchmark, we obtained the 1/-2, 1/-1 and 2/-1 from Morais et al. (2021), Morais and Namouni (2019), and Kotoulas and Voyatzis (2020). The Figure 3 illustrates the output of the CNN prediction model successfully identifying circular, 1/-1, 1/-2, and 2/-1 resonant families.

Figure (2): Sample of the data used in the training.

Figure (3): Classification of the resonances on the right panel.

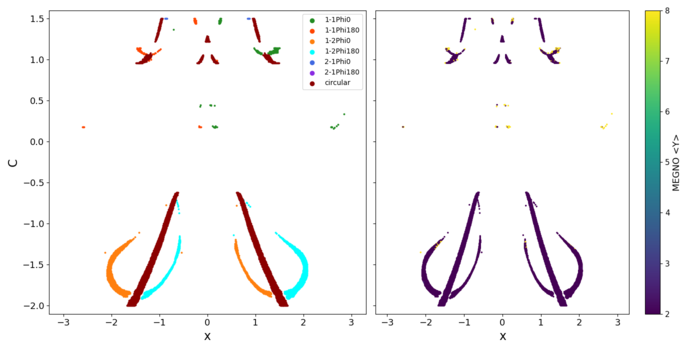

4. Image resonance classification for high mass ratios in PCR3BP

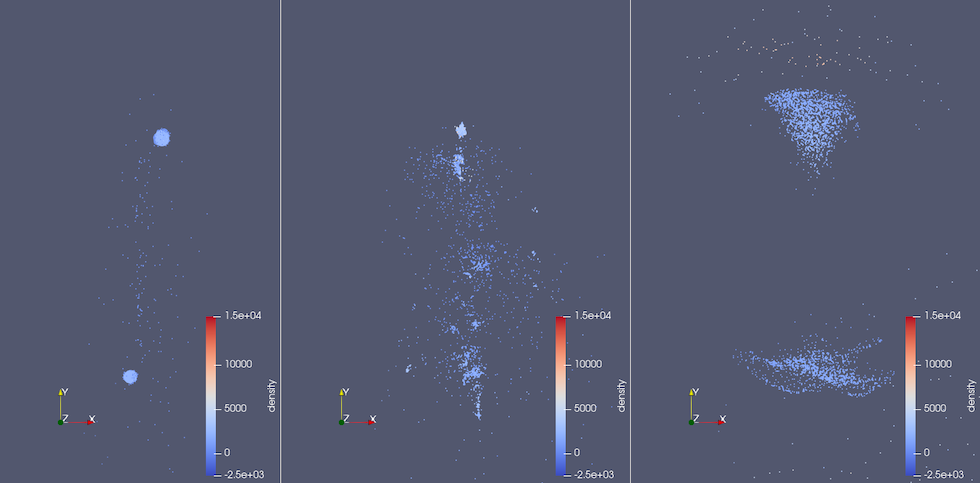

As the mass of the binary body increases beyond the range of the empirical case, identifying resonances using Keplerian orbital elements becomes challenging due to the two-body problem approximations. However, resonances still exist in the phase space of the dynamical system, and they exhibit a behavior similar to that of empirical orbits in a rotating frame as seen in (Caritá et al., 2023). In Figure 4, the left panel presents the results obtained for a grid with μ = 0.5. The right panel shows the chaotic indicator (MEGNO). We observed a few gaps between some families. The orbits are different for high mass ratios. This could imply in difficulties identifying resonant families as μ increases.

Figure (4): Classification of the resonances for the binary mass ratio of μ = 0.5.

5. Conclusions

The results obtained from the validation of our classification model demonstrate its effectiveness in accurately capturing the distinct patterns and characteristics of retrograde resonances. The model successfully identified retrograde resonances with high accuracy. The versatility of our classification model can extend beyond the constraints of the R3BP, making it adaptable to more intricate celestial environments. The model’s automated approach and efficiency offer researchers a potent tool for exploring a wide parameter space, quantifying stability, characterizing resonant behavior.

References

Smirnov, E.A., Markov, A.B.: MNRAS 469(2),2024-2031 (2017)

Carruba, V. et al, MNRAS, 504(1), 692–700 (2021)

Carruba , V. et al, MNRAS,514(4), 4803–4815 (2022)

Morais, M., Namouni, F., Nature 543(7647),635–636 (2017)

Morais, M., Namouni, F., CMDA, 117(4), 405–421 (2013)

Caritá, G.A et al MNRAS 515(2), 2280–2292 (2022)

Caritá, G.A et al CMDA 136(2), 1–24 (2024)

Morais, M., Namouni, F., CMDA, 133(5), 1-14 (2021)

Morais, M., Namouni, F., CMDA, 490(3), 3799-3805 (2019)

Kotoulas, T., Voyatzis, G., PSS, 182, 104846 (2020)

Caritá, G.A et al NODY 111(18), 17021-17035 (2023)

How to cite: Caritá, G., Aljbaae, S., Morais, M. H. M., Signor, A. C., Carruba, V., Prado, A. F. B. D. A., and Hussmann, H.: Image Classification of Retrograde Resonance in the Planar Circular Restricted Three-Body Problem, Europlanet Science Congress 2024, Berlin, Germany, 8–13 Sep 2024, EPSC2024-433, https://doi.org/10.5194/epsc2024-433, 2024.

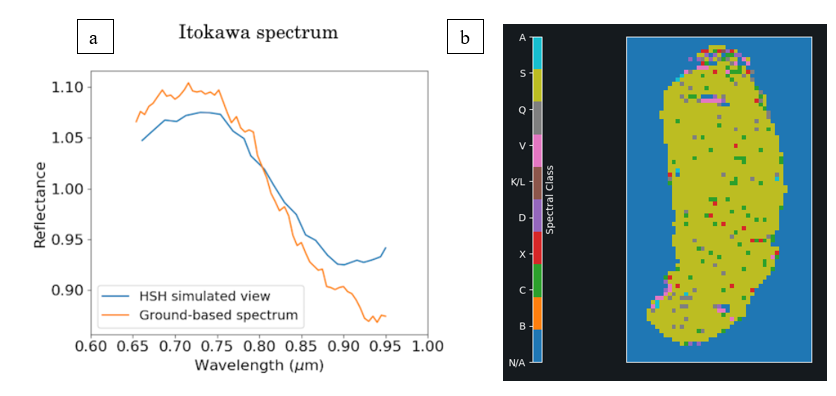

Remote-sensing spectroscopy is the most efficient observational technique to characterise the surface composition of asteroids within a reasonable timeframe. While photometry allows to characterise much fainter targets, the resolution of characteristic absorption features via spectroscopy makes this technique the method of choice for many analysis avenues. The main contributors to our spectral database of asteroids have been large surveys, such as ECAS (Eight Color Asteroid Survey, [1]), SMASS (Small Main-Belt Asteroid Spectroscopic Survey, [2]), PRIMASS (PRIMitive Asteroids Spectroscopic Survey, [3]), MITHNEOS (MIT-Hawaii Near-Earth Object Spectroscopic Survey, [4]), and ESA Gaia. A significant number of observations has further been produced by individual efforts, focusing e.g. on asteroid families (e.g. [5, 6], among many others) and particular populations (e.g. [7, 8, 9], among many others). Currently, the number of visible / near-infrared / mid-infrared remote-sensing spectra of asteroids is around 70,000. This number will increase significantly in the years to come thanks to Gaia DR4 (expected in 2026) and the SPHEREx survey (to be launched in 2025, [10]). This is a positive development for the minor body community, and it has been recognised that, as the number of spectra continues to increase, we will require more sophisticated analysis methods to make best use of it. Numerous efforts have been undertaken to exploit these datasets with modern statistical treatment, for example, to identify clusters of asteroids (taxomic classes, [11, 12, 13, 14]), to invesitage asteroid-meteorite relationships ([15, 16, 17]), and for mineralogical characterization ([18, 19]). The recent strong increase in the number of asteroids with an observed spectrum further enables the independent confirmation of results obtained with other observables with literature spectra (e.g. asteroid families from dynamical elements, [20]).

A necessary prior step for all aforementioned analyses is the collection of public spectra from survey databases for the targets under investigation. These databases include NASA’s Planetary Data System, the Centre de Données astronomiques de Strasbourg, and online repositories of Gaia, SMASS, and MITHNEOS. Achieving a complete literature look-up for any given target is therefore a tedious task, given the large number of repositories and the heterogeneous datasets, in particular when accounting for smaller repositories based on the individual observational efforts referenced above. With an increasing number of spectra, this effort will increase. Two effects are visible: (1) Authors tend to visit one or two repositories and use an incomplete subset of the available spectra of a given asteroid. This hurts the scope of the analysis and therefore the results, as it is known that asteroid spectra can be subject to intrinsic (e.g. activity, [21]) and extrinsic (e.g. phase colouring, [22]) variability. (2) Important information like the metadata (for example, the epoch of observations and therefore phase angle of target) is commonly neglected in large-scale analyses given the large effort required to extract it.

The community could therefore benefit from a data-aggregator service focused on asteroid spectra. The classy tool aims to fill this gap by directly addressing the issue of accessibility of asteroid spectra. It itself is the product of a large, dedicated effort to build and homogenise a database of asteroid spectra, in preparation for a machine-learning appication to derive an asteroid taxonomy [12]. There are two main uses cases that classy aims to solve: (1) Search for and access of asteroid spectral observations based on properties of the spectra and the targets (e.g. retrieve all NIR spectra of asteroids in the Themis family with albedos < 0.05), and (2) to classify spectra in different taxonomic schemes (Tholen [23], Bus-DeMeo [11], Mahlke [12]). In addition, classy offers important functionality for common preprocessing of spectral data: truncating, interpolating, smoothing, and feature parameterisation. These preprocessing parameters are stored in a database that can be shared among users, supporting consistent data treatment among collaboration members and the publishing of reproducible results. Users can further ingest private observations into their local classy database to work in unison with public data. classy is connected to numerous online repositories of asteroid spectra, enabling to retrieve and query among approximately 70,000 spectra. A large effort was spent to extract the observational metadata from articles to allow studies to make use of the epoch of observation and the phase angle of the target. classy is a python package with a command-line interface and a web interface.1 It provides the important connection between repositories of spectra and users, greatly facilitating machine learning and other data-driven projects. It is actively developed, with a focus on stability and regarding the number of spectra that can be retrieved. Documentation is available online.2

1 https://classy.streamlit.app/

2 https://classy.readthedocs.io/

Acknowledgments: Without observations, there is not much to do. I thank all observers who choose to make their data available to the community.

Dr Benoit Carry provided important contributions to initial development of the classy database, in preparation of Mahlke et al. 2022.

References:

[1] Zellner, B. et al. (1985), Icarus, 61, 355-416

[2] Xu, S. et al. (1995), Icarus, 115, 1-35

[3] Pinilla-Alonso, N. et al. (2022), DPS, #504.09

[4] Binzel, R. et al. (2019), Icarus 324, 41-76

[5] Mothé-Diniz, T. and Carvano, J. M. (2005), A&A, 442, 727-729

[6] Marsset, M. et al. (2016), A&A, 586, A15

[7] Clark, B. E. et al. (2004), AAS, 128, 3070-3081

[8] de León, J. et al. (2012), Icarus, 218, 196-206

[9] Devogèle, M. et al. (2018), Icarus, 304, 31-57

[10] Ivezic, Z. et al. (2022), Icarus, 371, 114696

[11] DeMeo, F. et al. (2009), Icarus, 202, 160-180

[12] Mahlke, M. et al. (2022), A&A, 665, A26

[13] Penttilä, A. et al. (2022), A&A, 649, A46

[14] Klimczak, H. et al. (2022), A&A, 667, A10

[15] DeMeo, F. et al. (2022), Icarus, 380, 114971

[16] Mahlke, M. et al. (2023), A&A, 676, A94

[17] Galinier, M. et al. (2023), A&A, 671, A40

[18] Oszkiewicz, D. et al. (2022), MNRAS, 519, 2917-2928

[19] Korda, D. et al. (2023), A&A, 669, A101

[20] Carruba, V. et al. (2024), MNRAS, 528, 796-814

[21] Marsset, M. et al. (2019), ApJ Letters, 882, L2

[22] Alvarez-Candal, A. et al. (2024), A&A, 685, A29

[23] Tholen, D. (1984), PhD Thesis, MIT

How to cite: Mahlke, M.: Simplified access of asteroid spectral data and metadata using classy, Europlanet Science Congress 2024, Berlin, Germany, 8–13 Sep 2024, EPSC2024-172, https://doi.org/10.5194/epsc2024-172, 2024.

The aim of this work is to classify co-orbital motion at different timescales using a machine learning approach. Asteroids that move, on average, in a 1:1 mean motion resonance with a given planet, under the assumptions of the restricted three-body problem, are of special interest, because they follow very stable orbital configurations. The capability of classifying this kind of dynamics in an automatic way is of paramount importance to understand key mechanisms in the solar system but also for space exploration and exploitation missions. Starting from the analysis of Tadpole, Quasi-Satellite and Horseshoe regimes on a medium timescale, we apply features extraction and machine learning algorithms to the timeseries of the relative angle between the asteroid and the planet. The dataset is composed of pseudo-real timeseries computed by means of the JPL Horizons system (thus considering a full dynamical model) and simulated data computed by means of the REBOUND software. In a second step, the process is enriched to also be able to classify relative transitions between different co-orbital motions and between resonant and non-resonant dynamics, thus also considering much longer timescales. To this end, different algorithms are evaluated, starting from classical statistical approaches to more advanced deep learning ones.

How to cite: Azevedo, T., Ciacci, G., Di Ruzza, S., Barucci, A., and Alessi, E. M.: Towards a machine learning classification model of asteroids' co-orbital regimes, Europlanet Science Congress 2024, Berlin, Germany, 8–13 Sep 2024, EPSC2024-274, https://doi.org/10.5194/epsc2024-274, 2024.

In this session, we will discuss the use of Machine Learning methods for the identification and localization of cometary activity for Solar System objects in ground and in space-based wide-field all-sky surveys. We will begin the chapter by discussing the challenges of identifying known and unknown active, extended Solar System objects in the presence of stellar-type sources and the application of classical pre-ML identification techniques and their limitations. We will then transition to the discussion of implementing ML techniques to address the challenge of extended object identification. We will finish with prospective future methods and the application to future surveys such as the Vera C. Rubin Observatory.

How to cite: Bolin, B.: Identification and Localization of Cometary Activityin Solar System Objects with Machine Learning, Europlanet Science Congress 2024, Berlin, Germany, 8–13 Sep 2024, EPSC2024-136, https://doi.org/10.5194/epsc2024-136, 2024.

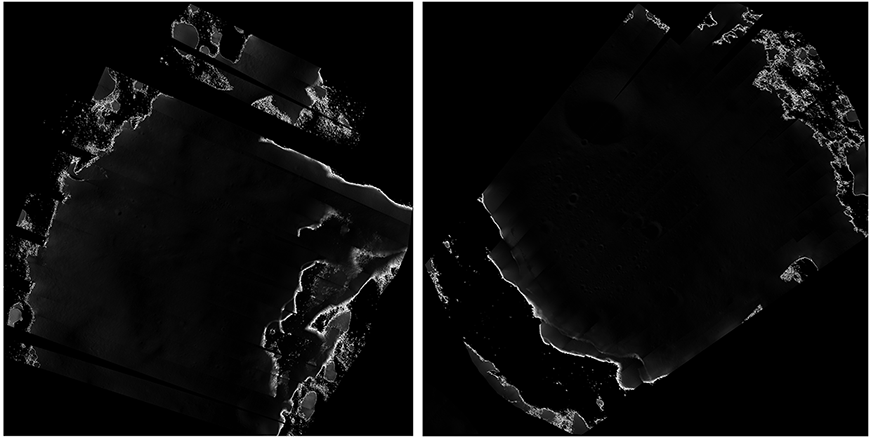

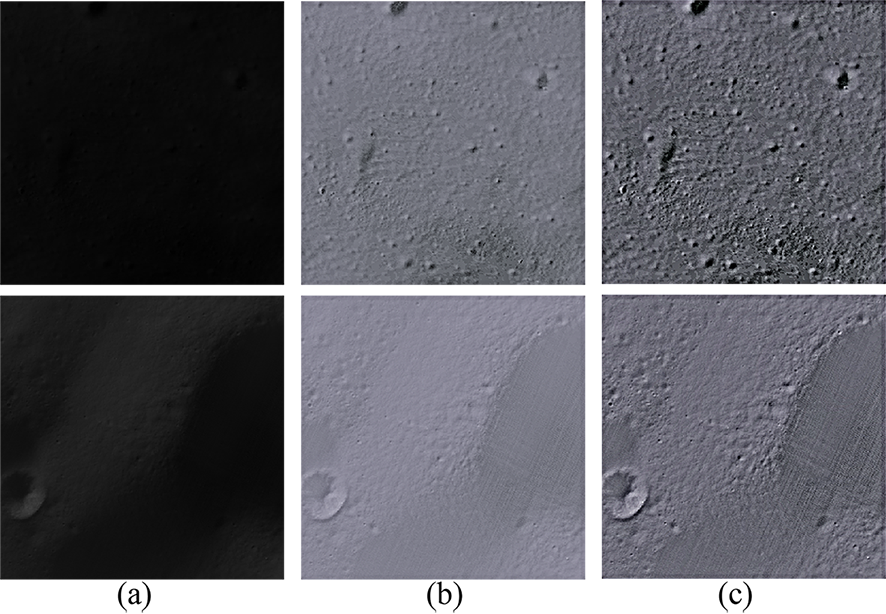

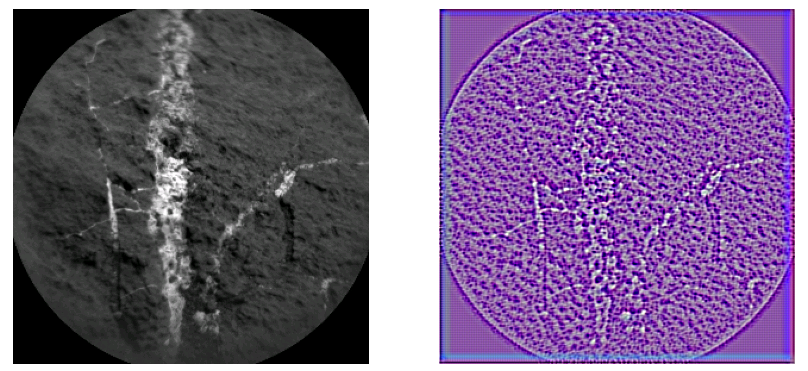

The moon is the earth's only natural satellite and it has a huge impact on the earth. Many countries have carried out a large number of lunar exploration missions over the years. The Lunar Reconnaissance Orbiter (LRO) launched by the United States in 2009 carried seven scientific instrument payloads to comprehensively map the lunar terrain and explore lunar resources. The optical narrow-angle camera (NAC) captures, processes and transmits back a large amount of image data [1]. These optical image data are intuitive data for studying the moon. Due to the axial tilt and undulating terrain, there are many areas (i.e., permanently shadowed regions (PSRs)) near the lunar south pole and north pole that are never illuminated by sunlight, so the imaging quality of these areas is poor. As shown in Fig. 1, PSR images have low signal-to-noise ratio (SNR), poor visibility, and most of the content is obscured. However, PSR may contain important materials such as water-ice, which makes it critical to study PSR with images. How to use low-light image enhancement methods to obtain high-quality PSR images has become a major challenge in this field.

Fig. 1. Optical image examples of the lunar south pole PSR. These images were captured by LRO NAC and stitched together in post-processing.

Image enhancement is an important task in computer vision, which aims to design algorithms to improve the interpretability of images. Image enhancement in the past was mainly divided into traditional methods and deep learning methods. Traditional methods represented by histogram normalization (HE)[2] and dehazing model theory[3] are limited in enhancement range and physical assumptions, and have low automation capabilities. Deep learning methods are divided into supervised learning methods, GAN-based methods[4] and zero-shot learning methods. Supervised learning relies on pre-made paired datasets consisting of original and optimized images. However, for PSR images, there is no standard optimized image, so supervised learning methods are not suitable for PSR image enhancement. Although GAN-based methods get rid of the restrictions on paired datasets, they still need to select data to meet the needs of the generator and discriminator, which makes the process more cumbersome. The zero-shot learning method, which does not rely on any data and can directly enhance images, is suitable for the enhancement of scarce data such as PSR images. An important category of zero-shot learning methods is the decomposition of low-quality images derived from Retinex decomposition theory. Regarding an image G(x), Retinex theory believes that it can be divided into a combination of reflection map and illumination map, that is:

G(x) = R(x)•I(x)

where x is the pixel position of the image, R and I represent the reflection map and illumination map respectively. For low-light images such as PSR images, I(x) is too dark, and R, which represents image features, should also be adjusted. By adjusting these sub-maps, the Retinex-based method can achieve image enhancement.

However, traditional Retinex only considers the content and brightness of the image (i.e., reflectance map and illumination map), but does not consider image noise. Low-light images such as PSR have a low number of photons transmitted to the CCD during imaging, resulting in dim visual phenomena. But low photons not only reduce the brightness of the image, but also introduce a lot of noise, which further reduces the quality of the image. The traditional Retinex method will expand the original noise while adjusting the brightness, which hinders image content analysis. To this end, we propose a Retinex-based model RZSL-PSR that introduces noise estimation. This model decomposes the PSR image into reflection map, illumination map and noise map. The specific formula is:

G(x) = R(x)•I(x) + N(x)

Based on this formula, the model uses the proposed sub-map learning network to output three sub-maps respectively. The network structure includes downsampling, concatenation fusion, and skip connection structures, which helps to obtain feature maps that integrate multi-scale, strong semantic information, and high-resolution information.

Subsequently, the three output sub-maps are optimized to obtain the final output enhanced image. This process does not rely on learning image mappings of paired datasets, but instead utilizes a non-reference loss function to supervise model training. The loss function is as follows:

L = Lr + a•Lt + b•Ln

where Lr is the loss function to ensure that the output image conforms to the Retinex decomposition theory, Lt is the loss function to flatten the illumination uniformity of the image, and Ln is the loss function to estimate and suppress the noise in the dark area. Guided by these loss functions, the model learns nonlinear function mappings for enhancing PSR images, including increasing brightness and removing noise.

As shown in Fig. 2, the enhanced PSR image has better visibility than the original image. However, in order to highlight the landforms within the area for research on landforms and materials, we use USM sharpening for processing. The sharpening map results in Fig. 2 show that the landforms inside the PSR are highlighted, which helps to count the internal landforms.

Fig. 2. PSR’s (original image)-(enhanced image)-(sharpened image) comparison example. Among them (a) is the input PSR image, (b) is the enhanced

image, and (c) is the image after USM sharpening of the enhanced image.

Acknowledgements

References

[1] Vondrak R, Keller J, Chin G, et al. Lunar Reconnaissance Orbiter (LRO): Observations for lunar exploration and science[J]. Space science reviews, 2010, 150: 7-22.

[2] Pisano E D, Zong S, Hemminger B M, et al. Contrast limited adaptive histogram equalization image processing to improve the detection of simulated spiculations in dense mammograms[J]. Journal of Digital imaging, 1998, 11: 193-200.

[3] Dong X, Pang Y, Wen J. Fast efficient algorithm for enhancement of low lightingvideo[M]//ACM SIGGRApH 2010 posters. 2010: 1-1.

[4] Meng Y, Kong D, Zhu Z, et al. From night to day: GANs based low quality image enhancement[J]. Neural Processing Letters, 2019, 50: 799-814.

How to cite: Zhang, F., Ye, M., Hao, W., Chen, Y., and Li, F.: A Retinex-based zero-shot learning method for permanently shadowed regions’ image enhancement, Europlanet Science Congress 2024, Berlin, Germany, 8–13 Sep 2024, EPSC2024-60, https://doi.org/10.5194/epsc2024-60, 2024.

From Pixels to Craters: Advancing Planetary Surface Analysis with Deep Learning.

La Grassa, R., Martellato, E., Re, C., Tullo, A., Vergara Sassarini, N.A. and Cremonese, G.

National Institute for Astrophysics (INAF), 35100 Padua, Italy

Introduction:

Over time, space missions with dedicated instruments for remote sensing allowed us to realize more and more accurate crater catalogs. Such catalogs are important tools for surface analysis. Given impact been collected continuously with time, they permit for instance to compare the different evolution of regions. In particular, when their size-frequency distribution is evaluated in conjunction with chronological models, craters allow datation of planetary surfaces, and provide insights into its geological evolution. In addition, when available both diameter and depth values of craters, the statistical analysis of structures can also provide geomechanical properties of the surface.

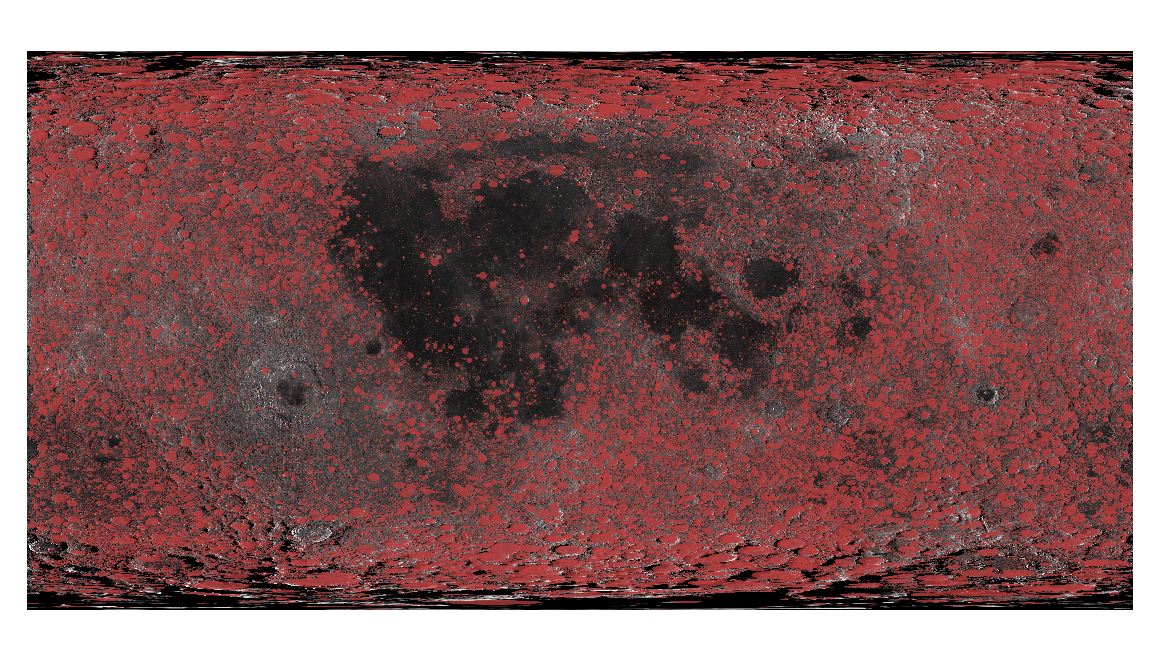

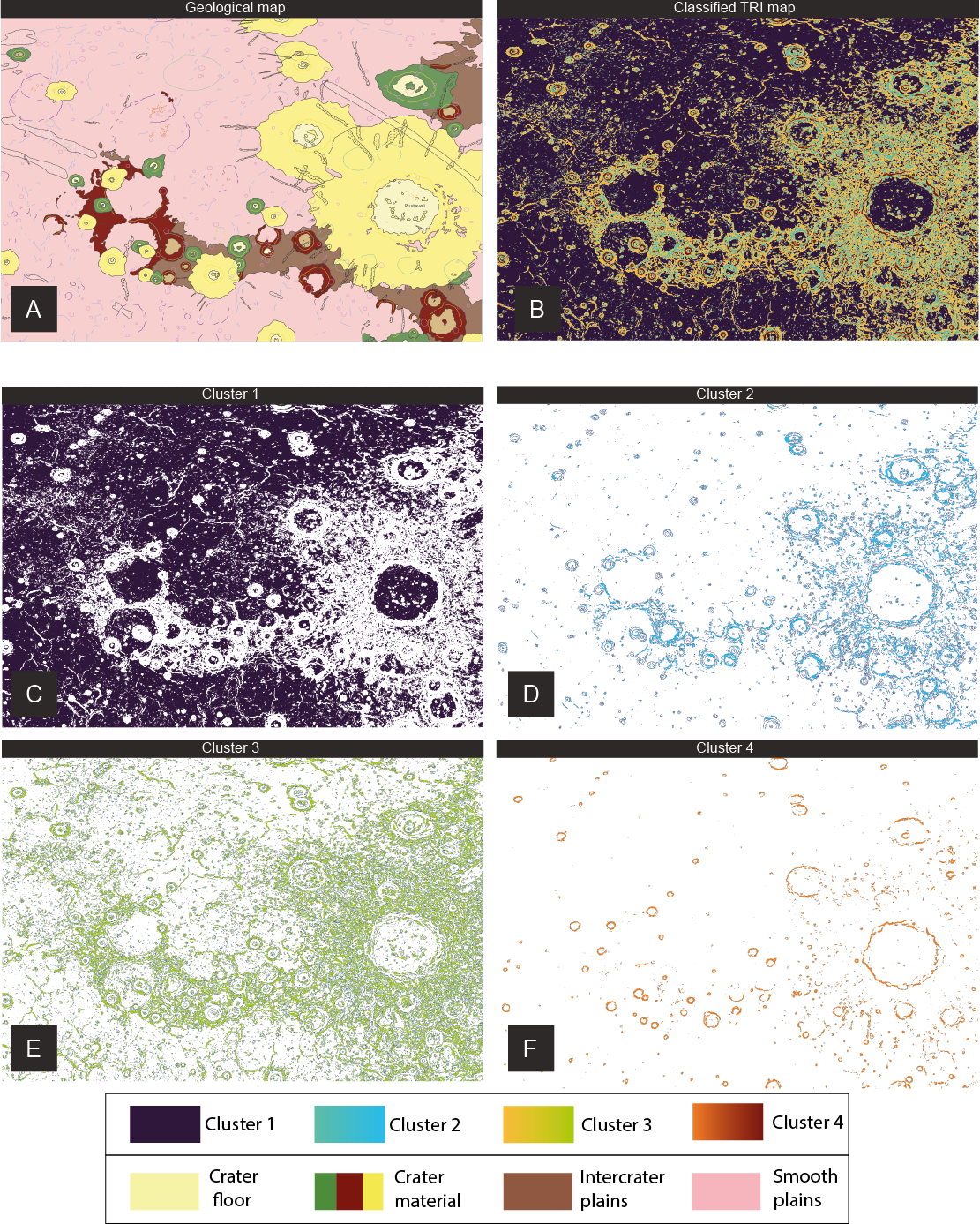

In this study, we present a comprehensive catalog of lunar impact craters, augmented by advancements in deep learning technology. This catalog is the final output of an articulated workflow that includes image pre-processing steps, application of an advanced double-architected neural network model, and final nested post-processing. This catalog not only extends the spatial resolution and coverage of existing databases (e.g., [3]), but also introduces a paradigm shift by including craters as small as 0.4 km in diameter. Moreover, the methodologies developed herein pave the way for the application of similar approaches to other celestial bodies, including for instance Mercury and the Ceres dwarf planet.

Catalog Overview:

Our newly compiled catalog comprises approximately 5 million lunar impact craters (Fig. 2). Thanks to the implementation of a super-resolution algorithm, we were able to expand the lunar crater statistics down to 1 km in diameter, with nearly 69.3% of craters exhibiting diameters lower than 1 km. Furthermore, the impact craters detected in the 1-5 km and 5-100 km ranges are about 28.7% and 1.9% of the total catalog, respectively. Fig 2 shows the distribution of the extracted craters over the global basemap. The results in terms of Recall are very promising, as reported in Table 1, with performances varying with latitude.

|

|

|

Table 1: Number of tiles, Instances and Recall metric extracted by YOLOLens and different latitude degrees using Robbins Ground-Truth as main labels. |

Methodology and Validation:

Our approach exploits a custom neural network, refers as YOLOLens [1], which combines the principles of super-resolution with the state-of-the-art YOLO (You Only Look Once) object detection model [2]. This fusion of technologies enables the integration of high-resolution image processing with precise crater detection, yielding a comprehensive end-to-end solution for lunar crater cataloging. The methodologies developed for lunar crater detection are ready for adaptation and application to other celestial bodies, such as Mercury and the Ceres dwarf planet, expanding our understanding of impact cratering processes across the solar system.

To construct the YOLOLens model, we first enhanced the spatial resolution of lunar imagery, increasing the amount of detail available for crater identification. Subsequently, we integrated this enhanced imagery into the YOLO framework, allowing the localization of craters across the lunar surface. This innovative approach not only facilitated the detection of craters down to sizes as small as 0.4 km, but also ensured the accuracy and efficiency of the crater identification process.

Following the development of the YOLOLens model, extensive validation was conducted to assess its performance against established global crater catalogs. Through accurate comparisons and statistical analyses, we established a consistent crater size-frequency distribution for craters equal to or greater than 1 km, validating the efficacy of our methodology.

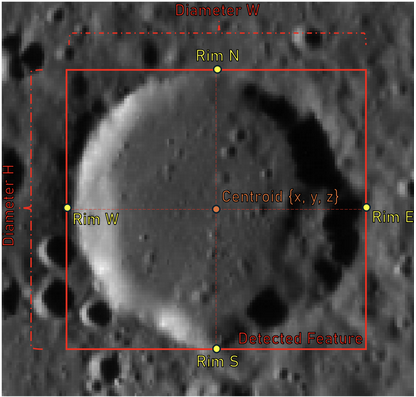

Elevation Data and Statistical Formulations:

One additional characteristic of our catalog is that it provides not only the crater size and position, but also the elevation of the crater rims and center, facilitating a comprehensive analysis of lunar topography (Fig. 1). Moreover, we employ statistical formulations to extract essential crater parameters depth/diameter (d/D). The depth/diameter ratio, a fundamental measure in crater studies, holds particular importance in understanding the geological processes that shaped planetary surfaces. This ratio is a key indicator of crater formation mechanisms, impact energy, and surface properties, providing invaluable insights into planetary evolution. By incorporating elevation data and rigorous statistical analyses into our catalog, we empower researchers to improve the investigations on the lunar cratering processes.

This comprehensive approach not only enhances our understanding of lunar geology but also lays the groundwork for comparative studies across different planetary bodies. As such, the opportunity to provide elevation information and crater morphometric parameters underscores the comprehensive nature of our catalog and its significance in advancing planetary science research.

|

Figure 1: schematic representation of the 4 elevation rim extract by post-processing over the Lunar DTM using the bounding box predicted by YoloLens. The d/D measure and all elevations are introduced into the final catalog. |

|

Figure 2: Overview of the global catalog LU5M812TGT of ≥0.4 km lunar craters, highlighting craters with red circles on LROC WAC Global Mosaic |

Conclusion:

Through the integration of cutting-edge deep learning techniques and validation procedures, we constructed a complete global lunar catalog that offers an important resource for the understanding of lunar geology. This catalog stands to have an interdisciplinary collaboration and technological innovation in space exploration. Additionally, adapting our methodologies to other celestial bodies can further expand our knowledge of impact cratering processes and planetary evolution beyond the Moon.

References:

[1] La Grassa, R., Cremonese, G., Gallo, I., Re, C. and Martellato, E., 2023. YOLOLens: A deep learning model based on super-resolution to enhance the crater detection of the planetary surfaces. Remote Sensing.

[2] Redmon, J., Divvala, S., Girshick, R. and Farhadi, A., 2016. You only look once: Unified, real-time object detection. Computer vision and pattern recognition.

[3] Robbins, S.J., 2019. A new global database of lunar impact craters> 1–2 km: 1. Crater locations and sizes, comparisons with published databases, and global analysis. Journal of Geophysical Research.

Acknowledgements: we gratefully acknowledge funding from the Italian Space Agency (ASI) under ASI-INAF agreement 2017-47-H.0

How to cite: La Grassa, R., Martellato, E., Re, C., Tullo, A., Amanda, V. S., and Cremonese, G.: From Pixels to Craters: Advancing Planetary Surface Analysis with Deep Learning., Europlanet Science Congress 2024, Berlin, Germany, 8–13 Sep 2024, EPSC2024-390, https://doi.org/10.5194/epsc2024-390, 2024.

Introduction: Understanding the distribution and characteristics of impact craters on planetary surfaces is essential if we want to understand the geological processes such as tectonic deformation, volcanism1, erosion, transport, and impact cratering itself2 which constantly rebuilt planetary surfaces. By analysing the distribution and the density of crater using the "crater counting" approach, it is possible to estimate the age of the planetary surface3 at regional scale. Historically, crater detection has been made manually. For Mars and the Moon, the existing handmade databases4,5,6 brings an inestimable value to the community. Nevertheless, even with corrective analysis7, manual databases are subject to human limitations. Indeed, studies have shown that human attention to repetitive tasks, such as crater counting, rapidly decreases after 30 minutes8and then errors started to occur. Several machine learning and AI-based approaches have been proposed to automatically detect craters on planetary surface images9,10,11.

Data: To train our machine learning algorithm, we basically need two kind of data. We need images for the detection and a global crater database which encompass the ground truth. In this work we took images from the context camera on board of the Mars Reconnaissance Orbiter (CTX) and preprocess by the Bruce Murray Laboratory of Caltech12. For the crater database we took A. Lagain’s global martian database which encompass more than 376000 craters of a size bigger than 1 km in diameter7.

Method: In this work, we presents a novel approach using the Faster Region-based Convolutional Neural Network (Faster R-CNN) for automatic crater detection13. As shown in Fig. 1, Faster R-CNN employs three stages: CNN backbone extracts features, RPN generates ROIs with anchor boxes predicting object presence and adjustments, and Detector refines object classification and bounding box coordinates, ensuring precise object detection and localization in images.

Fig. 1 : Faster R-CNN architecture as describe by S.Ren & al, 201613 with our image configuration

The proposed method involves a preprocessing step in which we cut the images to a size of 224x224 pixels, we reproject the images in order to be sure that crater will always have a circular shape at ever latitude and we split the crater database to have a ground truth label for each image. Then, we train our model with 82874 images and we test our detector on 4828 images.

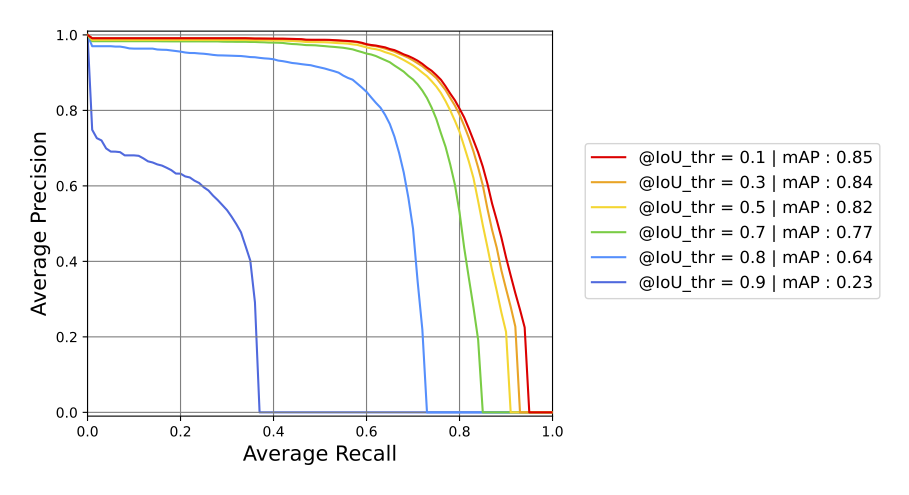

Results: Extensive experiments on high-resolution planetary imagery demonstrate excellent performances with a mean average precision mAP50 > 0.82 with an intersection over union criterion IoU ≥ 0.5, independent of crater scale (Fig.2 & 3).

Fig. 2 : Precision as a function of recall for six different IoU thresholds.

Fig. 3 : Precision vs Recall curves for an IoU = 0.5 and for different bounding box sizes of the test dataset.

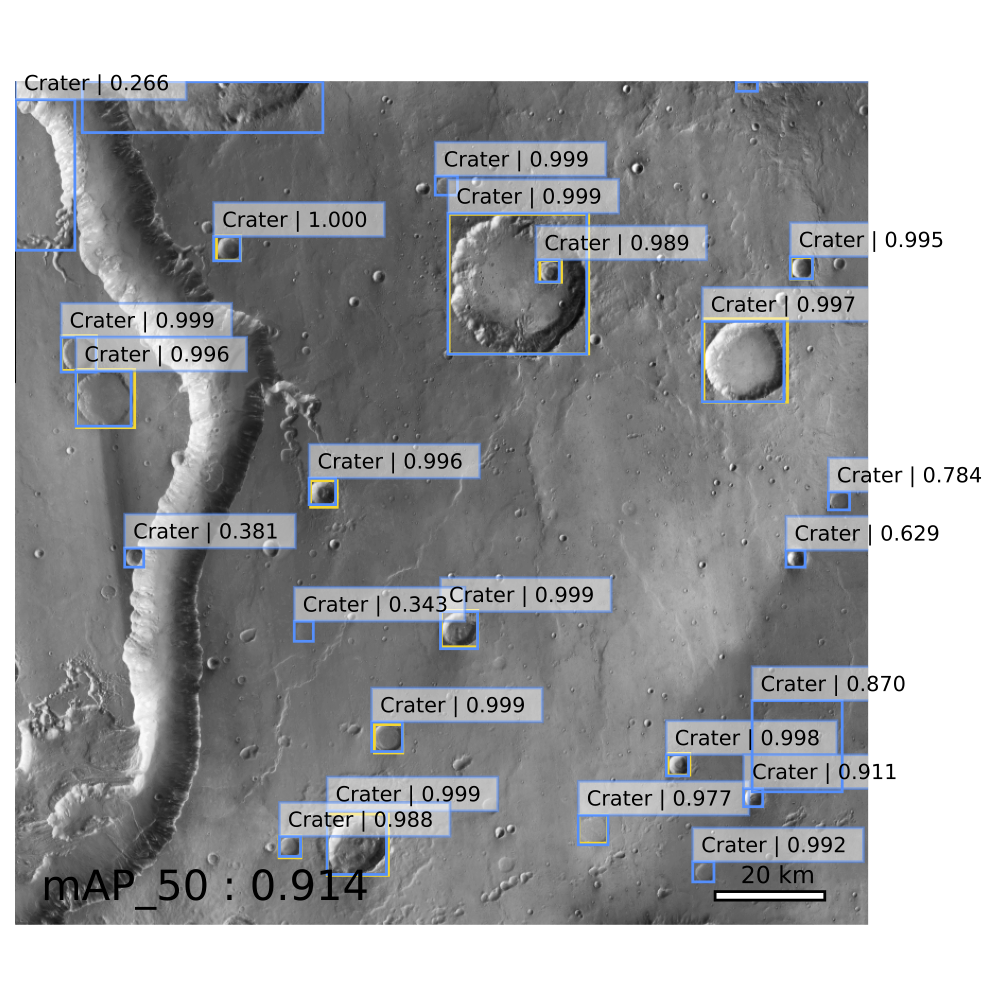

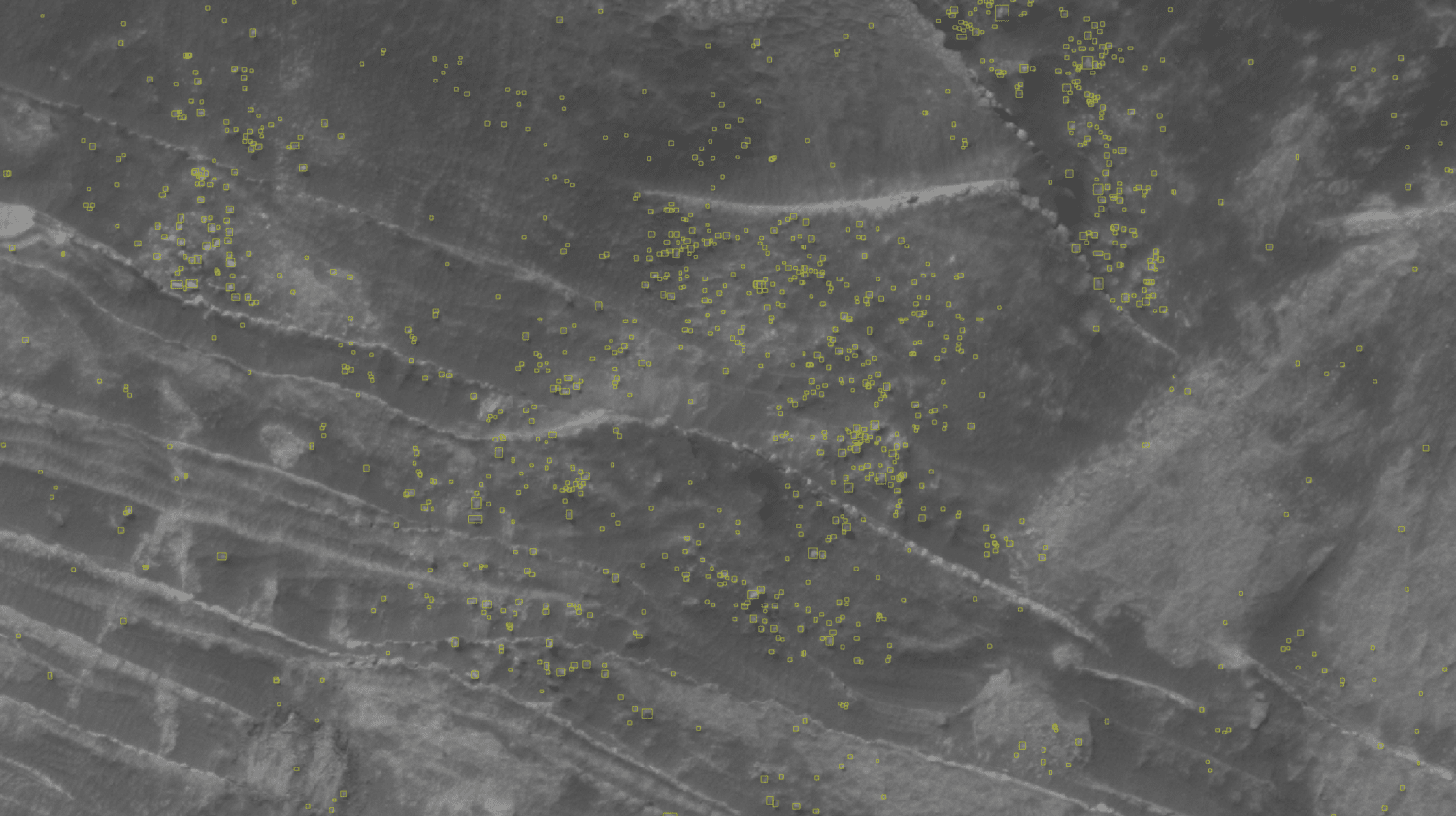

The Fig. 4 show an example on an equatorial Mars quadrangle.

Fig. 4 : Inference on an equatorial region of Mars. The lower right corner of this 4°×4°

quadrangle is located at 44°W, 0°N. The yellow boxes represent the ground truth infor-

mation and the blue boxes the predictions ones. Please

note that crater smaller than 10 pixels in diameter are ignored.

The results also highlight the versatility and potential of our robust model for automating the analysis of craters across different celestial bodies. The automatic crater detection tool holds great promise for future scientific research of space exploration missions.

References:

[1] : M. H. Carr, Volcanism on mars 78 (20) 4049–4062. doi:10.1029/ jb078i020p04049.

[2] : Hartmann, William K., and Gerhard Neukum. "Cratering chronology and the evolution of Mars." Chronology and Evolution of Mars: Proceedings of an ISSI Workshop, 10–14 April 2000, Bern, Switzerland. Springer Netherlands, 2001.

[3] : G. Neukum, B. A. Ivanov, W. K. Hartmann, Cratering records in the in- ner solar system in relation to the lunar reference system, in: Chronology and Evolution of Mars: Proceedings of an ISSI Workshop, 10–14 April 2000, Bern, Switzerland, Springer, 2001, pp. 55–86.

[4] : Head III, James W., et al. "Global distribution of large lunar craters: Implications for resurfacing and impactor populations." science 329.5998 (2010): 1504-1507.

[5] : S. J. Robbins, A new global database of lunar impact craters¿ 1–2 km: 1. crater locations and sizes, comparisons with published databases, and global analysis, Journal of Geophysical Research: Planets 124 (4) (2019) 871–892

[6] : S. J. Robbins, B. M. Hynek, A new global database of mars impact craters ≥ 1 km: 1. database creation, properties, and parameters, Jour- nal of Geophysical Research: Planets 117 (E5) (2012).

[7] : A. Lagain, S. Bouley, D. Baratoux, C. Marmo, F. Costard, O. De- laa, A. P. Rossi, M. Minin, G. Benedix, M. Ciocco, et al., Mars crater database: A participative project for the classification of the morpho- logical characteristics of large martian craters, geoscienceworld (2021).

[8] : J. E. See, S. R. Howe, J. S. Warm, W. N. Dember, Meta-analysis of the sensitivity decrement in vigilance., Psychological bulletin 117 (2) (1995) 230.

[9] : Salamuniccar, Goran, and Sven Loncaric. "Method for crater detection from martian digital topography data using gradient value/orientation, morphometry, vote analysis, slip tuning, and calibration." IEEE transactions on Geoscience and Remote Sensing 48.5 (2010): 2317-2329.

[10] : G. Benedix, A. Lagain, K. Chai, S. Meka, S. Anderson, C. Norman, P. Bland, J. Paxman, M. Towner, T. Tan, Deriving surface ages on mars using automated crater counting, Earth and Space Science 7 (3) (2020) e2019EA001005.

[11] : R. La Grassa, G. Cremonese, I. Gallo, C. Re, E. Martellato, YOLOLens: A deep learning model based on super-resolution to enhance the crater detection of the planetary surfaces, Remote Sensing 15 (5) (2023) 1171. doi:10.3390/rs15051171.

[12] : Dickson, L.A.Kerber, C.I.Fassett, B.L.Ehlmann, A global, blended ctx mosaic of mars with vectorized seam mapping: a new mosaicking pipeline using principles of non-destructive image editing., 49th Lunar and Planetary Science Conference (2018).

[13] : S. Ren, K. He, R. Girshick, J. Sun, Faster r-cnn: Towards real-time object detection with region proposal networks (2016). arXiv:1506. 01497.

How to cite: Martinez, L., Schmidt, F., Andrieu, F., Bentley, M., and Talbot, H.: Automatic crater detection and classification using Faster R-CNN, Europlanet Science Congress 2024, Berlin, Germany, 8–13 Sep 2024, EPSC2024-1005, https://doi.org/10.5194/epsc2024-1005, 2024.

Introduction

Impact craters on the lunar surface are surrounded by boulders, rock fragments larger than 25.6 cm [1], which formed during the ejecta emplacement. These boulders are exposed on the surface and thus subject to meteoroid bombardment leading to two main erosional effects: abrasion and shattering [2]. Their abundance decreases with time producing regolith. By analyzing boulder fields, key information on regolith formation and evolution, as well as information about the subsurface rockiness of geologic units exposed by rock-excavating craters can be derived.

For this, global information of the distribution and size of boulders around impact craters is crucial. Currently, the only available global rock abundance map was derived with NASA’s Lunar Reconnaissance Orbiter (LRO) Diviner instrument [3] with an effective resolution of down to 330 m [4] but the dataset does not contain information about individual boulders. LRO Narrow Angle Camera (NAC) [5] images with resolutions of down to 0.5 m cover almost the entire lunar surface and are thus the best currently available source to map individual boulders on a global scale. For the vast majority of the more than 3 million available images, boulders have not been mapped. Compared to manual boulder counting, automated mapping of boulders allows for a substantially faster and reproducible analysis [e.g., 6-9].

Methods

Because of its great success in computer vision problems in various disciplines, we chose a deep learning approach for our boulder detection algorithm. Moreover, previous studies showed the feasibility to use deep learning for global mapping of the lunar surface [e.g., 10, 11]. Recently, we presented our boulder segmentation model [12], which utilizes Mask R-CNN [13] for a regional analysis with single NAC images. For the global analysis, we are now using the object detection model YOLOv8 [14, 15] to reduce the computational cost and required memory.

We utilize the publicly available calibrated pyramid tiff files of the LRO NAC images, which are reduced to 8bit but provide full spatial resolution. The model was trained with 512x512 pixel image tiles created from LRO NAC images displaying boulder fields and background. For all tiles, we manually mapped the boulders by creating a bounding box around the illuminated part. Currently, our dataset contains 12,000 mapped boulders with diameters > 2 m and 650 images.

Next, we applied the trained model to the lunar surface. As there is currently no global LRO NAC mosaic available, we developed an image selection algorithm. For each point on the lunar surface, from the NAC images covering that point, the one with the highest resolution is selected, while we only consider incidence angles between 35° and 75°. Each selected NAC image is then split into tiles and passed to the trained YOLO model for boulder detection.

Preliminary results

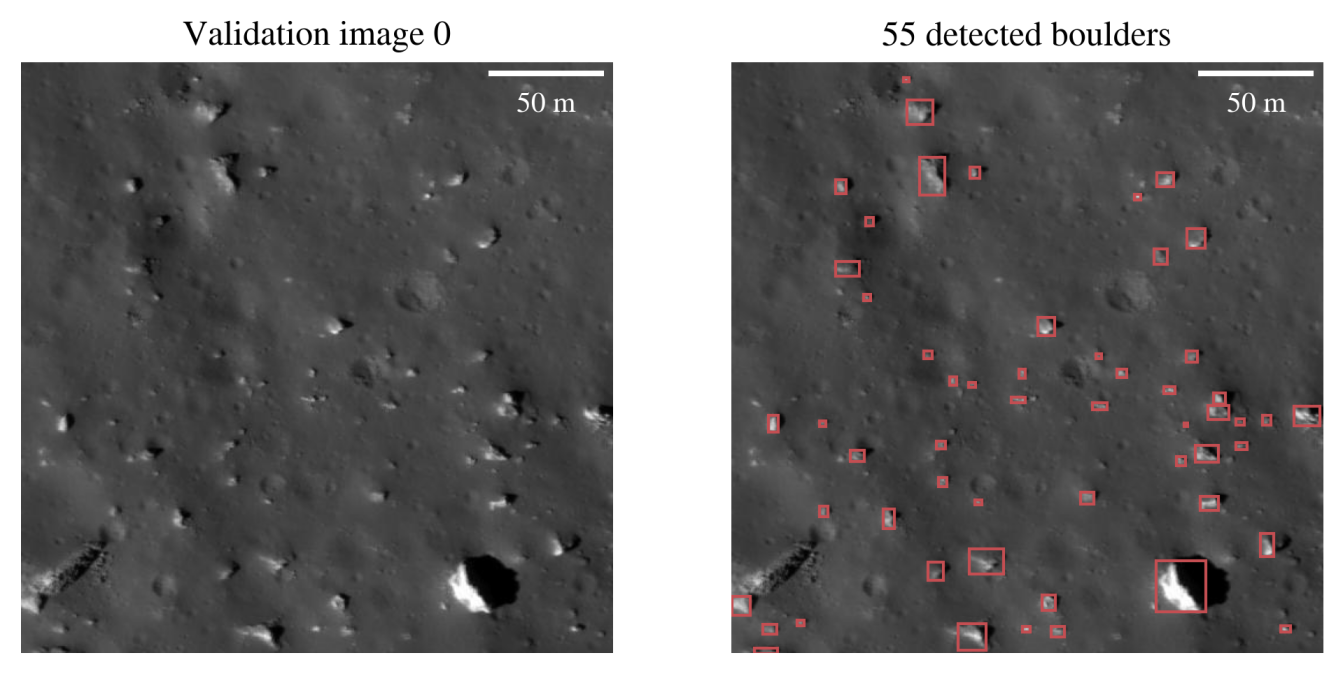

Currently, the model achieves an average precision of 65% with a precision of 66% and recall of 60% for the images of the validation set (Fig. 1). Most false negatives occur for small, likely highly abraded rocks with a reflectance that differs visually only slightly from the background regolith. False positives are mainly caused by outcrops whose boundaries cannot be clearly determined. The performance can be improved, e.g., by adding more examples of mapped boulders.

Figure 1: Examples of the detections (right) for a validation image (left, subframe of NAC image M112671946RC).

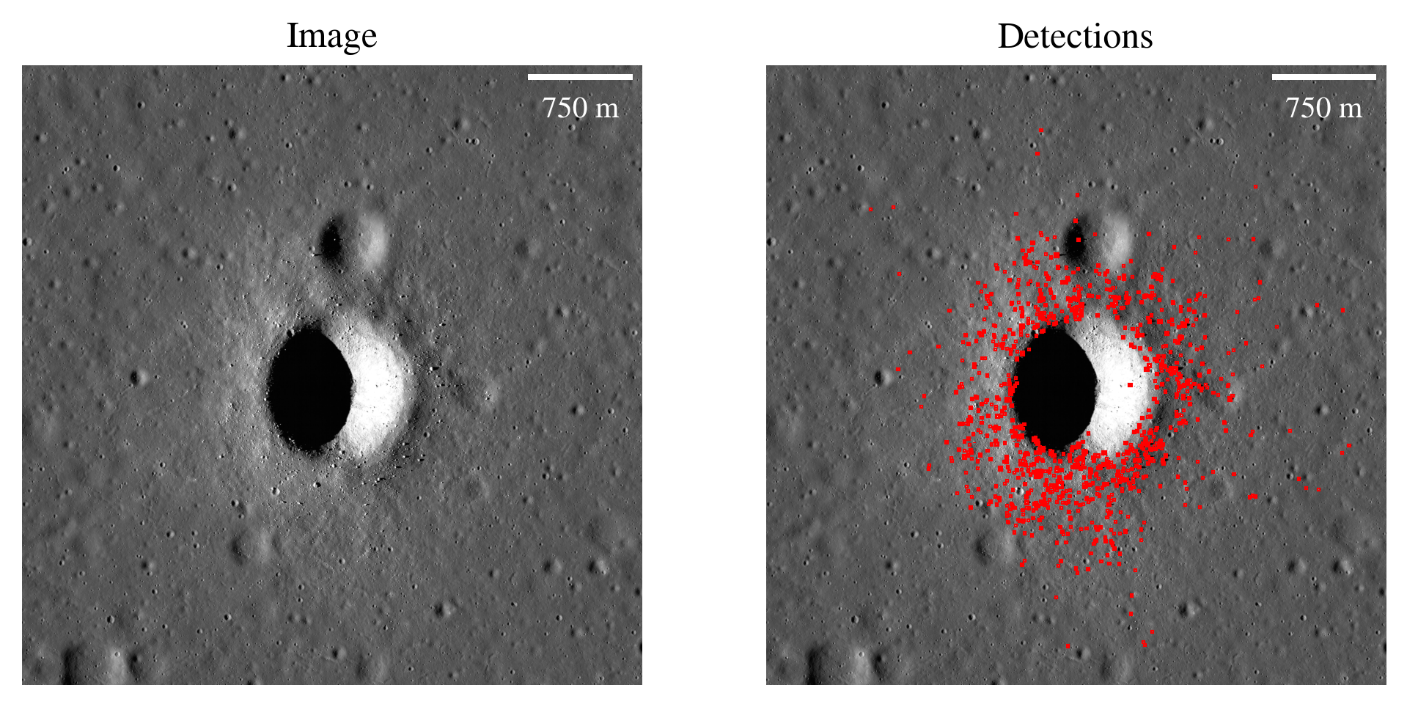

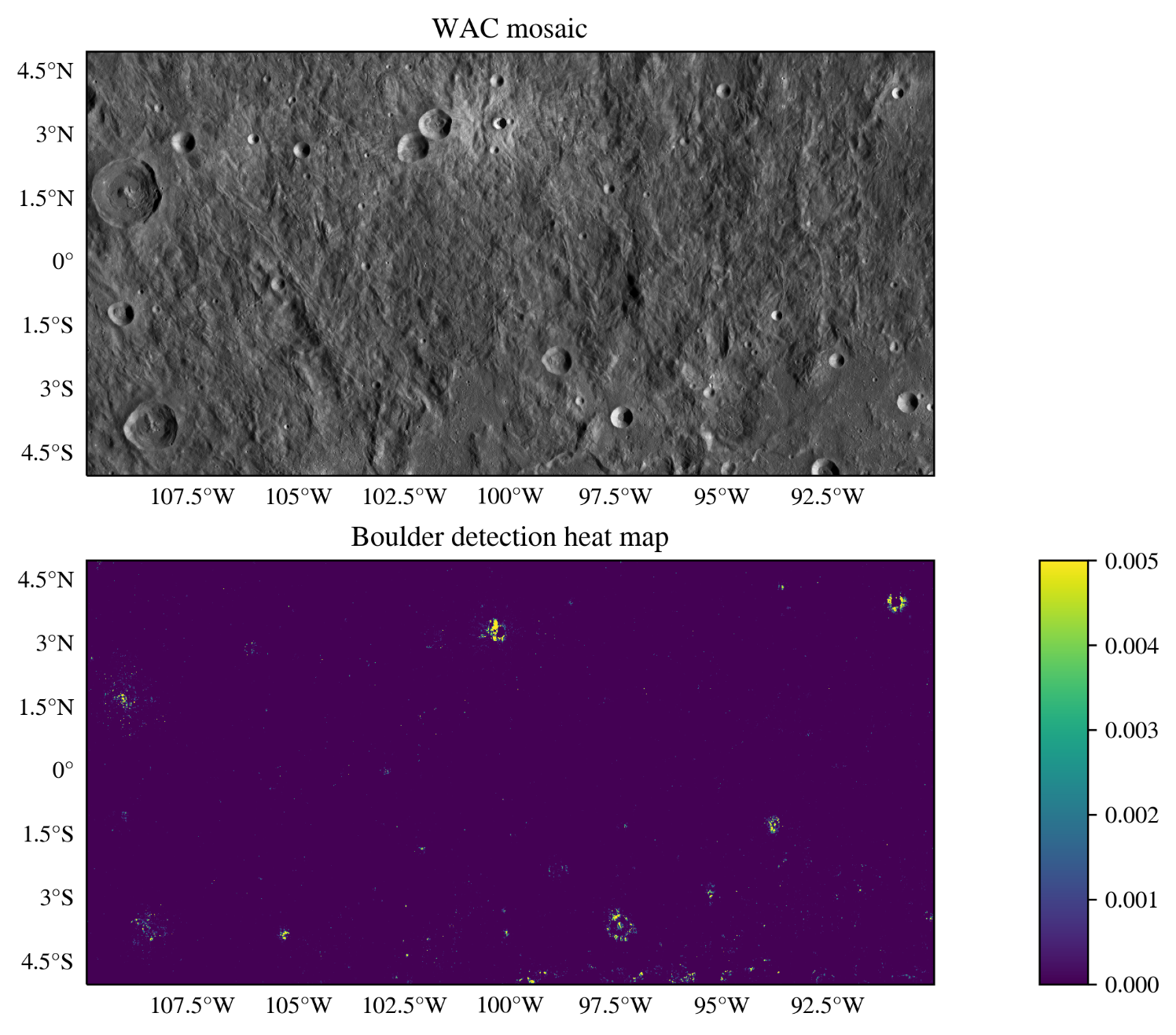

The model detects most boulders around impact craters correctly for regions that were not included in the training and validation process (Figs. 2 and 3). It can distinguish between background, particularly consisting of small craters, and boulders, which both display shadows. Boulders on crater walls remain mostly undetected, likely because of different lighting conditions currently not present in the training set and because of large shadows hiding boulders. Moreover, the specific lighting conditions for the utilized NAC images also influence the quality of the detections for boulders around craters, creating bands of differing absolute boulder abundances (Fig. 3). The mitigation of this effect is subject to current development.

Figure 2: Examples of the detections (right) for a subframe of NAC image M193103501LC (left), which was not included in the training or validation set.

Figure 3: Example of a regional heat map of the boulder abundance (bottom), defined as the areal fraction of the detected boulders. For context, the publicly available mosaic, derived from LRO’s Wide Angle Camera [5] images, is shown for the same region (top).

Outlook

We are developing a deep learning model that can automatically map boulders in LRO NAC images, allowing for a reproducible analysis on a global scale. To improve the performance of the model and to minimize the effects of the specific illumination conditions, we plan to extend the training set. The model will then be used to derive a global map of boulders on the lunar surface to answer key questions about regolith formation and evolution and to improve thermophysical models of the lunar surface.

References

[1] Dutro J. T. J., Dietrich, R. V. and Foose, R. M. (1989), AGI data sheets for geology in the field, laboratory, and office.

[2] Hörz F. et al. (2020) PSS, 194, 105105.

[3] Paige D. A. et al. (2010) Space Sci. Rev., 150, 125-160.

[4] Powell T. M. et al. (2023) JGR : Planets, 128, e2022JE007532

[5] Robinson M. S. et al. (2010) Space Sci. Rev., 150, 81-124.

[6] Li Y. and Wu B. (2018) JGR : Planets, 123, 1061-1088.

[7] Bickel V. T. et al. (2019) IEEE Trans. Geosci. Remote Sens., 57, 3501-3511.

[8] Zhu L. et al. (2021) Remote Sens., 13, 3776.

[9] Prieur N. C. et al. (2023) JGR : Planets, 128, e2023JE008013.

[10] Bickel V. T. et al. (2020) Nat. Commun., 11, 2862.

[11] Rüsch O. and Bickel V. T. (2023), Planet. Sci. J., 4, 126.

[12] Aussel B. and Rüsch O. (2024) LPSC 55, Abstract #1834.

[13] He K. et al. (2017) Proc. IEEE Int. Conf. Comput. Vis., 2961-2969.

[14] Redmon J. et al. (2016) Proc. IEEE Comput. Soc. Conf. Comput. Vis. Pattern Recognit., 779-788

[15] Jocher G., et al. (2023) Github, Ultralytics YOLO (Version 8.0.0), https://github.com/ultralytics/ultralytics.

How to cite: Aussel, B., Rüsch, O., Gundlach, B., Bickel, V. T., Kruk, S., and Sefton-Nash, E.: Global mapping of boulders on the lunar surface with deep learning, Europlanet Science Congress 2024, Berlin, Germany, 8–13 Sep 2024, EPSC2024-998, https://doi.org/10.5194/epsc2024-998, 2024.

The detailed and accurate shape of the terrain is necessary for some fields, such as geodetic analysis and geomorphology, which are concerned with the physical processes of a planet and its orbital attitudes. The Mercury Laser Altimeter (MLA) onboard the MESSENGER orbiter has performed successive altimetry measurements between 2011 and 2015. These measurements provide a global shape of the planet with approximately 3200 laser profiles, with the majority of these profiles covering the northern hemisphere due to MESSENGER's highly elliptical orbit (Cavanaugh et al., 2007). A number of studies have sought to derive digital terrain models (DTMs) of Mercury using stereo-imaging techniques (Fassett, 2016; Manheim et al., 2022, Florinsky, 2018). Some have employed MLA data (Zuber et al., 2012; Preusker et al., 2017). However, challenges remain due to discrepancies in the laser altimeter tracks, which are thought to be due to residual errors in spacecraft orbit, pointing, etc. Filtering these offsets provides an opportunity for further refinement and improvement of DTM generation methods for Mercury's surface.

The aim of this paper is to accurately extract terrain from MLA point clouds, particularly around the characteristic features of the northern hemisphere, at high resolutions. In this work, we propose an algorithm that follows a region-growing approach to extract the terrain. To identify the optimal surface solution in each local patch (Arghavanian, 2022), we apply machine learning methods and statistical parameters, starting from regions that are relatively easy to fit and then growing to the most challenging areas. Additionally, users have the flexibility to add or remove constraints or fine-tune terrain-relevant and empirical parameters based on their specific topography. The final surface is obtained by global surface fitting, thereby filling any gaps in the data. To evaluate the performance of the proposed method, the results will be compared with previously produced DEMs.

References:

Arghavanian A., 2022, Channel detection and tracking from LiDAR data in complicated terrain, PhD thesis, Middle East Technical University, Ankara, Turkey.

Cavanaugh, J.F. et al. (2007) ‘The Mercury Laser Altimeter Instrument for the MESSENGER Mission’, Space Science Reviews, 131(1), pp. 451–479. Available at: https://doi.org/10.1007/s11214-007-9273-4.

Fassett C.I., 2016, Ames stereo pipeline-derived digital terrain models of Mercury from MESSENGER stereo imaging, Planetary and Space Science, Volume 134, Pages 19-28.

Florinsky I.V., 2018, Multiscale geomorphometric modeling of Mercury, Planetary and Space Science Volume 151, Pages 56-70.

Manheim M.R., Henriksen M.R., Robinson M.S., Kerner H. R., Karas B.A., Becker K.J., Chojnacki M., Sutton S.S., Blewett D.T., 2022, High-Resolution Regional Digital Elevation Models and Derived Products from MESSENGER MDIS Images, Remote Sens, 14, 3564.

Preusker F, Stark A, Oberst J, Matz K.D., Gwinner K, Roatsch T, Watters T.R., 2017, Planetary and Space Science, Volume 142, Pages 26-37.

Zuber, M.T. et al. (2012) ‘Topography of the Northern Hemisphere of Mercury from MESSENGER Laser Altimetry’, Science, 336(6078), pp. 217–220. Available at: https://doi.org/10.1126/science.1218805.

How to cite: Arghavanian, A., Stenzel, O., and Hilchenbach, M.: Smooth Hermean surface extraction by Region Growing from MESSENGER Laser Altimeter Data , Europlanet Science Congress 2024, Berlin, Germany, 8–13 Sep 2024, EPSC2024-320, https://doi.org/10.5194/epsc2024-320, 2024.

This work introduces an approach to enhance the computational efficiency of 3D Global Circulation Model (GCM) simulations by integrating a machine-learned surrogate model into the OASIS GCM[1]. Traditional GCMs, which are based on repeatedly numerically integrating physical equations governing atmospheric processes across a series of time-steps, are time-intensive, leading to compromises in spatial and temporal resolution of simulations. This research improves upon this limitation, enabling higher resolution simulations within practical timeframes.

Speeding up 3D simulations holds significant implications in multiple domains. Firstly, it facilitates the integration of 3D models into exoplanet inference pipelines, allowing for robust characterisation of exoplanets from a previously unseen wealth of data anticipated from post-JWST instruments[2-3]. Secondly, acceleration of 3D models will enable higher resolution atmospheric simulations of Earth and Solar System planets, enabling more detailed insights into their atmospheric physics and chemistry.

This work builds upon previous efforts in both exoplanet science and Earth climate science to accelerate 3D atmospheric models. Prior work in exoplanet science primarily relies on extrapolating 1D models to approximate certain 3D atmospheric variations[4-6], which often introduces both known and unknown biases. Earth climate science, benefiting from a wealth of high-resolution observational measurements, has had a different set of methods employed, namely machine-learned surrogate models trained on such observations[7-8]. This work employs machine-learned surrogate model techniques, benchmarked in Earth climate science[8], to be used in general planetary climate models.

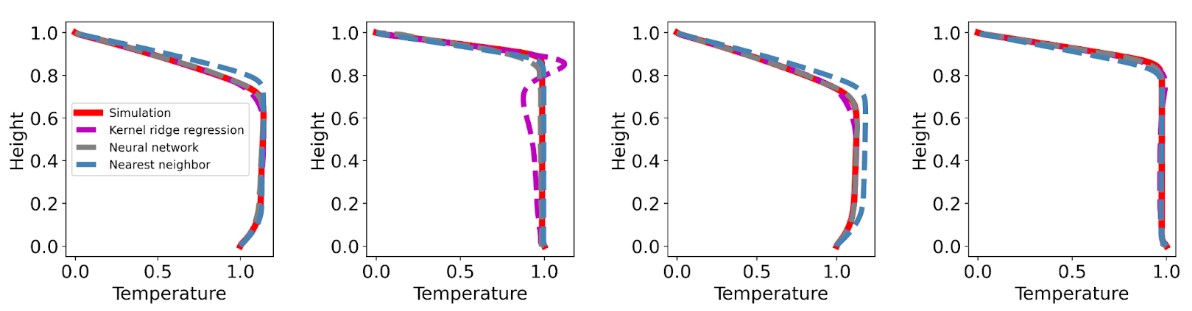

Our method replaces the radiative transfer module in OASIS with a recurrent neural network-based model trained on simulation inputs and outputs. Radiative transfer is typically one of the slowest and lowest resolution components of a GCM, thus providing the largest scope for overall model speed-up. The surrogate model was trained and tested on the specific test case of the Venusian atmosphere, to benchmark the utility of this approach in the case of non-terrestrial atmospheres. This approach yields promising results, with the surrogate-integrated GCM demonstrating above 99.0% accuracy and 10 times CPU speed-up compared to the original GCM.

In conclusion, this work presents a method to accelerate 3D GCM simulations, offering a pathway to more efficient and detailed modelling of planetary atmospheres.

References:

[1] Mendonca J. & Buchhave L., ‘Modelling the 3D Climate of Venus with OASIS’, 2020, Volume 496, Pages 3512-3530, Monthly Notices of the Royal Astronomical Society

[2] Gardner, J. P., “The James Webb Space Telescope”, Space Science Reviews, vol. 123, no. 4, pp. 485–606, 2006. doi:10.1007/s11214-006-8315-7.

[3] Tinetti, G., “Ariel: Enabling planetary science across light-years”, arXiv e-prints, 2021. doi:10.48550/arXiv.2104.04824.

[4] Q. Changeat and A. Al-Refaie, “TauREx3 PhaseCurve: A 1.5D Model for Phase-curve Description,” Astrophys J, vol. 898, no. 2, p. 155, Aug. 2020, doi: 10.3847/1538-4357/ab9b82.

[5] K. L. Chubb and M. Min, “Exoplanet atmosphere retrievals in 3D using phase curve data with ARCiS: application to WASP-43b,” Jun. 2022, doi: 10.1051/0004-6361/202142800.

[6] P. G. J. Irwin et al., “2.5D retrieval of atmospheric properties from exoplanet phase curves: Application toWASP-43b observations,” Mon Not R Astron Soc, vol. 493, no. 1, pp. 106–125, Mar. 2020, doi: 10.1093/mnras/staa238.

[7] Yao, Y., Zhong, X., Zheng, Y., & Wang, Z. 2023, Journal of Advances in Modeling Earth Systems, 15, e2022MS003445, doi: 10.1029/2022MS003445

[8] Ukkonen, P. 2022, Journal of Advances in Modeling Earth Systems, 14, e2021MS002875, doi: 10.1029/2021MS002875

How to cite: Tahseen, T., Mendonça, J., and Waldmann, I.: Accelerating Radiative Transfer in 3D Simulations of the Venusian Atmosphere, Europlanet Science Congress 2024, Berlin, Germany, 8–13 Sep 2024, EPSC2024-356, https://doi.org/10.5194/epsc2024-356, 2024.

1. Introduction

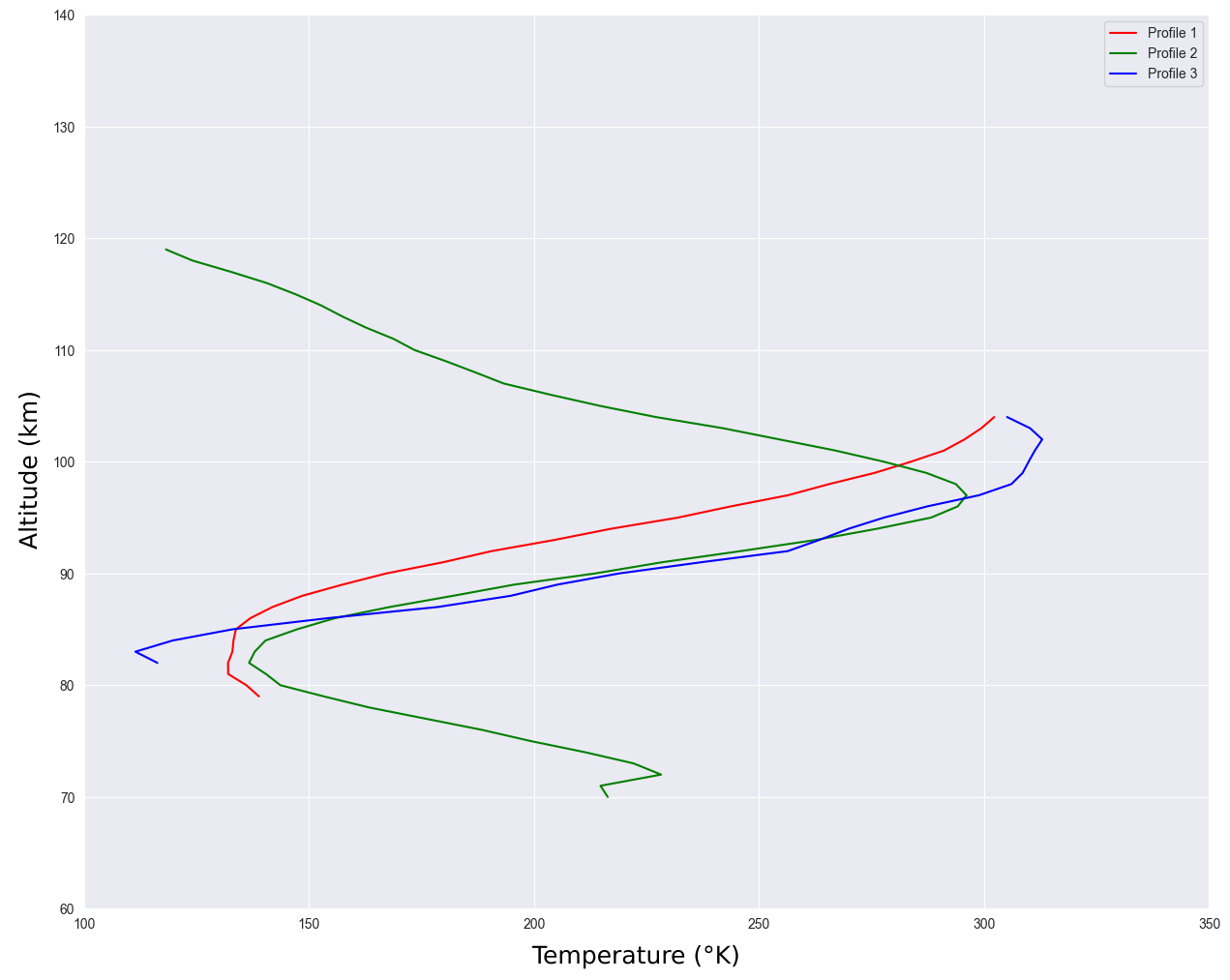

When working with atmospheric data, researchers often face partial datasets. This is the case of the SOIR instrument on board Venus Express [1], which measured the temperature of the Venus mesosphere, inferred via solar occultations. Due to inherent limitations in the measurement process, temperature measurements are missing at varying altitudes in the profiles. While altitudes of measurements across the whole dataset can span from 60 km to 160 km, most of the profiles only contain a range of 10 to 50 consecutive measurements. Figure 1 shows examples of such profiles. To fill this data gap, machine learning, and specifically probabilistic models are promising candidates.

Fig. 1: Three temperature profiles from SOIR, with varying ranges of altitude.

2. Gaussian Processes and MAGMA

Gaussian processes (GPs) are probabilistic models mainly used for predictions based on empirical observations. The capacity of GPs to estimate the uncertainty in their predictions makes them particularly appropriate for extrapolating atmospheric data. Since traditional GPs only try to fit a single function, adaptations should be implemented for the case of the SOIR dataset, which contains numerous profiles. Using one GP for each profile would not allow models to leverage information across the whole dataset to make better predictions. This motivates the use of so-called multi-task GPs, where “task” refers to an atmospheric profile in our context. The literature in the field of multi-task GPs is extensive [2, 3, 4], but most models either struggle with large datasets or have difficulties learning the covariance between the tasks. The MAGMA algorithm [5] is a recent advancement in the field of multi-task GPs that solves the above-mentioned issues. To complete a gap at a specific altitude in a profile, MAGMA uses both the profile values that are close to the gap and the mean value measured at that altitude in all other profiles. This results in better confidence intervals, even far from known observations. Previous to this work, MAGMA had never been applied to atmospheric datasets.

3. Profile Extrapolation

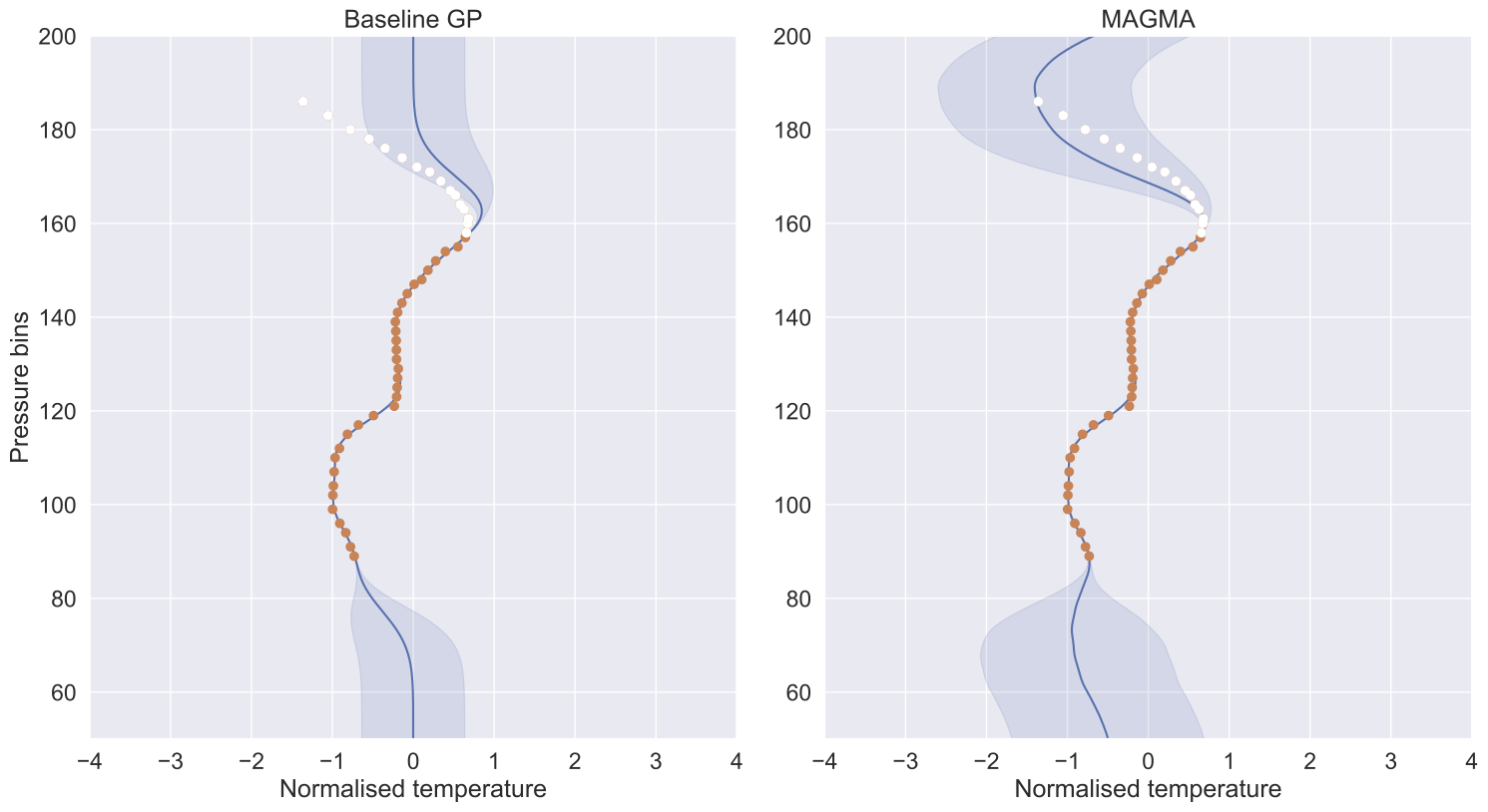

To assess the performances of MAGMA, we compare this algorithm with a traditional GP working on each profile individually. First, each profile is preprocessed to standardise each temperature value and use a logarithmic pressure scale as a height indicator rather than altitude. As MAGMA needs profiles to share common inputs, each pressure measurement is mapped to its closest bin on a 250 discrete bin scale. We then divide the dataset into train and test sets. Each test profile is further divided into test observations and validation observations. During experiments, test observations are given to the models as a starting point. The models can then make predictions, providing an estimated mean value and a confidence interval for each missing pressure bin. The validation observations are compared with their corresponding predictions to evaluate the performances of each model. Experimental results show that MAGMA has significantly better performances than a traditional GP, as seen in Figure 2. It provides estimations that are closer to the actual measurement and confidence intervals that are more precise, as shown in Figure 3.

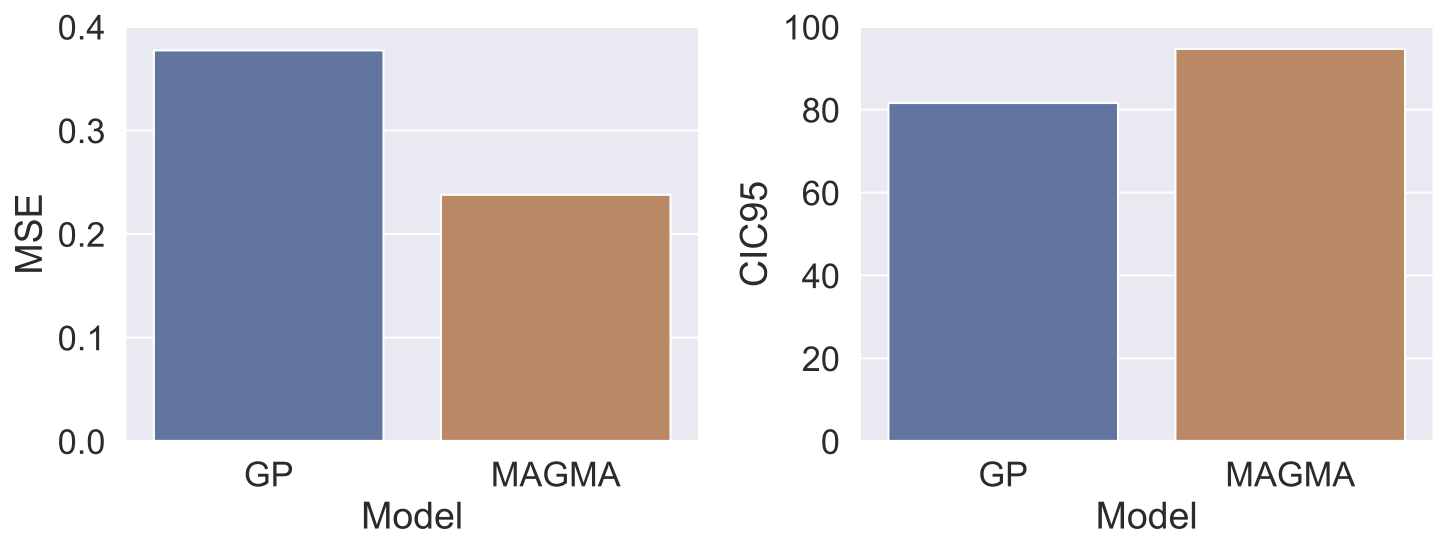

Fig. 2: Average performances of MAGMA and the baseline model on two distinct metrics. Mean Squared Error (MSE) measures the distance between predictions and true values (to be minimised). CIC95 is the ratio of validation observations actually sitting in the predicted 95% confidence interval (should be close to 95%).

Fig. 3: Predictions from the baseline and MAGMA, for different training settings. The test observations and validation observations are represented in orange and white, respectively. The grey-shaded area corresponds to the 95% confidence interval.

4. Conclusion

We apply MAGMA, a novel probabilistic learning algorithm, to the SOIR dataset, enhancing predictions for missing observations. This contribution is a first step toward a practical application of GPs to planetary aeronomy datasets. Future research will explore possible enhancements to the data preprocessing and model architectures to complete the SOIR dataset with more precise and credible estimations.

References

-

[1] L. Trompet, Y. Geunes, T. Ooms, et al. Description, accessibility and usage of SOIR/Venus Express atmospheric profiles of Venus distributed in VESPA (Virtual European Solar and Planetary Access). Planetary and Space Science, 150:60–64, 2018.

-

[2] Edwin V Bonilla, Kian Chai, and Christopher Williams. Multi-task Gaussian Process Prediction. In Advances in Neural Information Processing Systems, volume 20, 2007.

-

[3] Edwin V. Bonilla, Felix V. Agakov, and Christopher K. I. Williams. Kernel Multi-task Learning using Task-specific Features. In Proceedings of the Eleventh International Conference on Artificial Intelligence and Statistics, pages 43–50. PMLR, 2007.

-

[4] Carlos Ruiz, Carlos M. Alaiz, and José R. Dorronsoro. A survey on kernel-based multi-task learning. Neurocomputing, 577:127255, 2024.

-

[5] Arthur Leroy, Pierre Latouche, Benjamin Guedj, and Servane Gey. MAGMA: inference and prediction using multi-task Gaussian processes with common mean. Machine Learning, 111(5):1821–1849, 2022.

How to cite: Lejoly, S., Piccialli, A., Mahieux, A., Vandaele, A. C., and Frénay, B.: MAGMA Gaussian Processes to complete Venusian atmospheric profiles from SOIR , Europlanet Science Congress 2024, Berlin, Germany, 8–13 Sep 2024, EPSC2024-382, https://doi.org/10.5194/epsc2024-382, 2024.

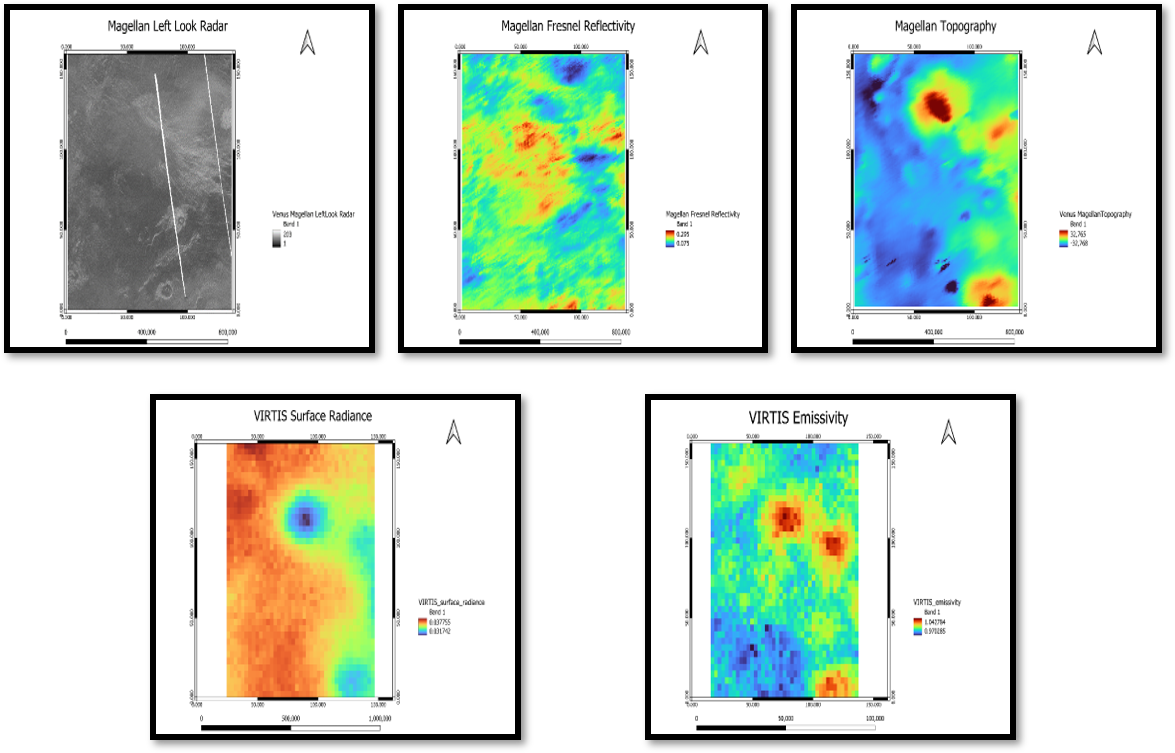

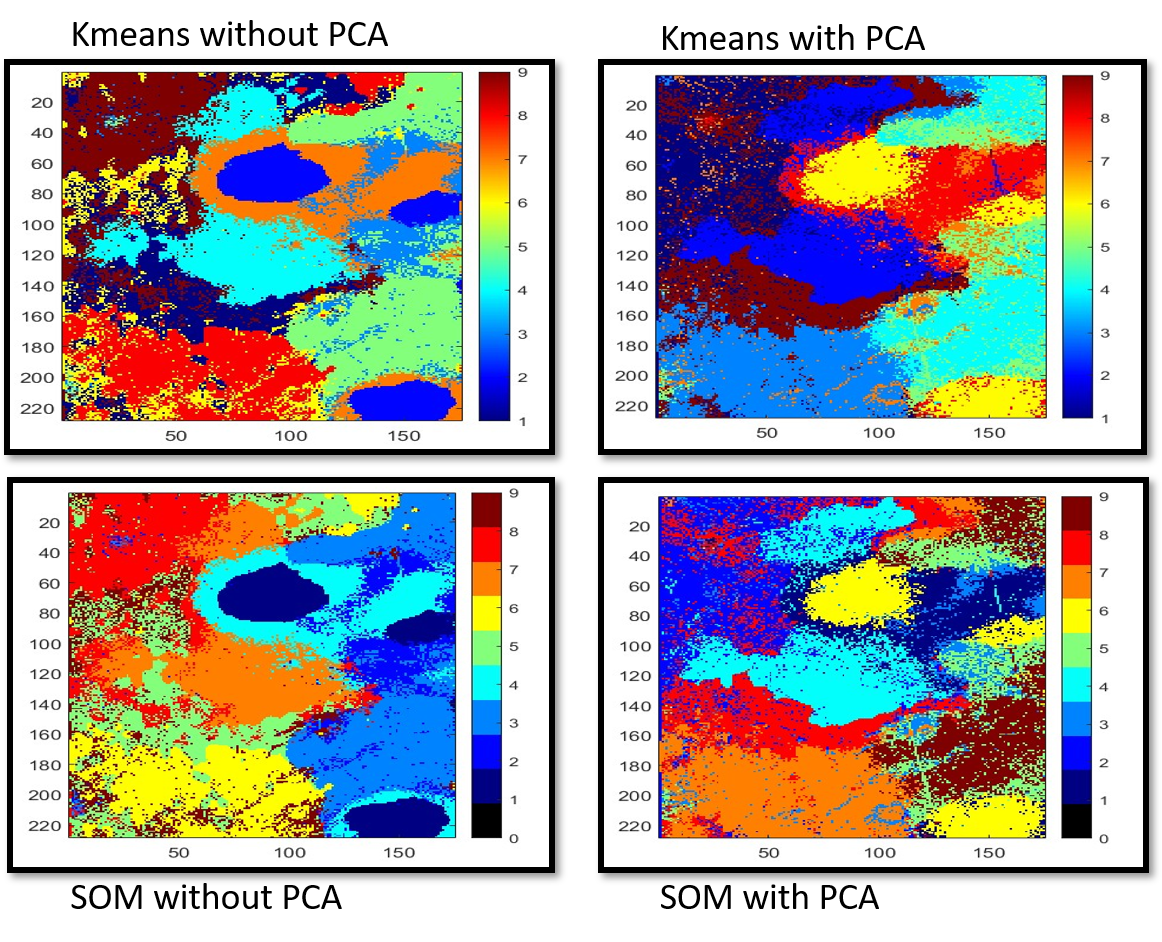

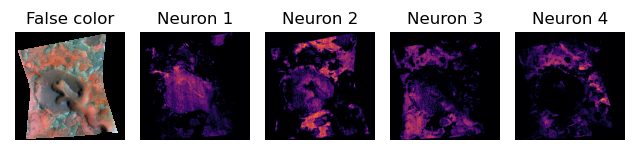

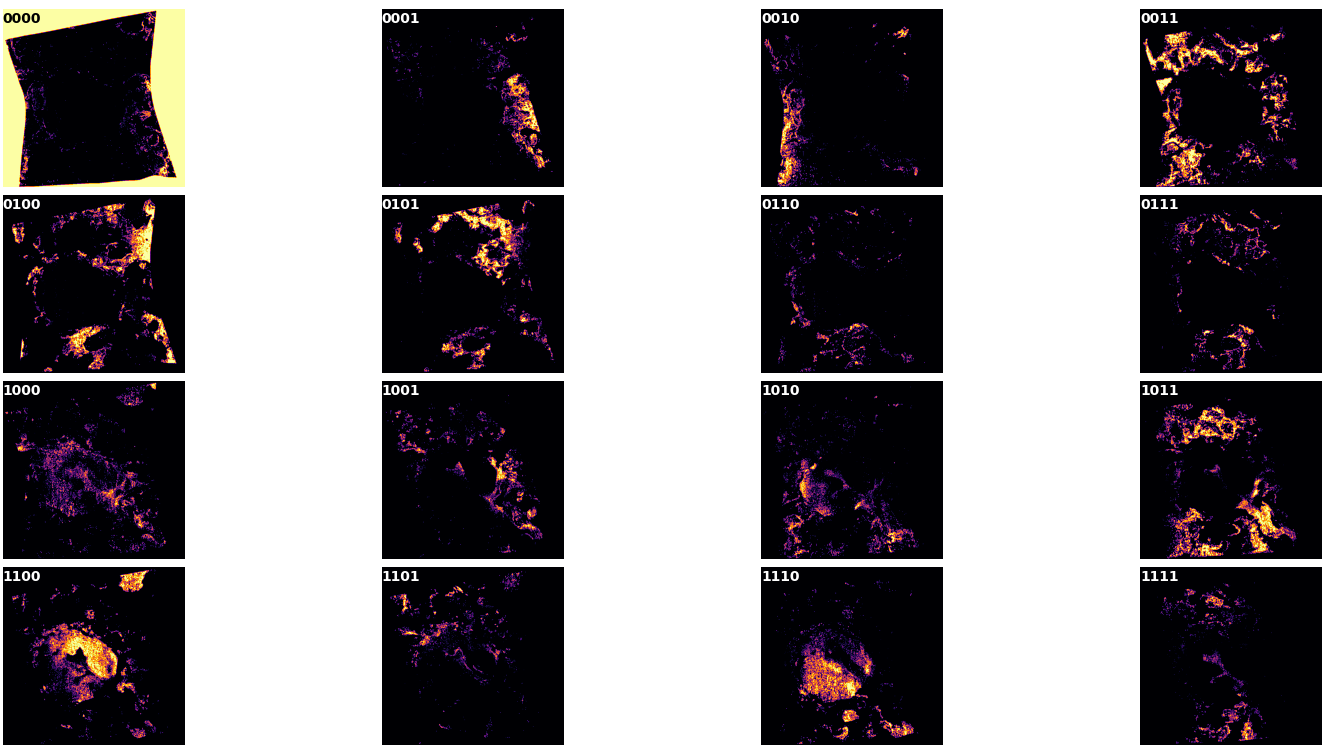

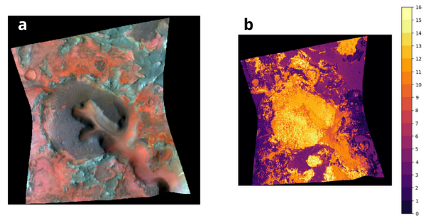

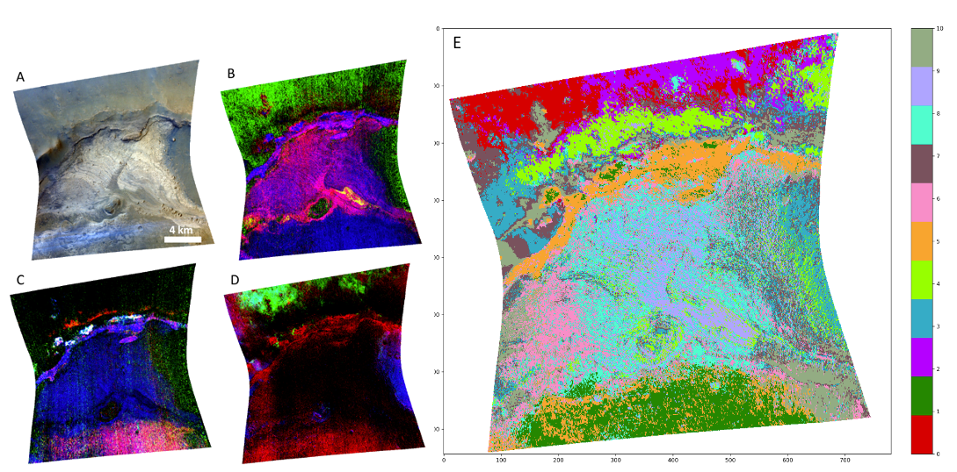

This study investigates the geological characteristics of the transition area between Themis Regio and Helen Planitia on Venus, leveraging a multi-layered dataset comprising Magellan Radar data, Magellan Fresnel Reflectivity, Magellan Topography, VIRTIS Surface Radiance and VIRTIS Emissivity. The selection of this region aligns with the standardized area utilized in the tutorials presented at the GMAP Winter School 2024, facilitating comparative analysis with established geological mapping methodologies. The data layers are meticulously stacked and subjected to comprehensive preprocessing procedures to ensure consistency and mitigate inherent challenges associated with Venusian remote sensing datasets, including atmospheric interference and topographical variations. Subsequently, unsupervised clustering techniques are employed to delineate meaningful spatial patterns within the dataset stack. In this investigation, we explore two widely recognized unsupervised clustering methodologies: K-means clustering and Self-Organizing Maps (SOM).

This study investigates the geological characteristics of the transition area between Themis Regio and Helen Planitia on Venus, leveraging a multi-layered dataset comprising Magellan Radar data, Magellan Fresnel Reflectivity, Magellan Topography, VIRTIS Surface Radiance and VIRTIS Emissivity. The selection of this region aligns with the standardized area utilized in the tutorials presented at the GMAP Winter School 2024, facilitating comparative analysis with established geological mapping methodologies. The data layers are meticulously stacked and subjected to comprehensive preprocessing procedures to ensure consistency and mitigate inherent challenges associated with Venusian remote sensing datasets, including atmospheric interference and topographical variations. Subsequently, unsupervised clustering techniques are employed to delineate meaningful spatial patterns within the dataset stack. In this investigation, we explore two widely recognized unsupervised clustering methodologies: K-means clustering and Self-Organizing Maps (SOM).

Notably, our analysis reveals that the SOM yields a less cluttered cluster map than the k-means algorithm within the Venusian dataset and appears to capture intricate geological features. Furthermore, the SOM yields a geologically reasonable scene segmentation also without the application of dimensionality reduction techniques such as Principal Component Analysis (PCA), suggesting its robustness in handling high-dimensional datasets inherent to planetary remote sensing. Moreover, beyond traditional clustering methodologies, our research is planned to extend towards supervised learning approaches utilizing the outputs of unsupervised clustering for automated feature identification. This hybrid methodology harnesses the strengths of both unsupervised and supervised learning paradigms, offering a comprehensive framework for geological mapping and feature characterization on planetary surfaces. The findings of this study contribute to advancing our understanding of Venusian geology and refining remote sensing methodologies tailored for planetary exploration. The insights garnered from this research are instrumental not only in unraveling the geological complexities of Venus but also in informing future missions and exploration endeavors aimed at unraveling the mysteries of our neighboring planet.

How to cite: Garg, S. and Woehler, C.: A Case Study of Classification Comparison of Venusian Data Layers for Geological Mapping, Europlanet Science Congress 2024, Berlin, Germany, 8–13 Sep 2024, EPSC2024-827, https://doi.org/10.5194/epsc2024-827, 2024.

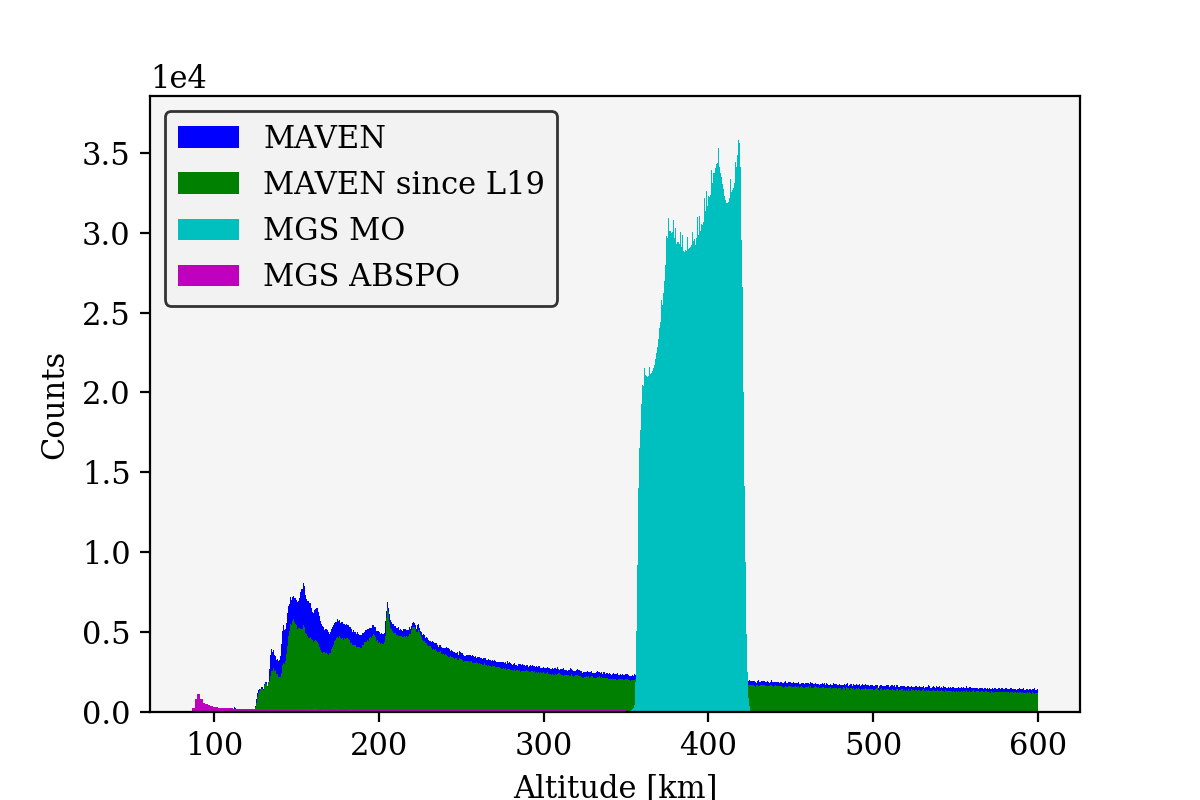

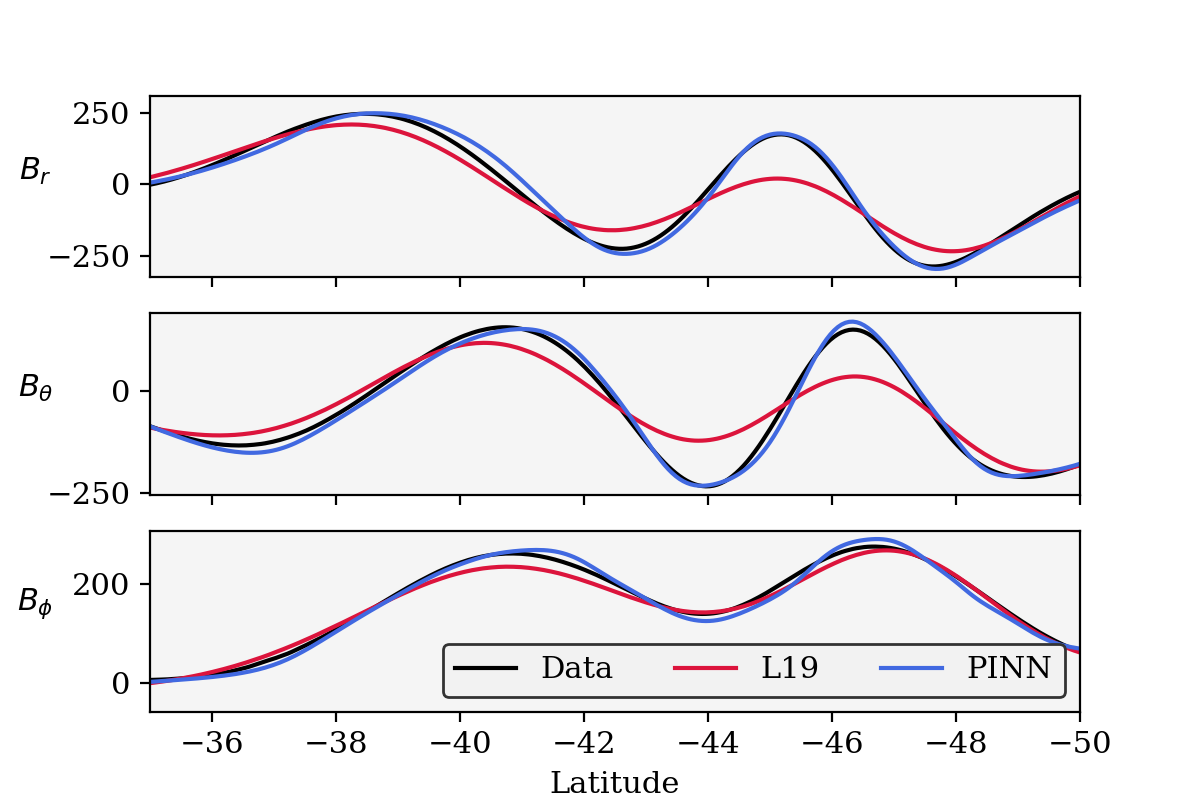

Introduction

Mars’ dust cycle is a critical factor that drives the weather and climate of the planet. Airborne

dust affects the energy balance that drives the atmospheric dynamic. Therefore, for studying the present-day and recent-past climate of Mars we need to observe and understand the different processes involved in the dust cycle. To this end, the Mars Environmental Dynamics Analyser (MEDA) station [1] includes a set of sensors capable of measuring the radiance fluxes, the wind direction and velocity, the pressure, and the humidity over the Martian surface. Combining these observations with radiative transfer (RT) simulations, airborne dust particles can be detected and characterized (optical depth, particle size, refractive index) along the day. The retrieval of these dust properties allows us to analyze dust storms or dust-lifting events, such as dust devils, on Mars [2][3].

Dust devils are thought to account for 50% of the total dust budget, and they represent a

continuous source of lifted dust, active even outside the dust storms season. For these reasons, they have been proposed as the main mechanism able to sustain the ever-observed dust haze of the Martian atmosphere. Our radiative transfer simulations indicate that variations in the dust loading near the surface can be detected and characterized by MEDA radiance sensor RDS [4].

This study reanalyzes the dataset of dust devil detections obtained in [3] employing artificial

intelligence techniques including anomaly detection based on autoencoders [5] and deep learning models [6] to analyze RDS and pressure sensor data. As we will show, preliminary results indicate that our AI models can successfully identify and characterize these phenomena with high accuracy. The final aim is to develop a powerful tool that can improve the database for the following sols of the mission, and subsequently extend its use for other atmospheric studies.

Dataset

The dataset used in this study includes data from 365 Martian days, which represents half a Martian year of observations and it was collected with a temporal resolution of one sample per second (for 12 hours in average observations per sol). This dataset, which has been labeled by hand, contains 424 detected Dust Devil events. The duration of these events varies considerably, ranging from just a few seconds to several minutes. The precise manual labeling is crucial for training reliable machine learning models that can effectively recognize and predict these events.

AI techniques

Deep learning is increasingly used to detect events in time-based signals, significantly enhancing accuracy and speed. Methods like CNN [7] and RNN [8] identify complex patterns effectively. These models learn from vast data, enabling real-time and predictive analytics.

Deep learning-based autoencoders are powerful tools for anomaly detection in temporal signals [5], offering a sophisticated method to capture complex patterns and identify outliers. Autoencoders, which are neural networks designed to reconstruct their input, learn to represent normal patterns during training. By minimizing the reconstruction error, these models learn to encode the regular, predictable aspects of temporal data. When an anomalous signal occurs, it typically results in a higher reconstruction error due to deviations from learned patterns.

The nature of the events and the low frequency of occurrence presents two main challenges for the Machine Learning algorithm:

• The database is highly unbalance. As it is said in Section Dataset, 424 events are detected over 365 Martian days. It means that less than 0.2% of the database corresponds to Dust Devil cases.

• Due to the spatial nature of the events, the Dust Devils manifests in different RDS sensors each time. This makes it difficult for the algorithm to generalize.

Results

For the experiments, the data is windowed and data augmentation techniques are used to try to correct the issue of class imbalance. The training set was selected randomly and intentionally balanced to ensure an equal number of samples per class. For testing the models, data from six randomly chosen suns are selected. The following results (Table 1) are obtained:

| Name | Train Accuracy | Test Accuracy |

| DNN | 75.0% | 65.7% |

| CNN | 82.5% | 78.9% |

| LSTM | 73.1% | 67.0% |

At the time of writing this document, there are no consistent results for autoencoder’s approach.

Conclusions and Future Steps

This study demonstrates that data augmentation and advanced AI techniques can significantly improve the detection of dust devils on Mars. The use of deep learning, specifically convolutional neural networks (CNNs), has shown to outperform other models in accuracy both during training and testing phases.

Future work will focus on enhancing feature extraction, exploring new data augmentation techniques, and further developing autoencoders.

Acknowledgements

This work was funded by the Spanish Ministry of Science and Innovation under Project PID2021-129043OB-I00 (funded by MCIN/AEI/10.13039/501100011033/FEDER, EU), and by the Community of Madrid and University of Alcala under project EPU-INV/2020/003.

[1] Rodriguez-Manfredi at al. The mars environmental dynamics analyzer, meda. a suite of

environmental sensors for the mars 2020 mission. Space Science Reviews, 217, 04 2021.

[2] Lemmond et al. Dust, sand, and winds within an active martian storm in jezero crater. Geophysical Research Letters, 49(17):e2022GL100126, 2022. e2022GL100126 2022GL100126.

[3] Toledo et al. Dust devil frequency of occurrence and radiative effects at jezero crater, mars, as

measured by meda radiation and dust sensor (rds). Journal of Geophysical Research: Planets,

128(1):e2022JE007494, 2023. e2022JE007494 2022JE007494.

[4] Ap´estigue et al. Radiation and dust sensor for mars environmental dynamic analyzer onboard m2020 rover. Sensors, 22(8), 2022.

[5] Fatemeh Esmaeili, Erica Cassie, Hong Phan T. Nguyen, Natalie O. V. Plank, Charles P. Unsworth, and Alan Wang. Anomaly detection for sensor signals utilizing deep learning autoencoder-based neural networks. Bioengineering, 10(4), 2023.

[6] Alaa Sheta, Hamza Turabieh, Thaer Thaher, Jingwei Too, Majdi Mafarja, Md Shafaeat Hossain, and Salim R. Surani. Diagnosis of obstructive sleep apnea from ecg signals using machine learning and deep learning classifiers. Applied Sciences, 11(14), 2021.

[7] Naoya Takahashi, Michael Gygli, Beat Pfister, and Luc Van Gool. Deep convolutional neural

networks and data augmentation for acoustic event detection. CoRR, abs/1604.07160, 2016.

[8] Yong Yu, Xiaosheng Si, Changhua Hu, and Jianxun Zhang. A Review of Recurrent Neural

Networks: LSTM Cells and Network Architectures. Neural Computation, 31(7):1235–1270, 07

2019.

How to cite: Aguilar, M., Apéstigue, V., Mohino, I., Gil, R., Toledo, D., Arruego, I., Hueso, R., Martínez, G., Lemmon, M., Newman, C., Ganzer, M., de la Torre, M., and Rodríguez, J. A.: Advance Dust Devil Detection with AI using Mars2020 MEDA instrument, Europlanet Science Congress 2024, Berlin, Germany, 8–13 Sep 2024, EPSC2024-538, https://doi.org/10.5194/epsc2024-538, 2024.

Introduction: The north polar region of Mars is one of the most active places of the planet with avalanches [1] and ice block falls [2] being observed every year on High Resolution Imaging Science Experiment (HiRISE) data. Both phenomena originate at the steep icy scarps, which exist on the interface between two adjacent geological units, the older and darker Basal Unit (BU) and the younger and brighter Planum Boreum 1 unit, which is a part of the so called North Polar Layered Deposits (NPLD). We are primarily interested in monitoring the current scarp erosion rate by tracking the deposition of ice debris at the foot of the polar cap. A similar analysis was already performed by Shu Se et. al [3], yet focusing only on the areas of the scarp where the ice fragments might have originated from. We strive to confirm the previous findings and establish an automated pipeline for continuous monitoring of the area throughout the entire MRO mission.

Methodology: Given the large scale of the region of interest, combined with a growing amount of available satellite data we believe that automation and optimization are key. To get from raw HiIRISE .JP2 products to clean ice block detections in approx. 10 min/image we propose the following routine:

- Gathering the data: Unfortunately not every image is equally valuable. We want to focus on the areas that were imaged over multiple years, as this allows us to continuously follow the evolution of polar ice. The filtering process can be automated by using python scripts and connecting directly to the PDS database.

- Scarp mapping with UNet: Since ice blocks accumulate at the bottom of the scarps, we only need to consider the portion of BU adjacent to NPLD. With a modified UNet [4] we can segment large HiRISE products and then use the resulting masks to derive precise scarp coordinates, extract only the relevant portions of the images & greatly reduce the computation times of the subsequent steps.

- Upscaling & sharpening with KernelGAN [5]: Large boulders are usually easy to identify due to their well differentiated visual features. As the blocks get smaller features become less pronounced and margins between different object instances shrink. We have experimentally established that cutting HiRISE products into tiles of 160x160 pixels and then upscaling them to 640x640 yields the best results when performing object detection.

- Co-registration: to perform change detection we need to co-register the images with pixel wise precision. Using DTMs creates additional complexity and can be avoided with the help of a purely image based method such as cv.findHomography.