- 1Institute of Planetary Research, Planetary Geodesy, Berlin, Germany (frank.preusker@dlr.de)

- 2Southwest Research Institute, 1050 Walnut Street, Suite 300, Boulder, CO 80302, USA

- 3NASA/Goddard Space Flight Center, Greenbelt, MD 20771, USA

Introduction: In October 2021, the Lucy space probe launched on its mission to explore a series of Jupiter Trojans. Close flybys will be used to investigate and determine the shape, diversity, surface composition and geology of the objects [1]. On November 1, 2023, Lucy reached the recently added target, asteroid (152830) Dinkinesh, at a closest-approach distance of approximately 430 kilometers.

Data set: The Lucy space probe is equipped with two different types of framing cameras, the Lucy Long Range Reconnaissance Imager (L'LORRI) [2] and the Terminal Tracking CAMera pair (TTCAM) [3], which are mounted on a pivoting platform (Instrument Pointing Platform, IPP). Depending on the distance between the spacecraft and the object, either the wide-angle TTCAMs or the narrow-angle L'LORRI is used. For the reconstruction of the surface of Dinkinesh, only L'LORRI images were used. A total of 194 L'LORRI images were selected, covering a period of ±40 minutes around closest approach (at 16:54:40 UTC) with a best ground sampling distance of 2.1-8.4 meters. For these images, the image resolution was increased using a disk-deconvolution algorithm [4] that takes the point spread function into account.

Methods: The stereo-photogrammetric processing for Dinkinesh is based on a software suite that has been developed over the last decade and applied successfully to several planetary image data sets [5-9]. It covers the classical workflow from photogrammetric block adjustment to the generation of digital terrain models (DTMs) or surface models and maps. For classic missions in which the object is examined from orbit, these methods are fully sufficient. For fly-by missions, however, they must be combined with "shape from silhouette". This reduces systematic pointing errors to a minimum by fitting the line of sight of the camera to the body center, and it allows the reconstruction of surface areas (limbs) that cannot be determined by classical photogrammetric analysis.
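The silhouette idea can be illustrated with a minimal sketch. It is not the software suite described above; it assumes a single image in which the body appears as a bright, roughly circular disk against dark space. The function names `limb_points` and `fit_circle`, the ray count, and the circular-limb assumption are all hypothetical choices made for illustration. Limb pixels are taken where the brightness drops most sharply along rays cast from a rough center guess, and an algebraic least-squares circle fit then gives the offset between the assumed and the observed body center.

```python
import numpy as np

def limb_points(image, center, n_rays=90, max_radius=None):
    """Find limb pixels along rays cast outward from a rough center guess.

    ASSUMPTION (illustrative only): the body is brighter than the background
    and every ray leaves the body exactly once inside the image.
    """
    h, w = image.shape
    cx, cy = center
    if max_radius is None:
        max_radius = min(h, w) // 2
    radii = np.arange(max_radius)
    points = []
    for theta in np.linspace(0.0, 2.0 * np.pi, n_rays, endpoint=False):
        # Nearest-neighbour sampling of the brightness profile along the ray.
        xs = np.clip(np.round(cx + radii * np.cos(theta)).astype(int), 0, w - 1)
        ys = np.clip(np.round(cy + radii * np.sin(theta)).astype(int), 0, h - 1)
        profile = image[ys, xs].astype(float)
        # The strongest bright-to-dark step marks the surface-to-space transition.
        i = int(np.argmin(np.diff(profile)))
        points.append((xs[i], ys[i]))
    return np.array(points, dtype=float)

def fit_circle(points):
    """Algebraic least-squares circle fit; returns (xc, yc, radius) in pixels.

    Solves x^2 + y^2 = 2*xc*x + 2*yc*y + c with c = r^2 - xc^2 - yc^2.
    """
    x, y = points[:, 0], points[:, 1]
    A = np.column_stack([2.0 * x, 2.0 * y, np.ones_like(x)])
    b = x**2 + y**2
    (xc, yc, c), *_ = np.linalg.lstsq(A, b, rcond=None)
    return float(xc), float(yc), float(np.sqrt(c + xc**2 + yc**2))
```

In a real pipeline the fitted center offset would be converted into a pointing correction using the camera model, and the limb would be compared against a projected shape rather than a circle; both steps are omitted here.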

Results: First, we pre-adjusted the orientation of all 194 images from the closest approach phase by fitting the line of sight of each image to the center of Dinkinesh's standard sphere (mean radius of about 360 m). Then all images with a ground sampling distance better than 5 m/pixel were selected for stereo-photogrammetric processing. For these 45 selected images, we applied stereo requirements as constraints to identify independent stereo image combinations.
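The selection step can be sketched as follows. The stereo requirements themselves are not specified in the text, so the convergence-angle window used here (`min_angle`, `max_angle`) is a purely hypothetical stand-in, as are the `Image` record and the function names. The pixel scale of about 5 microradians is also an assumption, not a value from the text; it is chosen only because it gives roughly 2.1 m per pixel at about 430 km.

```python
import math
from dataclasses import dataclass
from itertools import combinations

# ASSUMPTION: approximate angular pixel scale in radians; not stated in the text.
IFOV_RAD = 5.0e-6

@dataclass
class Image:
    name: str
    range_m: float    # spacecraft-to-target distance in meters
    view_dir: tuple   # unit vector from target to spacecraft, body frame

def gsd(img, ifov=IFOV_RAD):
    """Ground sampling distance in m/pixel (small-angle approximation)."""
    return img.range_m * ifov

def select_images(images, max_gsd=5.0):
    """Keep images whose ground sampling distance is better than max_gsd."""
    return [im for im in images if gsd(im) < max_gsd]

def stereo_angle_deg(a, b):
    """Convergence angle between the viewing directions of two images."""
    dot = sum(p * q for p, q in zip(a.view_dir, b.view_dir))
    return math.degrees(math.acos(max(-1.0, min(1.0, dot))))

def stereo_pairs(images, min_angle=10.0, max_angle=45.0):
    """Enumerate image pairs inside a HYPOTHETICAL convergence-angle window."""
    return [(a.name, b.name) for a, b in combinations(images, 2)
            if min_angle <= stereo_angle_deg(a, b) <= max_angle]
```

A production workflow would typically add further criteria, such as common surface coverage, similar illumination, and comparable resolution, before accepting a pair.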

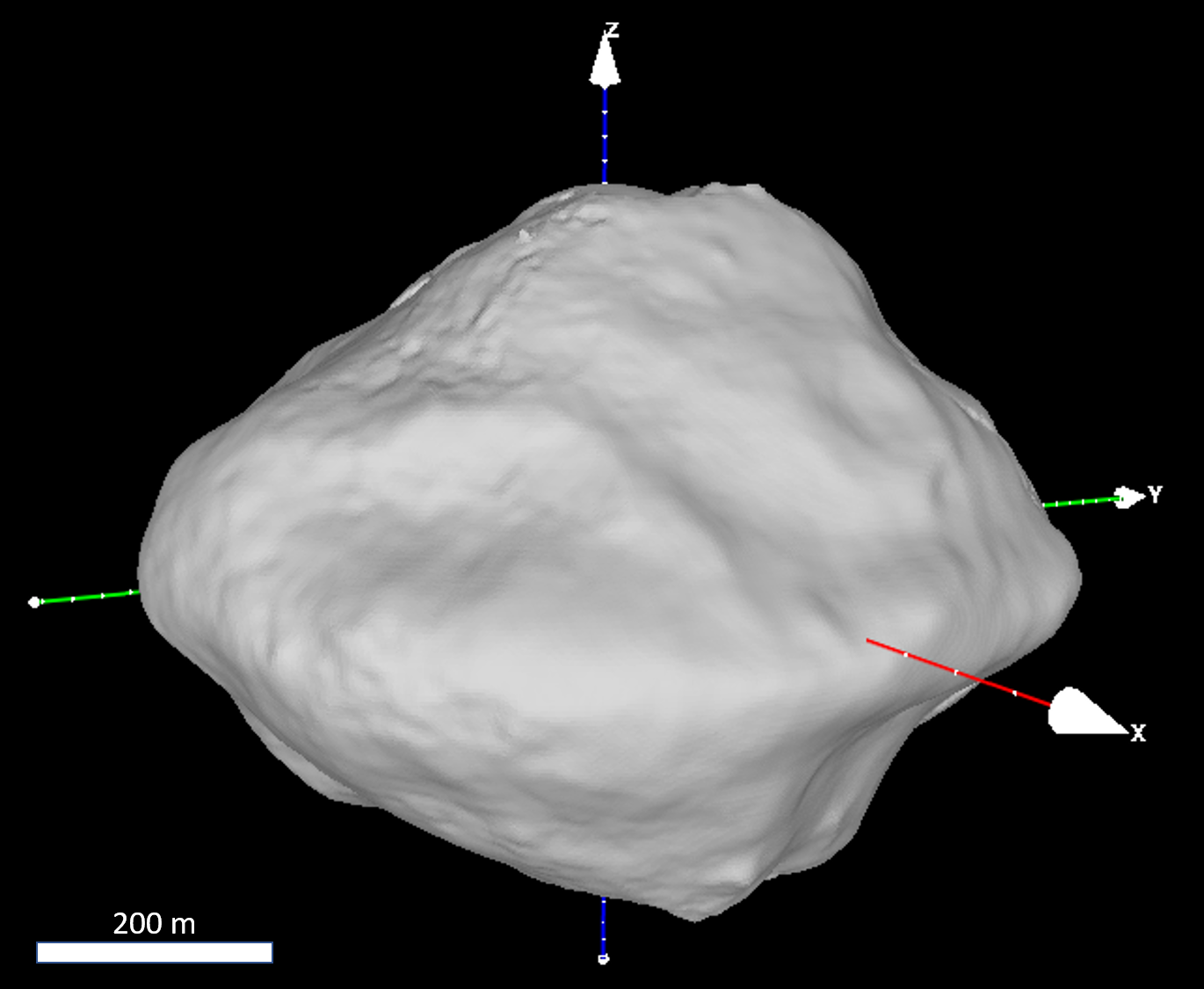

Using the resulting 94 stereo image combinations, we applied sparse multi-image matching to set up a 3D control network of about 3,000 surface points. The control point network defines the input for the photogrammetric least-squares adjustment, in which final corrections for the image orientation data are derived. The one-sigma three-dimensional (3D) point accuracy of the resulting ground points improved to ±0.7 m. Furthermore, the control point network was used to determine Dinkinesh’s spin axis orientation to an accuracy of ±0.5°. Together with the rotation rate, which was determined from light curve measurements of images from the departure phase, a preliminary coordinate system was defined [10]. Finally, dense image matching was carried out, yielding about 5.4 million object points. From these object points, we generated a stereo-derived shape model with a lateral spacing of 2.1 m/pixel (3 pixel/degree) and a vertical accuracy of about 0.5 m. The stereo-derived shape model covers approximately 45 percent of Dinkinesh’s surface. With this stereo-derived surface model as a replacement for the standard sphere in the first step, the pre-orientation of the complete image data set was repeated. In addition, the image coordinates of the limb were determined for each image using the contrast transition from the surface to the space background and converted into object coordinates. Finally, the stereo-derived object points were combined with the limb-derived object points to generate the final surface model, which increases the surface coverage to approximately 60 percent (Figure 1).
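The two quoted grid resolutions agree with each other. As a quick check, assuming the grid spacing is measured along a great circle of a sphere with the mean radius $R \approx 360$ m given above, a sampling of $n = 3$ pixel/degree corresponds to

$$
\Delta s = \frac{2\pi R}{360 \cdot n} = \frac{2\pi \cdot 360\ \mathrm{m}}{360 \cdot 3} = \frac{2\pi}{3}\ \mathrm{m} \approx 2.09\ \mathrm{m},
$$

which matches the stated lateral spacing of about 2.1 m/pixel.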

Outlook: A final version of the Dinkinesh global shape model and an overall re-assessment of Dinkinesh’s geophysical properties can be expected from the analysis of the entire L’LORRI image dataset of Dinkinesh and will be published soon [10]. Furthermore, derived data products such as a monochrome global base map and a global albedo map will be presented at the time of the conference.

Acknowledgments: The Lucy mission is funded through the NASA Discovery program on contract No. NNM16AA08C.

References: [1] Levison H.F. et al., (2021), Planet. Sci. J., 2, 5, 171. [2] Weaver H.A. et al., (2023), Space Sci. Rev., 219, 82. [3] Bell J.F. et al., (2023), Space Sci. Rev., 219, 86. [4] Robbins et al., (2023), Planet. Sci. J., 4, 234. [5] Preusker F. et al., (2017), A&A, 607, L1. [6] Preusker F. et al., (2015), A&A, 583, A33. [7] Preusker F. et al., (2019), A&A, 632, L4. [8] Gwinner K. et al., (2009), Photogrammetric Engineering & Remote Sensing, 75, 1127–1142. [9] Scholten F. et al., (2012), JGR, 117. [10] Levison H.F. et al., (2024), Nature, in preparation.

Figure 1. 3D view of asteroid (152830) Dinkinesh’s global surface model.

How to cite: Preusker, F., Mottola, S., Matz, K.-D., Levison, H., Marchi, S., Noll, K., and Spencer, J.: Shape model of asteroid (152830) Dinkinesh from LUCY imagery, Europlanet Science Congress 2024, Berlin, Germany, 8–13 Sep 2024, EPSC2024-963, https://doi.org/10.5194/epsc2024-963, 2024.